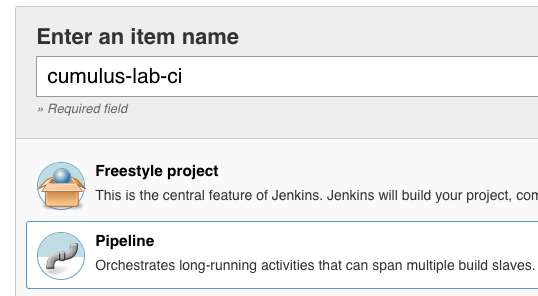

I wanted to make my life a bit easier, so I created a simple Jenkins pipeline that spins up the AWS instances and deploys OpenShift. For the details, read my previous article, Deploying OpenShift 3.11 Container Platform on AWS using Terraform. The pipeline contains input steps that pause it, so the OpenShift cluster keeps running instead of being destroyed directly after the installation. Also check out the blog post I wrote about running Jenkins in a container with Ansible and Terraform.

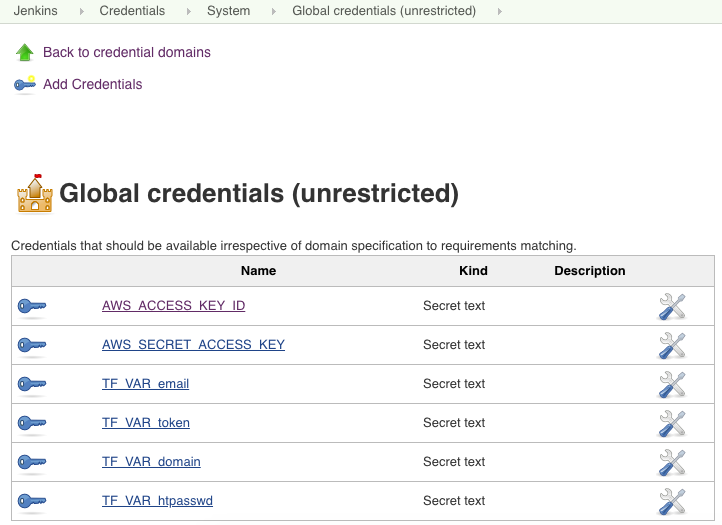

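The Jenkins pipeline requires a few environment variables that hold the credentials for AWS and CloudFlare. Create these credentials in Jenkins beforehand; they are loaded when the pipeline starts. Terraform reads every environment variable prefixed with TF_VAR_ as an input variable, so TF_VAR_domain, for example, sets the Terraform variable domain.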
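
If one of these credentials is missing, the failure usually only shows up later inside Terraform. A small preflight check can catch it up front. This is a sketch, not part of the original pipeline: the function name require_env is hypothetical, and it assumes a POSIX shell with printenv available, as on typical Linux Jenkins agents.

```shell
#!/bin/sh
# Hypothetical preflight check (not part of the original pipeline):
# prints every variable that is unset or empty and returns 1 if any is missing.
require_env() {
  missing=0
  for name in "$@"; do
    # printenv prints the value of an exported variable (nothing if unset)
    if [ -z "$(printenv "$name")" ]; then
      echo "missing required environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Defined and called within the same sh step, for example `require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY TF_VAR_email TF_VAR_token TF_VAR_domain TF_VAR_htpasswd`, it returns non-zero when any of the six variables is missing, and because Jenkins runs sh steps with -e by default, that stops the build.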

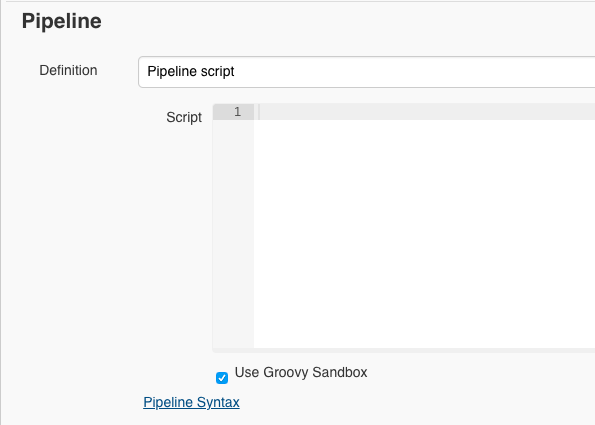

Here are the pipeline stages, which are self-explanatory:

```groovy
pipeline {
    agent any

    // Credentials created in Jenkins beforehand, exposed as environment variables
    environment {
        AWS_ACCESS_KEY_ID     = credentials('AWS_ACCESS_KEY_ID')
        AWS_SECRET_ACCESS_KEY = credentials('AWS_SECRET_ACCESS_KEY')
        TF_VAR_email          = credentials('TF_VAR_email')
        TF_VAR_token          = credentials('TF_VAR_token')
        TF_VAR_domain         = credentials('TF_VAR_domain')
        TF_VAR_htpasswd       = credentials('TF_VAR_htpasswd')
    }

    stages {
        stage('Prepare workspace') {
            steps {
                // Remove files from previous runs (hidden files such as .git are kept)
                sh 'rm -rf *'
                git branch: 'aws-dev', url: 'https://github.com/berndonline/openshift-terraform.git'
                // Generate a fresh SSH key pair without a passphrase for the instances
                sh 'ssh-keygen -b 2048 -t rsa -f ./helper_scripts/id_rsa -q -N ""'
                sh 'chmod 600 ./helper_scripts/id_rsa'
                sh 'terraform init'
            }
        }

        stage('Run terraform apply') {
            steps {
                // Pause until someone confirms in the Jenkins UI
                input 'Run terraform apply?'
            }
        }

        stage('terraform apply') {
            steps {
                sh 'terraform apply -auto-approve'
            }
        }

        stage('OpenShift Installation') {
            steps {
                // Wait 10 minutes for the new instances to come up
                sh 'sleep 600'
                // Copy the private key and the Ansible inventory to the bastion host.
                // Newer Terraform releases print string outputs in quotes;
                // there, use "terraform output -raw bastion" instead.
                sh 'scp -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -r ./helper_scripts/id_rsa centos@$(terraform output bastion):/home/centos/.ssh/'
                sh 'scp -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -r ./inventory/ansible-hosts centos@$(terraform output bastion):/home/centos/ansible-hosts'
                // Run the pre-install and install playbooks on the bastion host (-A forwards the SSH agent)
                sh 'ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-pre.yml -i ~/ansible-hosts"'
                sh 'ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-install.yml -i ~/ansible-hosts"'
            }
        }

        stage('Run terraform destroy') {
            steps {
                // Keep the cluster running until someone confirms the teardown
                input 'Run terraform destroy?'
            }
        }

        stage('terraform destroy') {
            steps {
                // -auto-approve replaces the older -force flag, which Terraform 0.15 removed
                sh 'terraform destroy -auto-approve'
            }
        }
    }
}
```
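
The fixed sleep 600 always waits ten minutes, even when the instances are ready sooner. Assuming the pause only exists so that the bastion host accepts SSH connections and /openshift-ansible/ is in place before the playbooks run, a polling loop could replace it. This is a hypothetical sketch, not part of the original repository: retry_until runs a command until it succeeds or the attempts run out.

```shell
#!/bin/sh
# Hypothetical helper (not in the original pipeline): run a command
# repeatedly until it succeeds or the attempts are used up.
# Usage: retry_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry_until() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Run the command with its original arguments; stop at the first success
    if "$@"; then
      return 0
    fi
    # Do not sleep after the final failed attempt
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "command failed after $attempts attempts: $*" >&2
  return 1
}

# Example (hypothetical): up to 60 attempts, 10 seconds apart, until the bastion
# accepts SSH and the directory used by the playbook steps exists.
# BASTION=$(terraform output bastion)
# retry_until 60 10 ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null \
#   -o ConnectTimeout=5 -i ./helper_scripts/id_rsa centos@"$BASTION" test -d /openshift-ansible
```

The function definition and the call have to live in the same sh step (for example a multi-line ''' script). A reachable bastion does not prove that everything else on the instances has finished booting, so keep some margin if other work runs there after startup.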

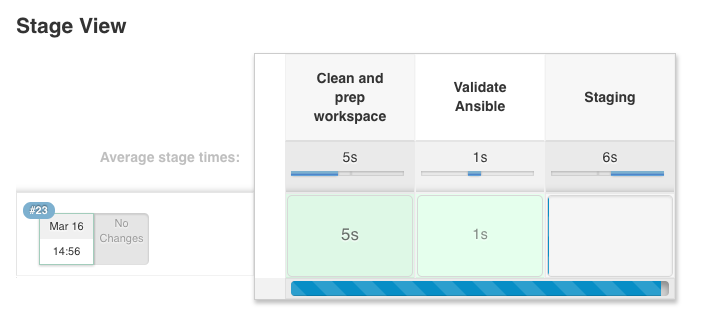

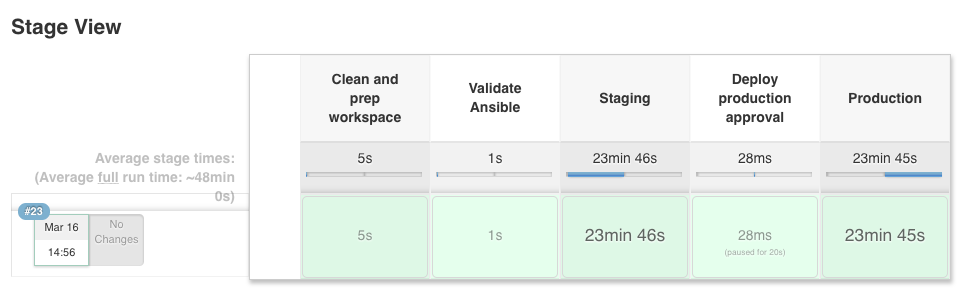

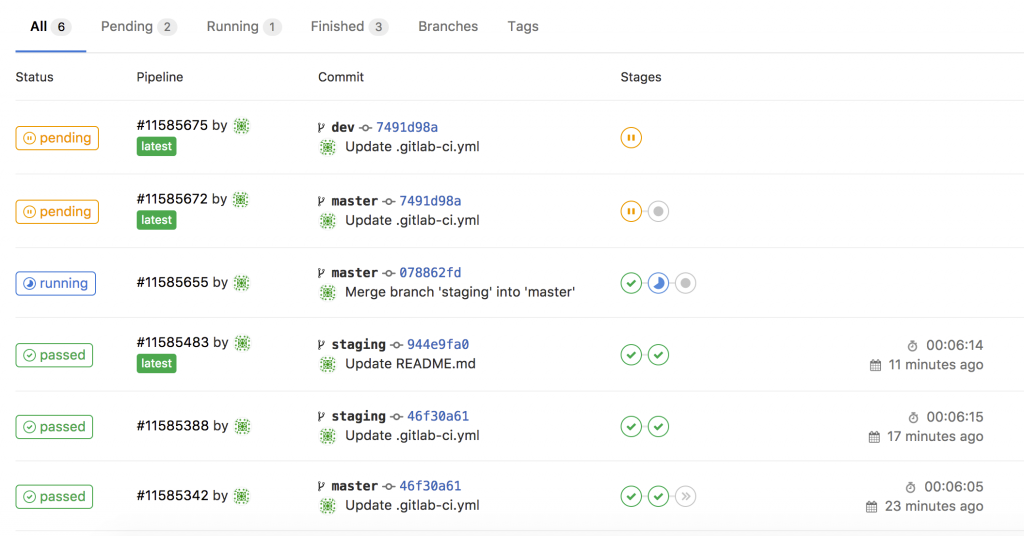

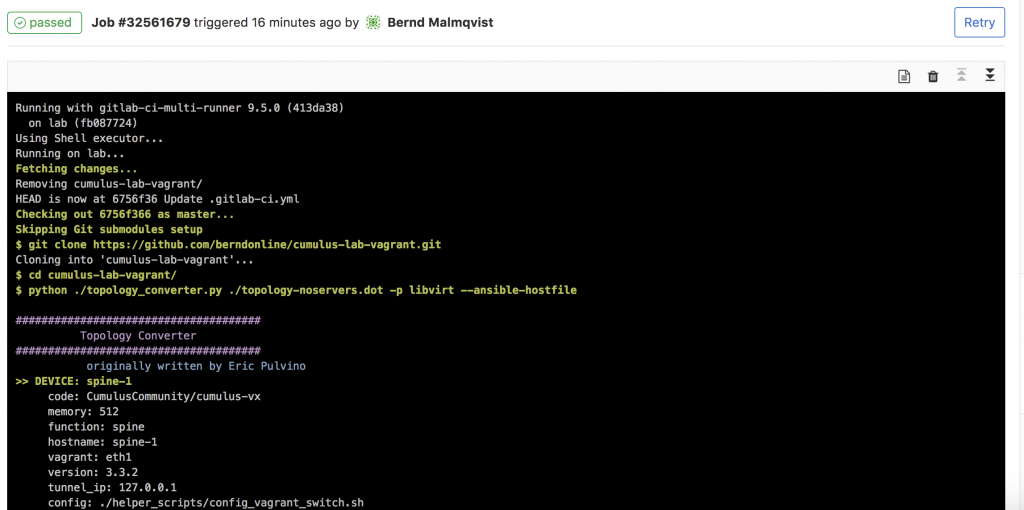

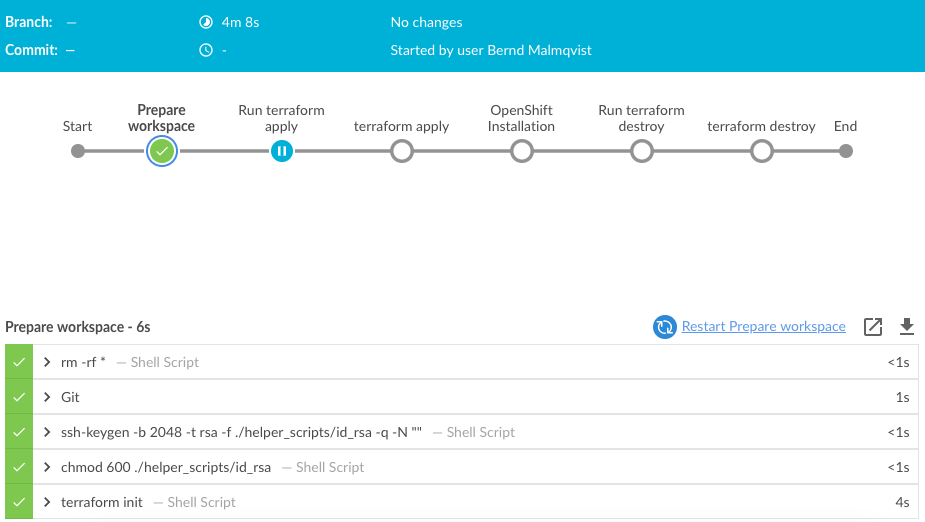

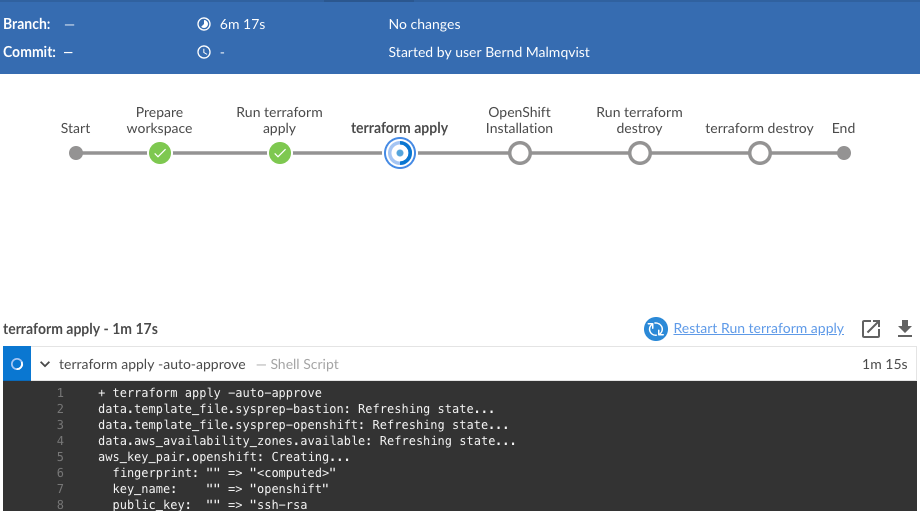

Let’s trigger the pipeline and look at the progress of the different steps.

The first stage, preparing the workspace, is very quick; the pipeline then waits for input before running terraform apply:

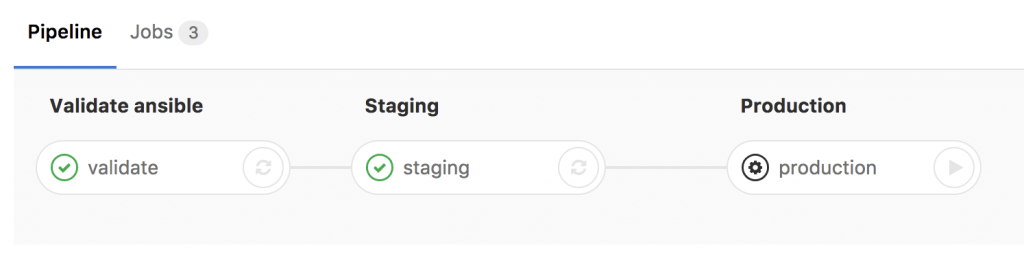

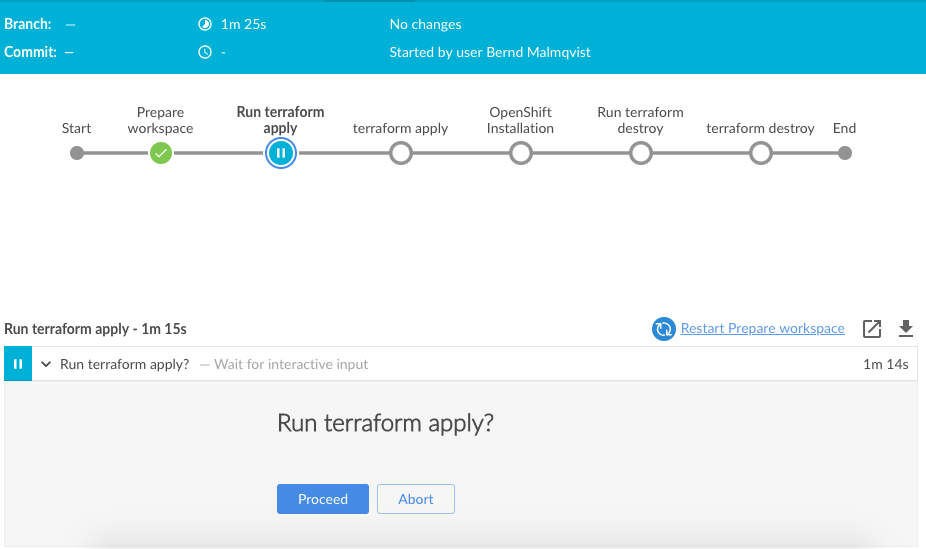

Just click Proceed to continue:

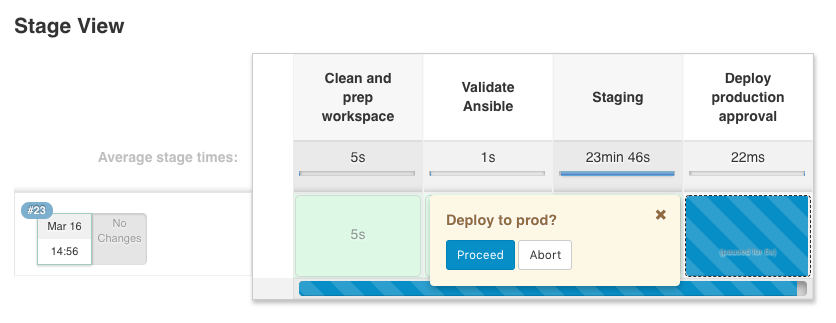

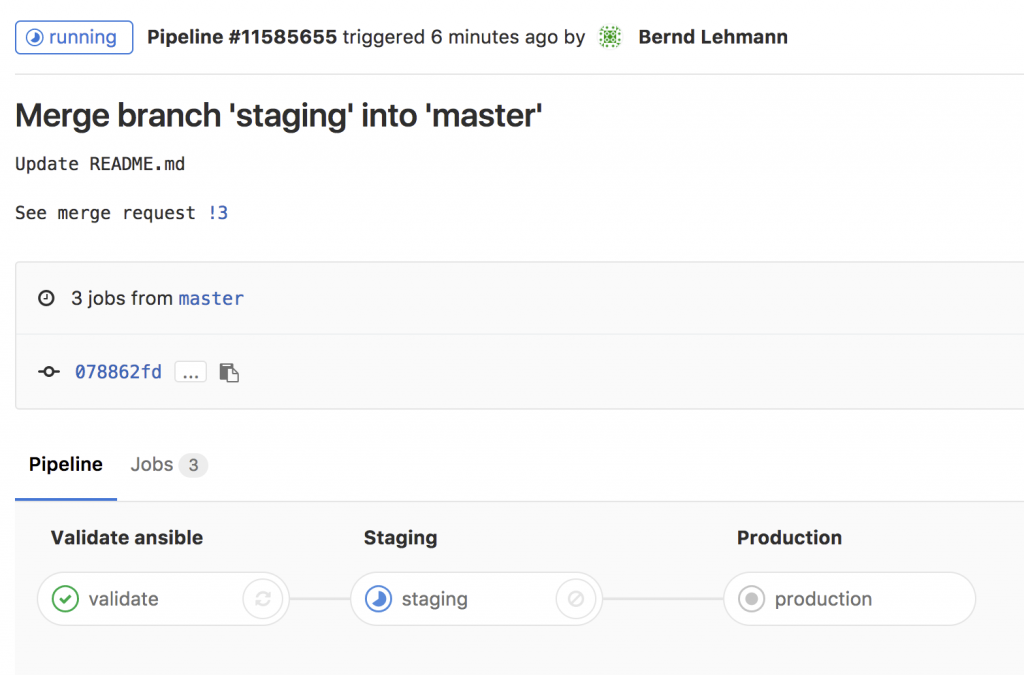

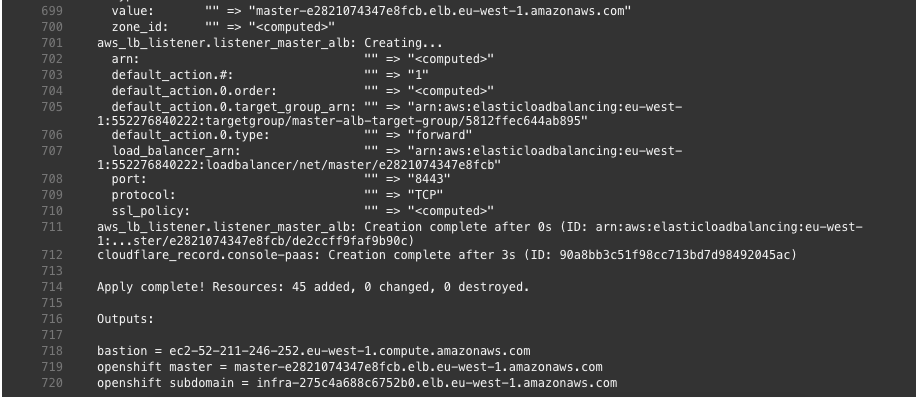

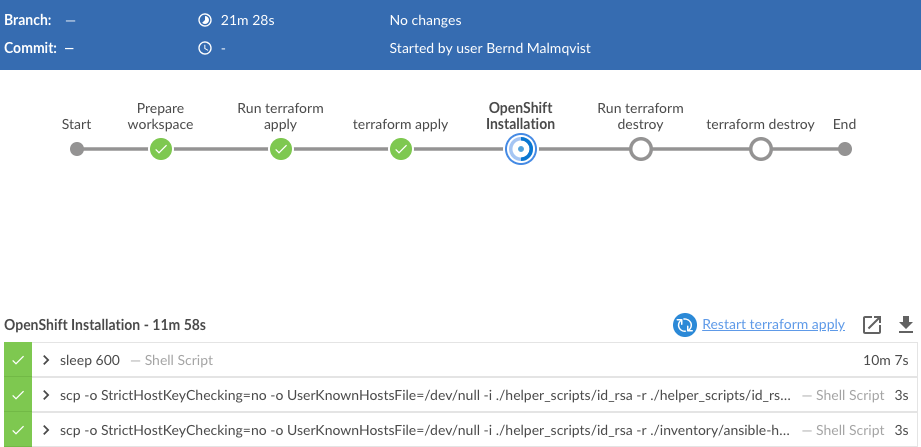

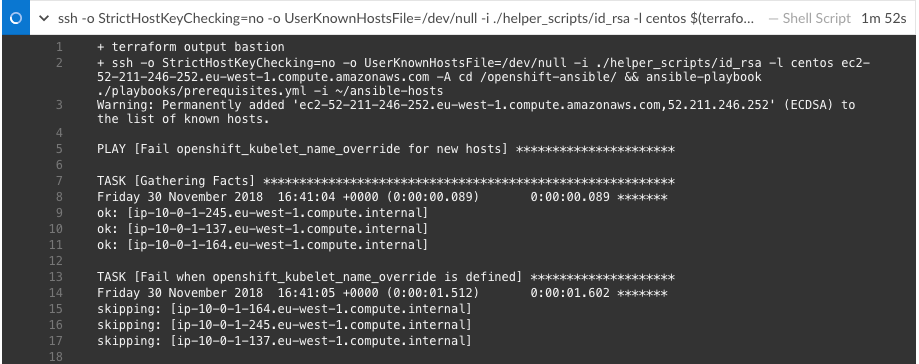

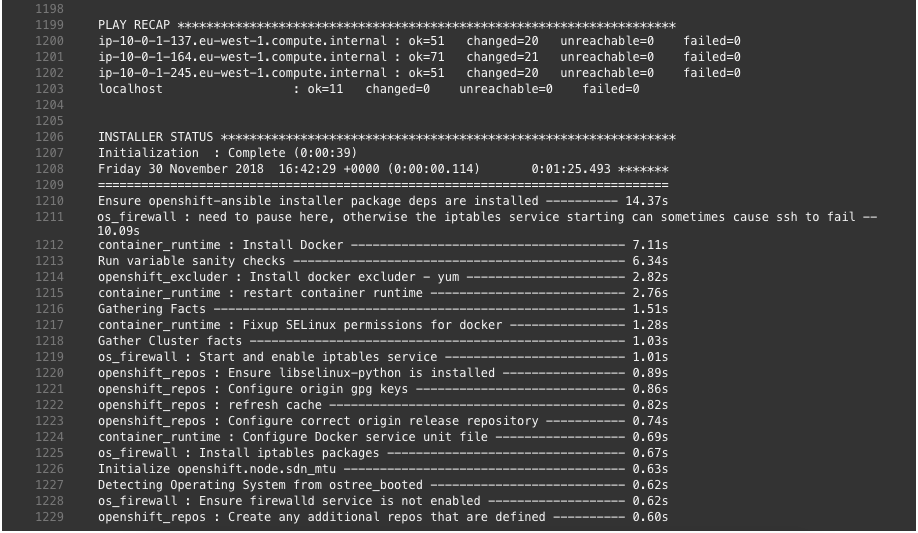

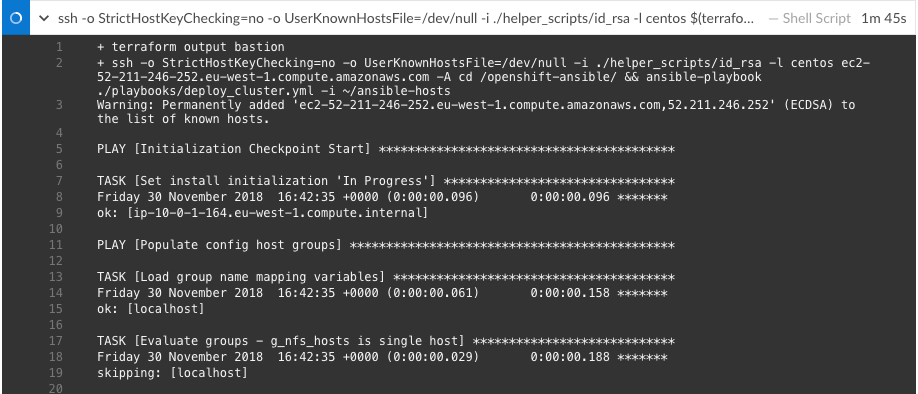

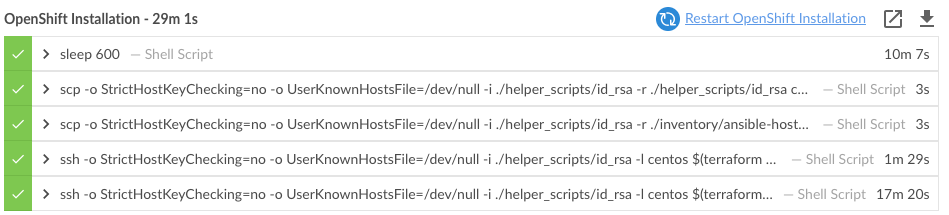

After Terraform has created the AWS and CloudFlare resources, the pipeline continues with the next stage and installs OpenShift 3.11 on the AWS instances:

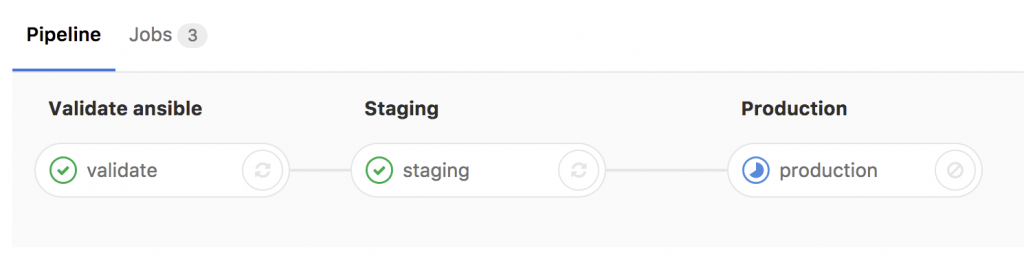

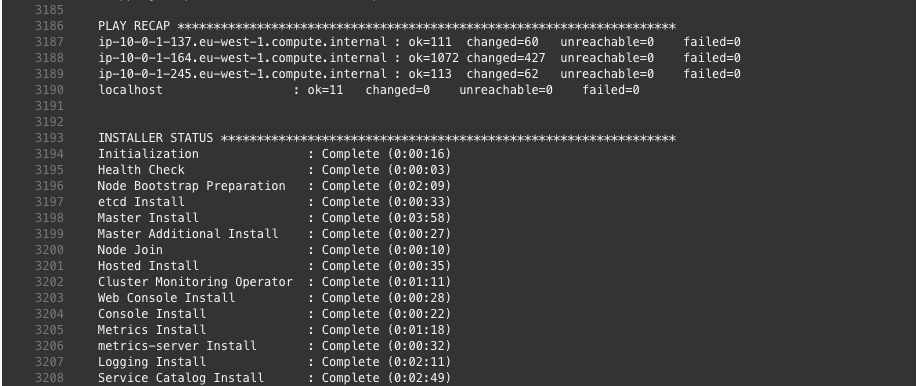

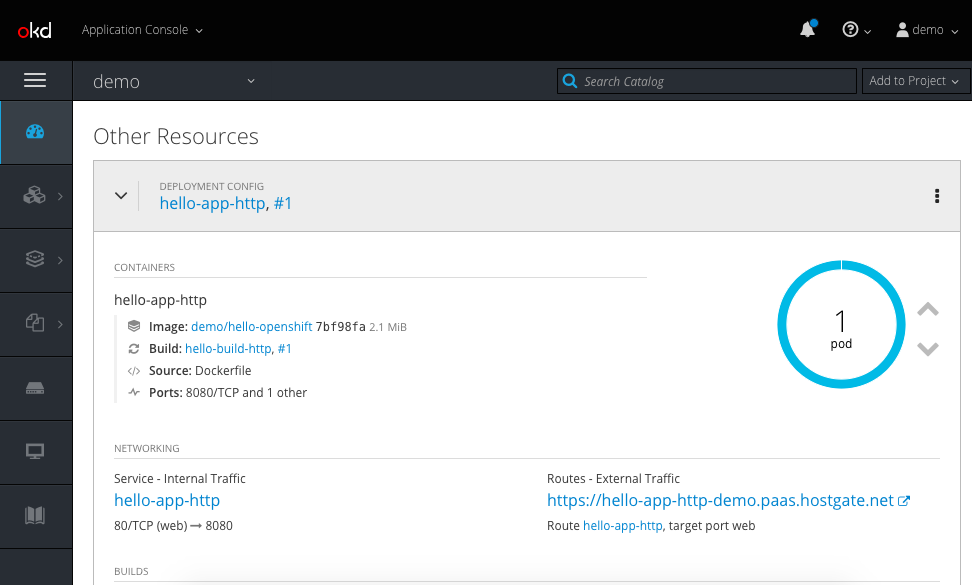

At this point the OpenShift installation is complete.

You can now log in to the console-paas.. and continue your testing on OpenShift.

Terraform not only created all the AWS resources, it also configured the necessary CNAME records on CloudFlare DNS that point to the AWS load balancers.

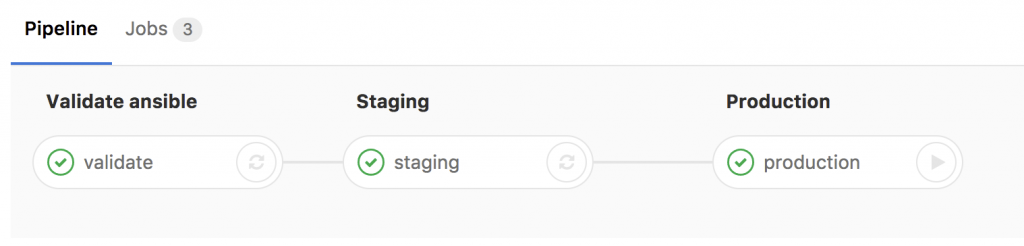

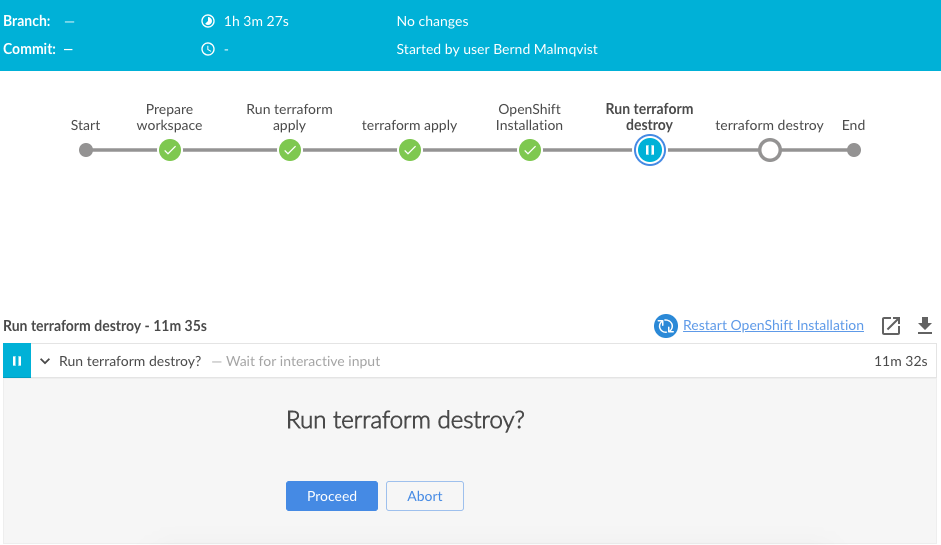

Once you have finished testing OpenShift, go back to the Jenkins pipeline and confirm that the environment should be destroyed:

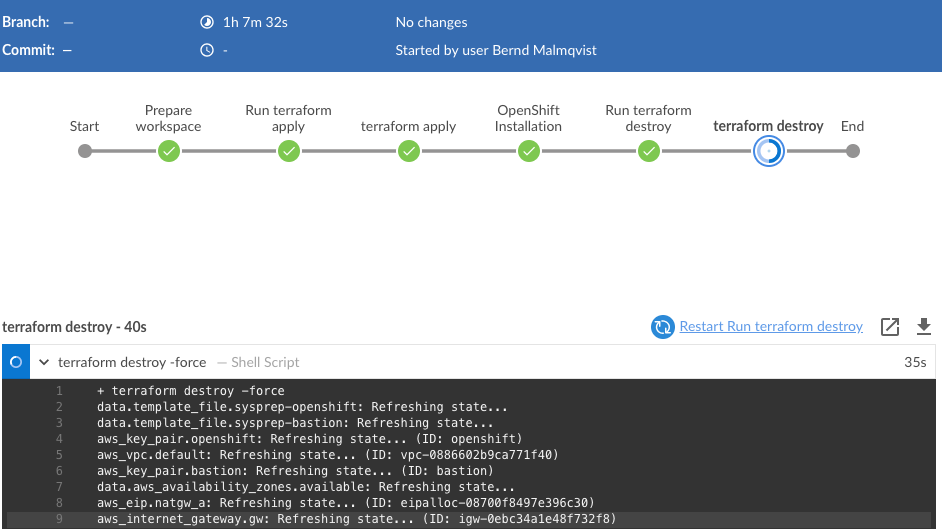

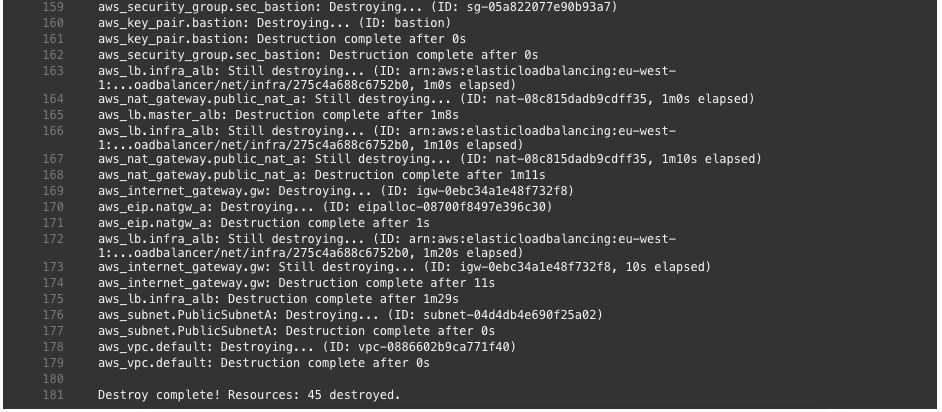

Running terraform destroy:

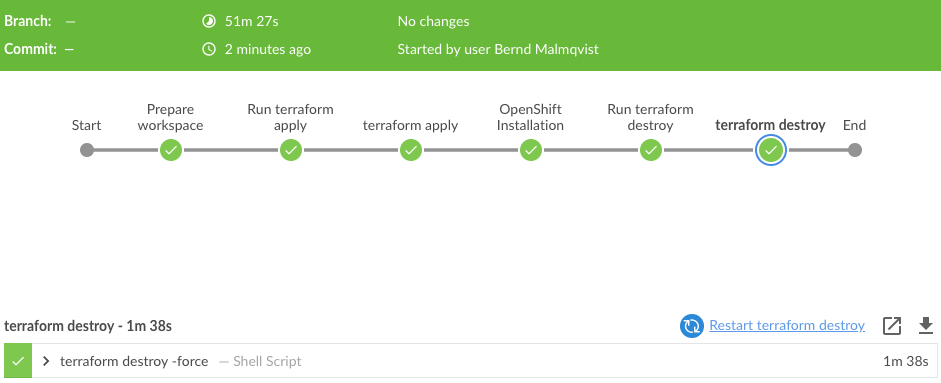

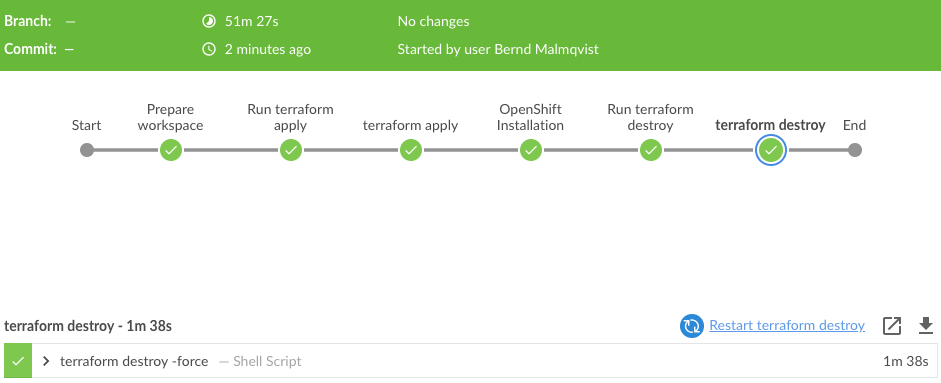

The pipeline completed successfully.

I hope this was an interesting post. Let me know if you liked it and want to see more like it. I am planning some improvements: a validation step in the pipeline that automatically creates a project, then builds and deploys a container on OpenShift.

Please share your feedback and leave a comment.