I found the AWS EKS introduction on the HashiCorp learning portal and thought I’d give it a try and test the Amazon Elastic Kubernetes Service. Cloud-native container services like EKS are becoming more popular: they make it easier to run a Kubernetes cluster and start deploying containers straight away, leaving the overhead of maintaining and patching the control plane to AWS.

Creating the EKS cluster is pretty easy: just run terraform apply. The only prerequisite is to have kubectl and the AWS IAM Authenticator installed. You can find the Terraform files in my repository: https://github.com/berndonline/aws-eks-terraform

    # Initialize and create the EKS cluster
    terraform init
    terraform apply

    # Generate kubeconfig and configmap for adding worker nodes
    terraform output kubeconfig > ./kubeconfig
    terraform output config_map_aws_auth > ./config_map_aws_auth.yaml

    # Apply configmap for worker nodes to join the cluster
    export KUBECONFIG=./kubeconfig
    kubectl apply -f ./config_map_aws_auth.yaml
    kubectl get nodes --watch

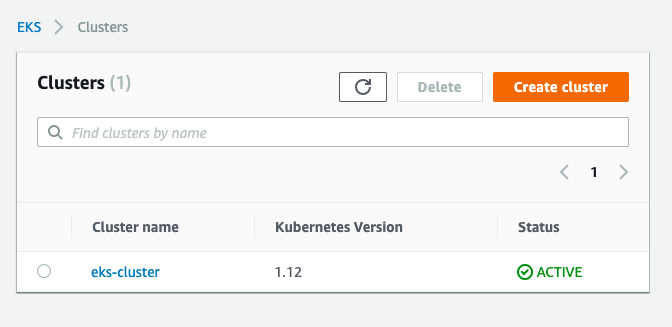
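
For context, the aws-auth ConfigMap is what lets the worker nodes register with the control plane: it maps the nodes' IAM role to the Kubernetes groups system:bootstrappers and system:nodes. Below is a minimal sketch of such a ConfigMap, assuming the standard aws-auth layout described in the EKS documentation. The function name render_aws_auth and the role ARN in the usage line are hypothetical, and the actual config_map_aws_auth output of the repository may differ in detail.

```shell
# Sketch only: print an aws-auth ConfigMap for a worker-node IAM role.
# render_aws_auth is a hypothetical helper, not part of the repository.
render_aws_auth() {
  local role_arn="$1"   # IAM role ARN attached to the worker nodes
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: ${role_arn}
      username: system:node:{{EC2PrivateDNSName}}
      groups:
        - system:bootstrappers
        - system:nodes
EOF
}
```

It could be used like this, with a placeholder ARN: `render_aws_auth "arn:aws:iam::123456789012:role/example-node-role" | kubectl apply -f -`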

Let’s have a look at the AWS EKS console:

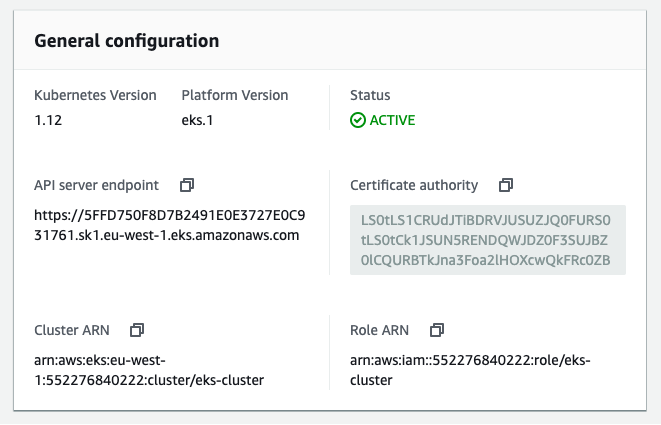

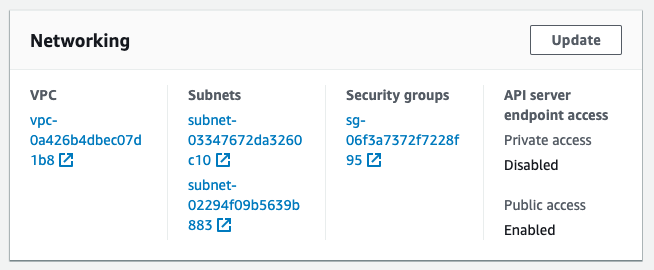

In the cluster details you see general information:

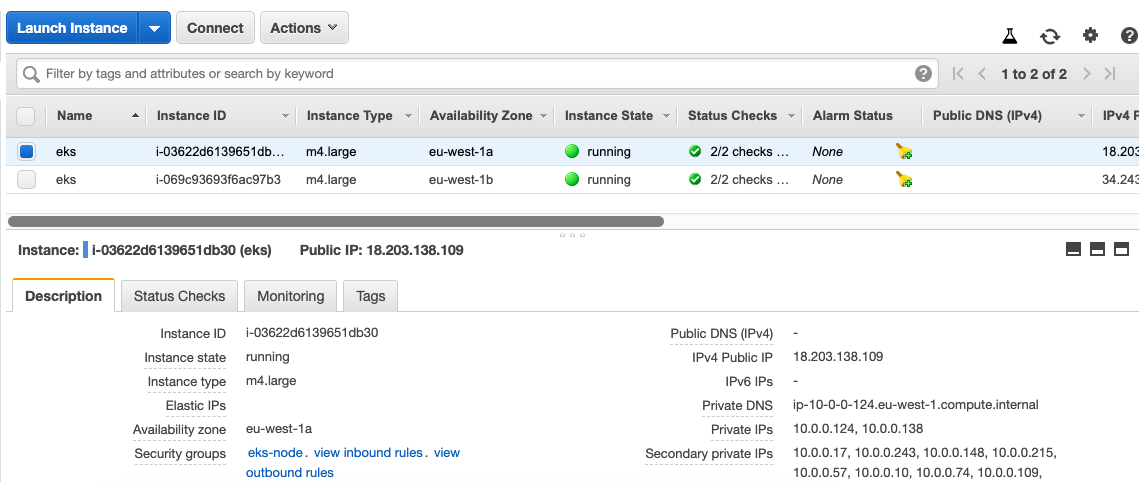

On the EC2 side you see two worker nodes as defined:

Now we can deploy an example application:

    $ kubectl create -f example/hello-kubernetes.yml
    service/hello-kubernetes created
    deployment.apps/hello-kubernetes created
    ingress.extensions/hello-ingress created

Checking that the pods are running and the correct resources are created:

    $ kubectl get all
    NAME                                   READY   STATUS    RESTARTS   AGE
    pod/hello-kubernetes-b75555c67-4fhfn   1/1     Running   0          1m
    pod/hello-kubernetes-b75555c67-pzmlw   1/1     Running   0          1m

    NAME                       TYPE           CLUSTER-IP       EXTERNAL-IP                                                              PORT(S)        AGE
    service/hello-kubernetes   LoadBalancer   172.20.108.223   ac1dc1ab84e5c11e9ab7e0211179d9b9-394134490.eu-west-1.elb.amazonaws.com   80:32043/TCP   1m
    service/kubernetes         ClusterIP      172.20.0.1       <none>                                                                   443/TCP        26m

    NAME                               DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/hello-kubernetes   2         2         2            2           1m

    NAME                                         DESIRED   CURRENT   READY   AGE
    replicaset.apps/hello-kubernetes-b75555c67   2         2         2       1m

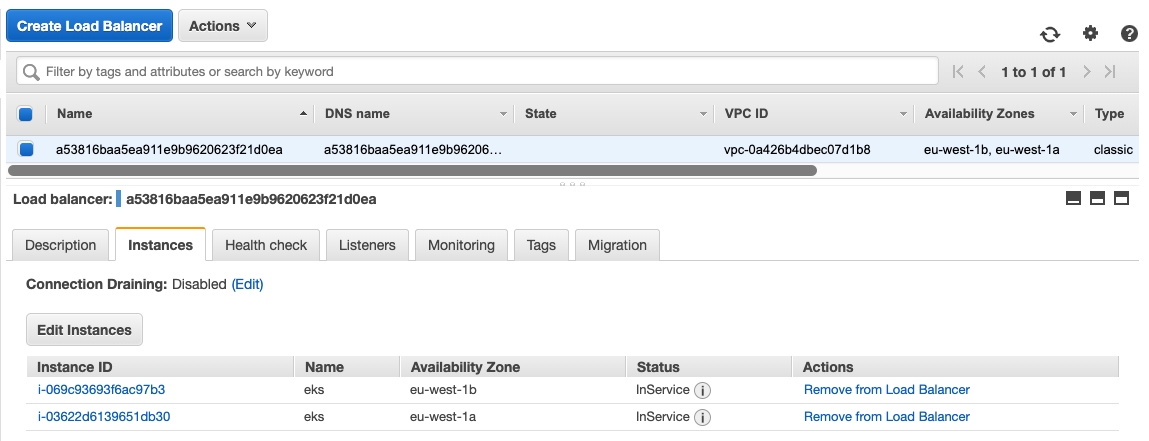

Because the hello-kubernetes service is of type LoadBalancer, the EKS cluster automatically creates an ELB load balancer that forwards traffic to the two worker nodes:

Example application:

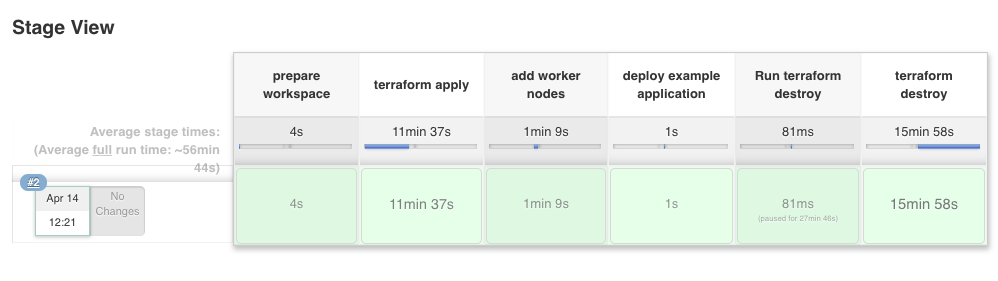
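
To open the example application without looking up the load balancer in the console, the ELB hostname can be read from the service status. This is a small sketch using kubectl's JSONPath output; the helper name elb_hostname is hypothetical, and it assumes KUBECONFIG already points at the generated kubeconfig.

```shell
# Sketch only: print the ELB hostname assigned to a LoadBalancer service.
# elb_hostname is a hypothetical helper; it relies on KUBECONFIG being set.
elb_hostname() {
  local service="$1"   # service name, e.g. hello-kubernetes
  kubectl get service "$service" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}
```

For example: `curl -s "http://$(elb_hostname hello-kubernetes)/"`. A freshly created ELB can take a few minutes before its DNS name resolves, so the first attempts may fail.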

I have used a very simple Jenkins pipeline to create the AWS EKS cluster:

    pipeline {
        agent any
        environment {
            AWS_ACCESS_KEY_ID = credentials('AWS_ACCESS_KEY_ID')
            AWS_SECRET_ACCESS_KEY = credentials('AWS_SECRET_ACCESS_KEY')
        }
        stages {
            stage('prepare workspace') {
                steps {
                    sh 'rm -rf *'
                    git branch: 'master', url: 'https://github.com/berndonline/aws-eks-terraform.git'
                    sh 'terraform init'
                }
            }
            stage('terraform apply') {
                steps {
                    sh 'terraform apply -auto-approve'
                    sh 'terraform output kubeconfig > ./kubeconfig'
                    sh 'terraform output config_map_aws_auth > ./config_map_aws_auth.yaml'
                    // Each sh step runs in its own shell, so an exported KUBECONFIG
                    // would not persist; later steps pass --kubeconfig explicitly.
                }
            }
            stage('add worker nodes') {
                steps {
                    sh 'kubectl apply -f ./config_map_aws_auth.yaml --kubeconfig=./kubeconfig'
                    sh 'sleep 60'
                }
            }
            stage('deploy example application') {
                steps {
                    sh 'kubectl apply -f ./example/hello-kubernetes.yml --kubeconfig=./kubeconfig'
                    sh 'kubectl get all --kubeconfig=./kubeconfig'
                }
            }
            stage('Run terraform destroy') {
                steps {
                    input 'Run terraform destroy?'
                }
            }
            stage('terraform destroy') {
                steps {
                    // Delete the service first so the ELB is removed before the VPC
                    sh 'kubectl delete -f ./example/hello-kubernetes.yml --kubeconfig=./kubeconfig'
                    sh 'sleep 60'
                    // -auto-approve replaces the deprecated -force flag
                    sh 'terraform destroy -auto-approve'
                }
            }
        }
    }
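
The fixed sleep 60 after applying the ConfigMap is a guess at how long the worker nodes need to join. A polling loop is one possible alternative; the sketch below counts nodes reported as Ready by kubectl get nodes and retries until the expected number is reached. The function names count_ready_nodes and wait_for_nodes, and the default of 30 attempts at 10-second intervals, are assumptions for illustration and not part of the repository.

```shell
# Sketch only: hypothetical helpers that poll until worker nodes are Ready.
# Both rely on KUBECONFIG pointing at the generated kubeconfig.

# Count nodes whose STATUS column is exactly "Ready".
count_ready_nodes() {
  kubectl get nodes --no-headers 2>/dev/null |
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# wait_for_nodes EXPECTED [ATTEMPTS] [INTERVAL_SECONDS]
# Returns 0 once at least EXPECTED nodes are Ready, 1 after ATTEMPTS tries.
wait_for_nodes() {
  local expected="$1"
  local attempts="${2:-30}"
  local interval="${3:-10}"
  local i=0
  local ready
  while [ "$i" -lt "$attempts" ]; do
    ready="$(count_ready_nodes)"
    if [ "$ready" -ge "$expected" ]; then
      echo "${ready} node(s) Ready"
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "timed out waiting for ${expected} Ready node(s)" >&2
  return 1
}
```

Saved in a script and called as wait_for_nodes 2 with KUBECONFIG=./kubeconfig, this could stand in for the sleep in the 'add worker nodes' stage, and it fails the build instead of continuing when the nodes never join.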

I really like how quick and easy this is: creating an AWS EKS cluster takes less than 15 minutes.

Thanks. I have tried various Git repositories to get a complete EKS cluster with worker nodes and a working application. It took days and I did not succeed. I got your example up and working in a few hours.