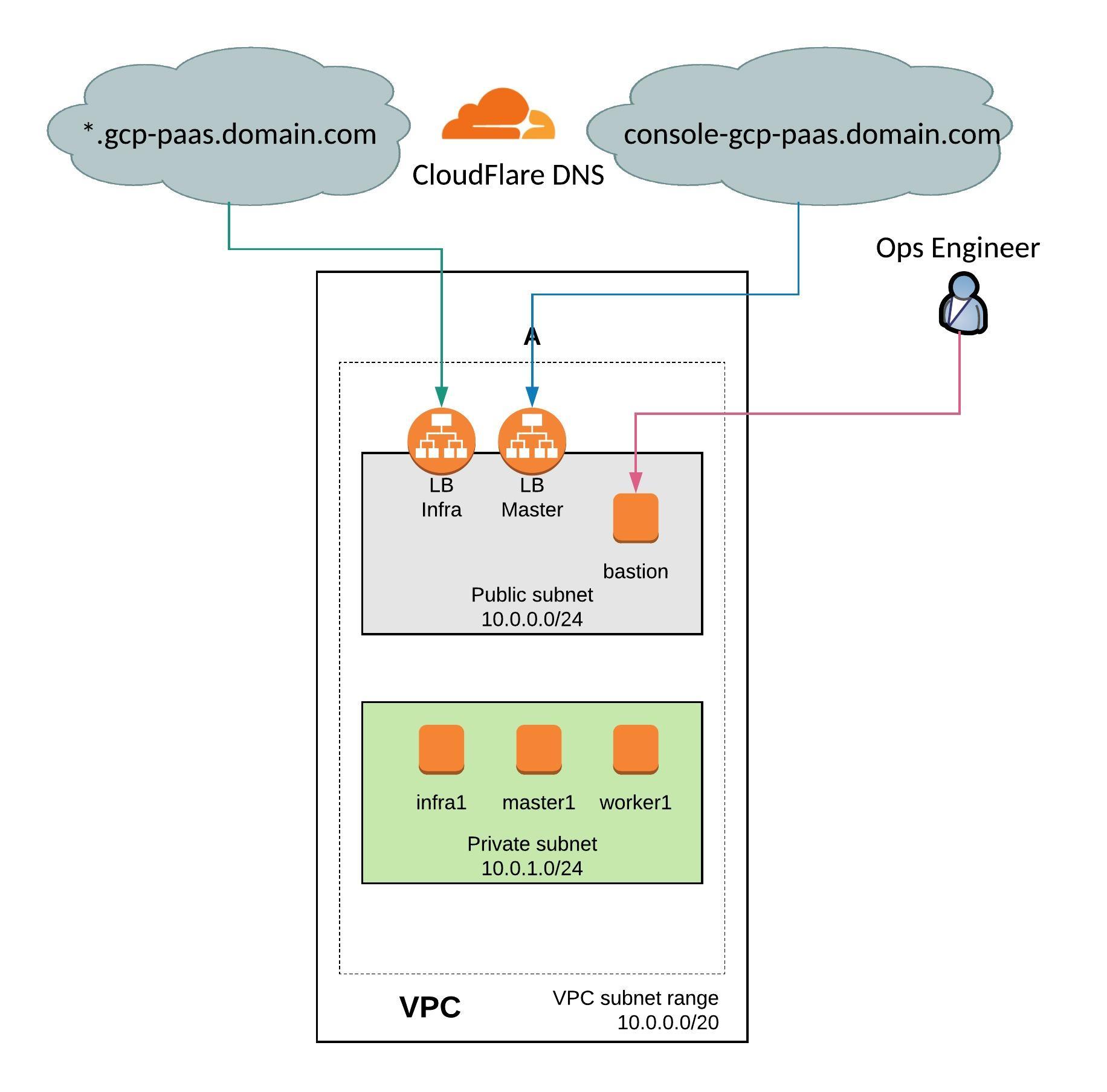

Over the past few days I have converted my OpenShift 3.11 infrastructure from Amazon AWS to run on Google Cloud Platform. I kept a similar VPC network layout and the same set of instances to run OpenShift.

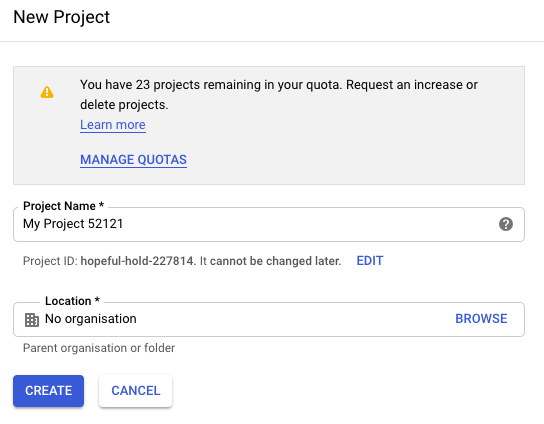

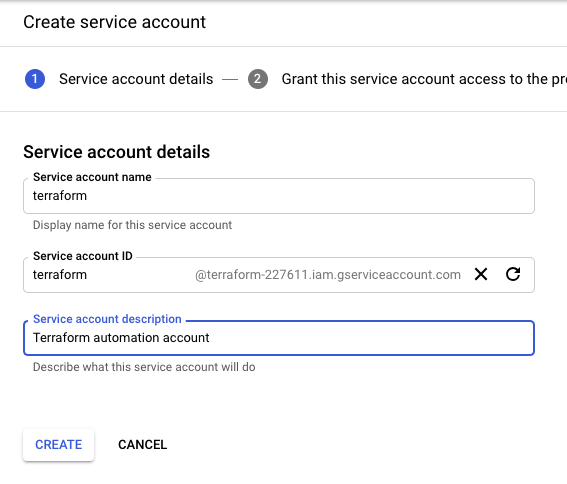

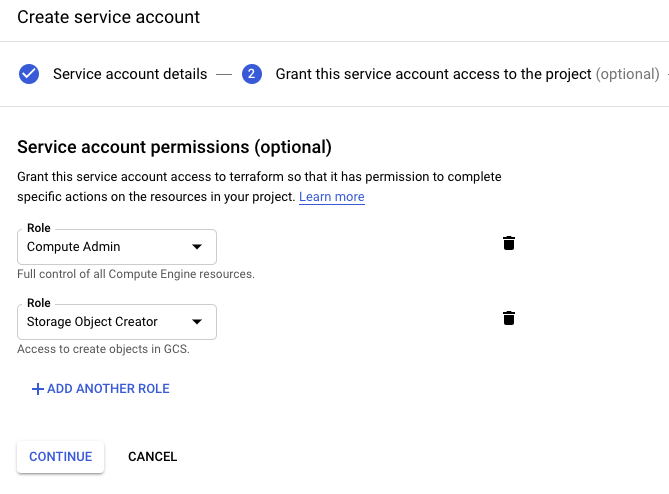

Before you start, create a project on Google Cloud Platform, then create a service account, generate a private key for it, and download the credentials as a JSON file.

Create the new project:

Create the service account:

Give the service account compute admin and storage object creator permissions:

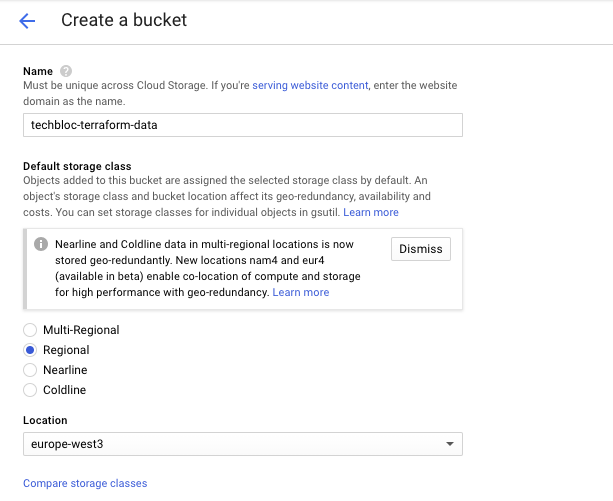

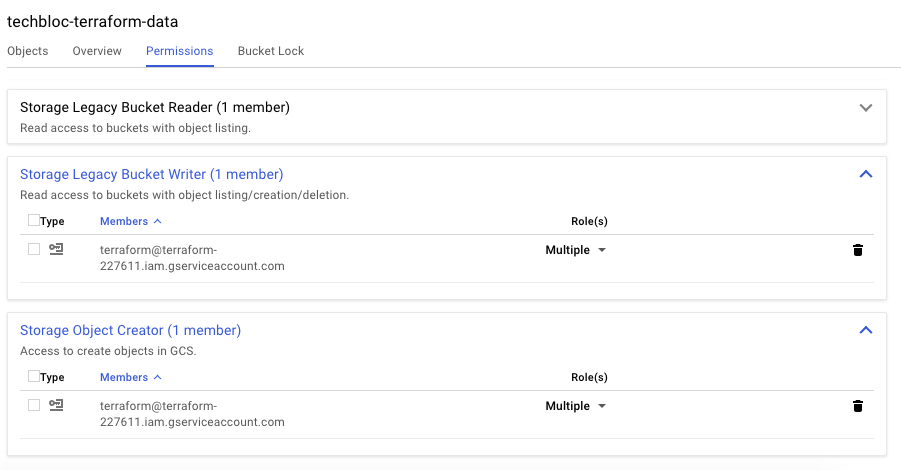
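The console steps above can also be scripted with the gcloud CLI. The following is a minimal sketch rather than the commands used for this post: the function name `create_terraform_sa` and its arguments are hypothetical, and it assumes gcloud is installed and authenticated as a user who is allowed to create projects and service accounts.

```shell
# Sketch: create a project and a Terraform service account, grant it the
# Compute Admin and Storage Object Creator roles, and download a JSON key.
# All arguments are placeholders you choose yourself:
#   $1 = project ID, $2 = service account name, $3 = key file (optional)
create_terraform_sa() {
  local project="$1" sa_name="$2" key_file="${3:-credentials.json}"
  local sa_email="${sa_name}@${project}.iam.gserviceaccount.com"
  local role

  gcloud projects create "$project" || return 1
  gcloud iam service-accounts create "$sa_name" --project="$project" || return 1

  # Bind the two project-level roles mentioned in the text.
  for role in roles/compute.admin roles/storage.objectCreator; do
    gcloud projects add-iam-policy-binding "$project" \
      --member="serviceAccount:${sa_email}" \
      --role="$role" || return 1
  done

  # Generate the private key and write it to the given JSON file.
  gcloud iam service-accounts keys create "$key_file" \
    --iam-account="$sa_email" || return 1
}
```

Calling `create_terraform_sa <your-project-id> terraform ./credentials.json` would then leave the key file where the later steps expect it.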

Then create a storage bucket for the Terraform backend state and give the Terraform service account the permissions it needs on that bucket:

Bucket permissions:
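As a command-line alternative, the bucket can be created and shared with gsutil. This is a sketch under assumptions: the function name `create_state_bucket` is hypothetical, and the `objectAdmin` role is my choice for letting Terraform read, write, and delete state objects; the post itself does not say which bucket permission was assigned.

```shell
# Sketch: create the state bucket and let the service account manage
# the objects stored in it.
#   $1 = bucket name, $2 = service account email, $3 = location (optional)
create_state_bucket() {
  local bucket="$1" sa_email="$2" location="${3:-europe-west3}"

  # Make the bucket in the chosen location.
  gsutil mb -l "$location" "gs://${bucket}" || return 1

  # Grant the service account object-level access on this bucket only.
  # objectAdmin is an assumption, not a value taken from the post.
  gsutil iam ch "serviceAccount:${sa_email}:objectAdmin" "gs://${bucket}" || return 1
}
```

The bucket name passed here is the one that goes into the `backend "gcs"` block in main.tf.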

To start, clone my openshift-terraform GitHub repository and check out the google-dev branch:

git clone https://github.com/berndonline/openshift-terraform.git

cd ./openshift-terraform/ && git checkout google-dev

Add the credentials JSON file you downloaded earlier:

cat << EOF > ./credentials.json

{

"type": "service_account",

"project_id": "<--your-project-->",

"private_key_id": "<--your-key-id-->",

"private_key": "-----BEGIN PRIVATE KEY-----

...

}

EOF

There are a few things you need to modify in the main.tf and variables.tf before you can start:

...

terraform {

backend "gcs" {

bucket = "<--your-bucket-name-->"

prefix = "openshift-311"

credentials = "credentials.json"

}

}

...

...

variable "gcp_region" {

description = "Google Compute Platform region to launch servers."

default = "europe-west3"

}

variable "gcp_project" {

description = "Google Compute Platform project name."

default = "<--your-project-name-->"

}

variable "gcp_zone" {

type = "string"

default = "europe-west3-a"

description = "The zone to provision into"

}

...

Export the environment variables Terraform needs for the Cloudflare DNS changes and the OpenShift demo user:

export TF_VAR_email='<-YOUR-CLOUDFLARE-EMAIL-ADDRESS->'

export TF_VAR_token='<-YOUR-CLOUDFLARE-TOKEN->'

export TF_VAR_domain='<-YOUR-CLOUDFLARE-DOMAIN->'

export TF_VAR_htpasswd='<-YOUR-OPENSHIFT-DEMO-USER-HTPASSWD->'
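A forgotten variable only shows up once Terraform prompts for it or fails, so it can help to check the environment first. The helper below is a small sketch; the function name `check_tf_vars` is hypothetical and not part of the repository.

```shell
# Sketch: fail early if any of the four TF_VAR_* variables is missing
# or empty in the exported environment.
check_tf_vars() {
  local name missing=0
  for name in TF_VAR_email TF_VAR_token TF_VAR_domain TF_VAR_htpasswd; do
    # printenv only sees exported variables, which is what terraform reads.
    if [ -z "$(printenv "$name")" ]; then
      echo "missing or empty: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

It can be chained in front of the Terraform run, for example `check_tf_vars && terraform init`.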

Now create the infrastructure, then verify the created resources on GCP.

terraform init && terraform apply -auto-approve

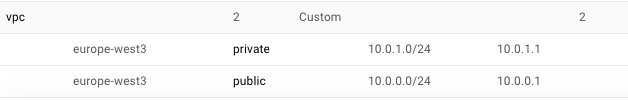

VPC and public and private subnets in region europe-west3:

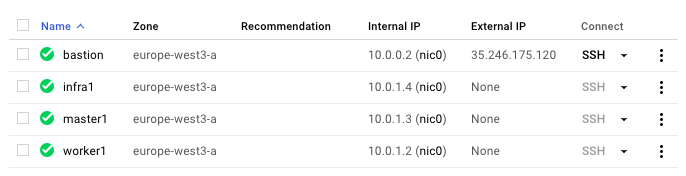

Created instances:

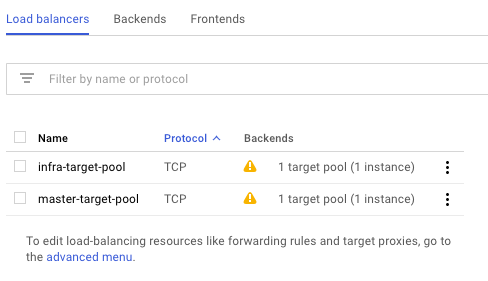

Created load balancers for master and infra nodes:

Copy the SSH key and the ansible-hosts file to the bastion host, from which you run the OpenShift Ansible playbooks.

scp -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -r ./helper_scripts/id_rsa centos@$(terraform output bastion):/home/centos/.ssh/

scp -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -r ./inventory/ansible-hosts centos@$(terraform output bastion):/home/centos/ansible-hosts

I recommend waiting a few minutes while the cloud-init script prepares the bastion host. Afterwards, run the pre and install playbooks, either over SSH as shown here or directly on the bastion host.

ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-pre.yml -i ~/ansible-hosts"

ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-install.yml -i ~/ansible-hosts"
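Instead of waiting a fixed number of minutes for cloud-init, the readiness check can be polled. The `retry` helper below is a generic sketch and not part of the repository. The suggested check in the comment assumes that the bastion is ready once the `/openshift-ansible` directory exists, which I infer from the playbook commands and have not verified against the cloud-init script.

```shell
# Sketch: run a command until it succeeds, up to a maximum number of attempts.
#   $1 = max attempts, $2 = seconds to sleep between attempts, rest = command
retry() {
  local attempts="$1" delay="$2" n=1
  shift 2
  until "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      echo "giving up after $n attempts: $*" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Possible use before the first playbook run (readiness test is an assumption):
#   retry 20 15 ssh -i ./helper_scripts/id_rsa -l centos \
#     "$(terraform output bastion)" test -d /openshift-ansible
```

The attempt count and delay are arbitrary values; adjust them to how long the bastion usually takes to come up.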

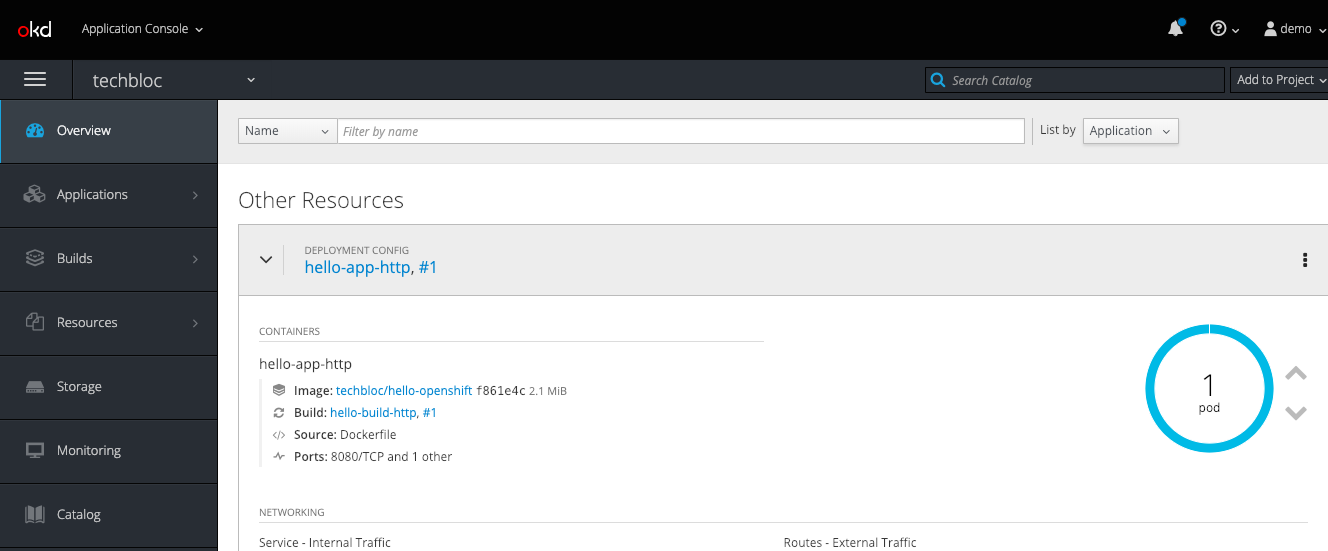

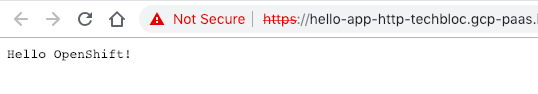

After the installation is completed, continue to create your project and applications:

When you are finished with the testing, run terraform destroy.

terraform destroy -force

Please share your feedback and leave a comment.

I am getting GCP permission errors when running “terraform init && terraform apply -auto-approve”:

Error: Error applying plan:

5 errors occurred:

* google_compute_address.master: 1 error occurred:

* google_compute_address.master: Error creating Address: googleapi: Error 403: Required ‘compute.addresses.create’ permission for ‘projects/opneshift-paas/regions/us-east1/addresses/master-address’, forbidden

* google_compute_http_health_check.infra: 1 error occurred:

* google_compute_http_health_check.infra: Error creating HttpHealthCheck: googleapi: Error 403: Required ‘compute.httpHealthChecks.create’ permission for ‘projects/opneshift-paas/global/httpHealthChecks/infra-basic-check’, forbidden

* google_compute_http_health_check.master: 1 error occurred:

* google_compute_http_health_check.master: Error creating HttpHealthCheck: googleapi: Error 403: Required ‘compute.httpHealthChecks.create’ permission for ‘projects/opneshift-paas/global/httpHealthChecks/master-basic-check’, forbidden

* google_compute_address.infra: 1 error occurred:

* google_compute_address.infra: Error creating Address: googleapi: Error 403: Required ‘compute.addresses.create’ permission for ‘projects/opneshift-paas/regions/us-east1/addresses/infra-address’, forbidden

* google_compute_network.vpc: 1 error occurred:

* google_compute_network.vpc: Error creating Network: googleapi: Error 403: Required ‘compute.networks.create’ permission for ‘projects/opneshift-paas/global/networks/vpc’, forbidden

It seems your Google account does not have sufficient permissions to create the infrastructure. Check whether the Compute Admin and Storage Object Creator roles are assigned to it.
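One way to check this from the command line is to list the roles bound to the service account and compare them with the two required ones. This is a sketch: the function names are hypothetical, and it assumes gcloud is authenticated as a user who may read the project's IAM policy.

```shell
# Sketch: print the project-level roles bound to a service account.
#   $1 = project ID, $2 = service account email
list_sa_roles() {
  local project="$1" sa_email="$2"
  gcloud projects get-iam-policy "$project" \
    --flatten="bindings[].members" \
    --filter="bindings.members:serviceAccount:${sa_email}" \
    --format="value(bindings.role)"
}

# Sketch: report the first required role that is not bound.
check_required_roles() {
  local roles required
  roles="$(list_sa_roles "$1" "$2")" || return 1
  for required in roles/compute.admin roles/storage.objectCreator; do
    if ! printf '%s\n' "$roles" | grep -qxF "$required"; then
      echo "missing role: $required" >&2
      return 1
    fi
  done
}
```

This only inspects bindings made directly on the project; roles inherited from a folder or organization would not appear in the output.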

It appears you need to add the following to the ‘helper_scripts/ansible-hosts.template.txt’ for GCE:

[OSEv3:vars]

…

openshift_cloudprovider_kind=gce

This fixes the empty bastion Terraform output and enables the storage provisioners as well as a number of other GCE-specific components.