This post is about continuous integration and continuous delivery (CI/CD) for Cisco devices and how to use network simulation to test automation before deploying it to production environments. That was one of the main reasons for me to use Vagrant for simulating the network: the virtual environment can be created on demand and thrown away after the scripts have run successfully. Please read my earlier posts about Cisco network simulation using Vagrant first: Cisco IOSv and XE network simulation using Vagrant and Cisco ASAv network simulation using Vagrant.

As in my first post about Continuous Integration and Delivery for Networking with Cumulus Linux, I am using Gitlab.com and their Gitlab-runner for the CI/CD pipeline.

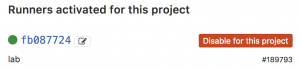

- You need to register your Gitlab-runner with the Gitlab repository.

- The next step is to create your .gitlab-ci.yml, which defines your CI pipeline:

      ---
      stages:
        - validate ansible
        - staging iosv
        - staging iosxe

      validate:
        stage: validate ansible
        script:
          - bash ./linter.sh

      staging_iosv:
        before_script:
          - git clone https://github.com/berndonline/cisco-lab-vagrant.git
          - cd cisco-lab-vagrant/
          - cp Vagrantfile-IOSv Vagrantfile
        stage: staging iosv
        script:
          - bash ../staging.sh

      staging_iosxe:
        before_script:
          - git clone https://github.com/berndonline/cisco-lab-vagrant.git
          - cd cisco-lab-vagrant/
          - cp Vagrantfile-IOSXE Vagrantfile
        stage: staging iosxe
        script:
          - bash ../staging.sh

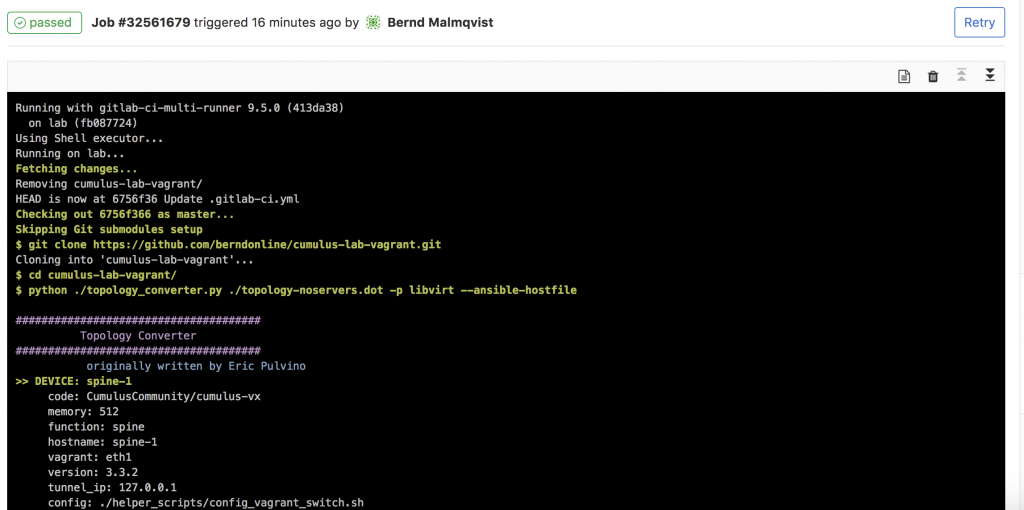

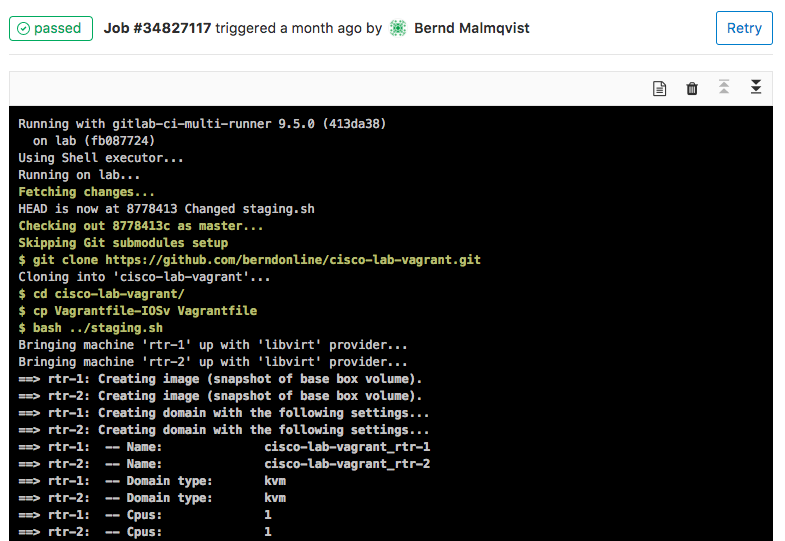

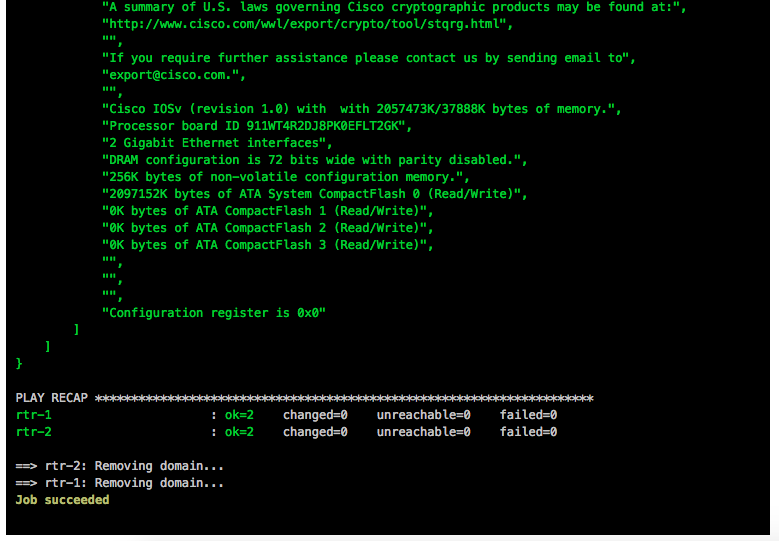

I clone the Cisco Vagrant lab repository, use it to spin up a virtual staging environment, and run the Ansible playbook against that virtual lab. The IOSv and IOSXE stages are just examples; which stages you need depends on the Cisco IOS versions you want to test.
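To make the staging step more concrete, here is a minimal sketch of what a script like staging.sh could do. The real script is not shown in this post, so treat everything here as an assumption: the function names `run` and `staging`, the `DRY_RUN` switch, and the placeholder playbook and inventory names `site.yml` and `hosts` are all hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical sketch; the real staging.sh is not shown in this post.

# Run a command, or only print it when DRY_RUN=1 (useful for trying the script
# on a machine without Vagrant or Ansible installed).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Boot the virtual lab, run the playbook against it, and always destroy the lab
# afterwards. Playbook and inventory names are placeholders, not the author's.
staging() {
  local playbook="${1:-site.yml}"
  local inventory="${2:-hosts}"
  local rc=0

  run vagrant up || rc=$?
  if [ "$rc" -eq 0 ]; then
    run ansible-playbook -i "$inventory" "$playbook" || rc=$?
  fi
  # Tear the lab down even when an earlier step failed.
  run vagrant destroy -f || true
  return "$rc"
}
```

Ending the script with `staging "$@"` would run the steps. Because the job changes into cisco-lab-vagrant/ before calling the script, `vagrant up` would pick up the Vagrantfile copied in `before_script`, and a failing playbook would make the job fail while the lab still gets destroyed.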

The production stage would be similar to staging, except that you run the Ansible playbook against your physical environment.

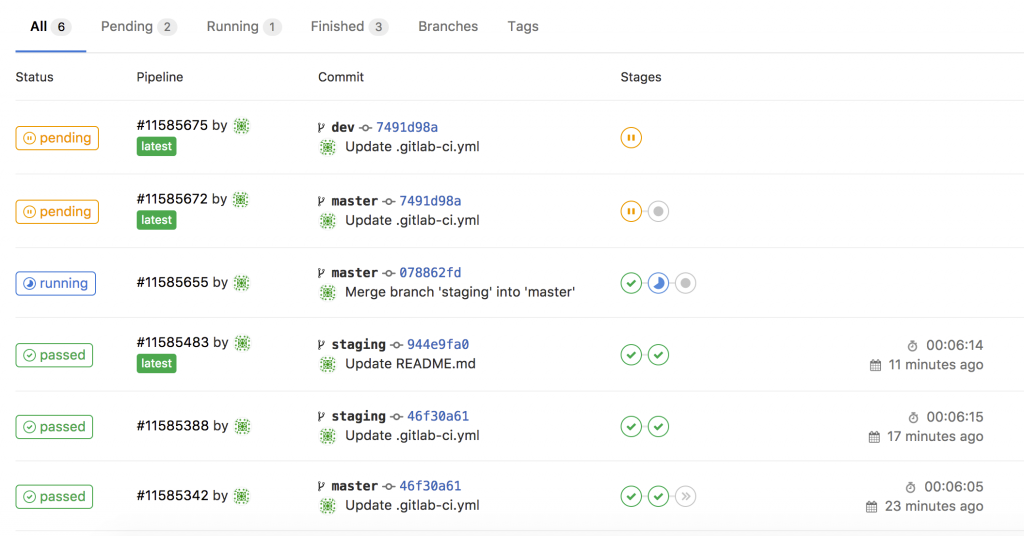

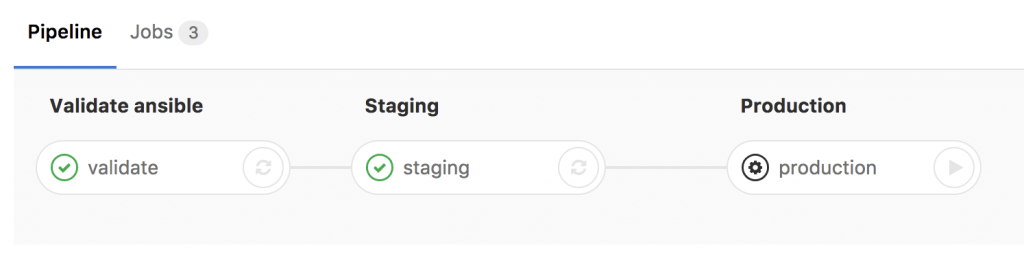

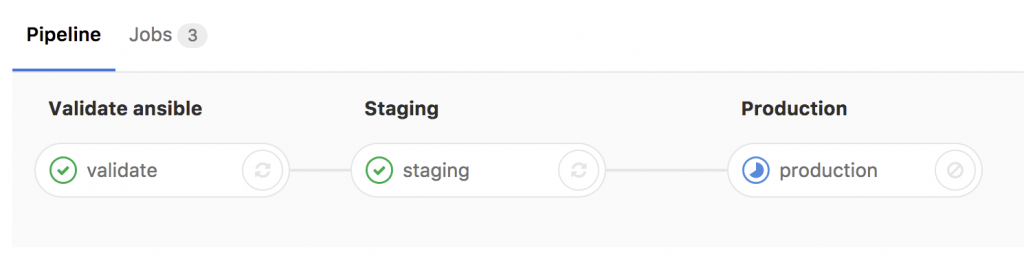

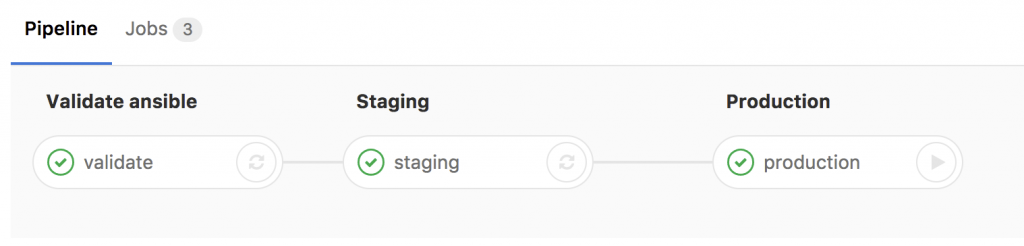

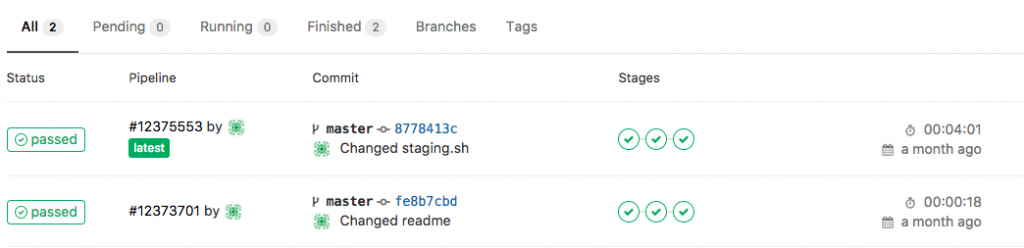

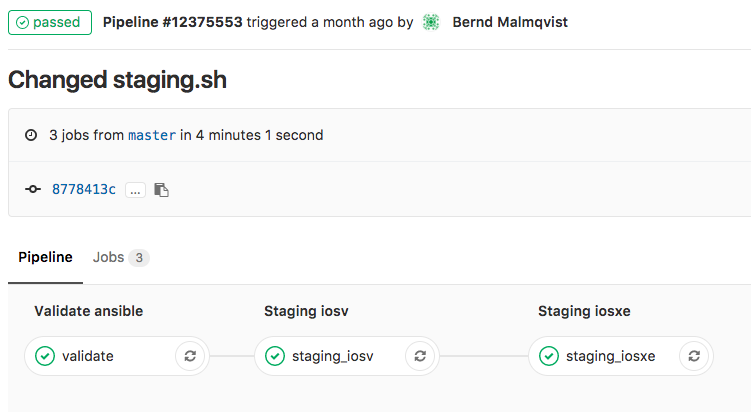

- Basically, any commit or merge in the Gitlab repo triggers the pipeline defined in .gitlab-ci.yml.

- The first stage only validates that the YAML files have the correct syntax.

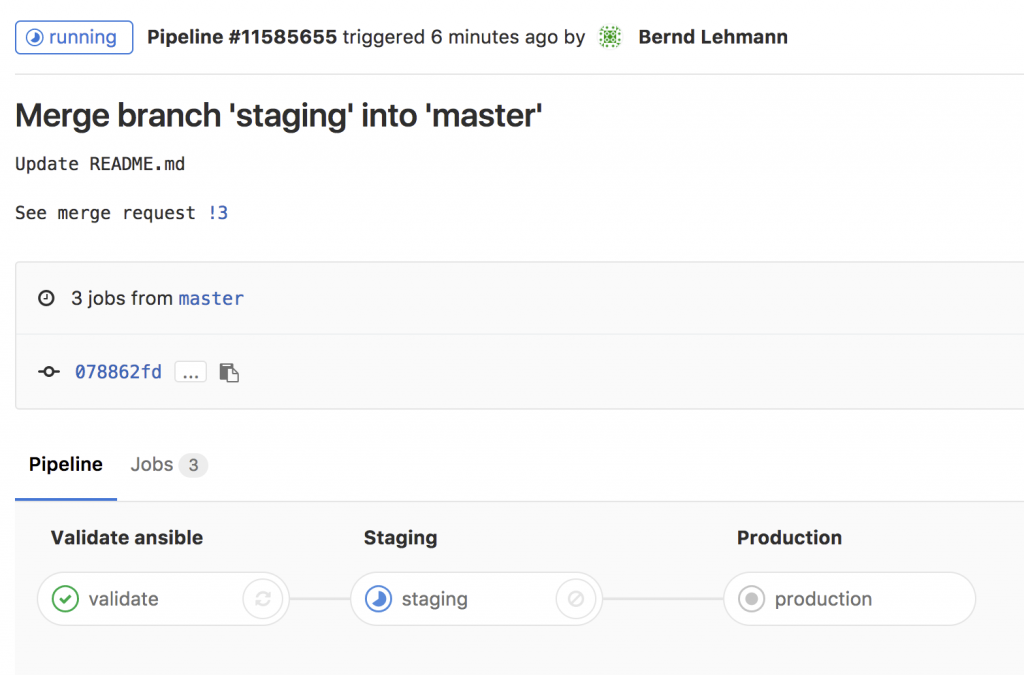

- Here are the details of a job; when everything goes well, the job succeeds.
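The syntax check in the first stage could look roughly like the sketch below. linter.sh itself is not shown in this post, so this is only an illustration under assumptions: the function name `lint_yaml` and the `LINT_CMD` variable are hypothetical, and yamllint is just one possible checker (`ansible-playbook --syntax-check` would be another option for playbooks).

```shell
#!/usr/bin/env bash
# Hypothetical sketch; the real linter.sh is not shown in this post.

# Check every .yml/.yaml file directly inside a directory (not recursive).
# LINT_CMD is an assumed override; it defaults to yamllint.
lint_yaml() {
  local dir="${1:-.}"
  local failed=0
  local f

  for f in "$dir"/*.yml "$dir"/*.yaml; do
    [ -e "$f" ] || continue   # skip patterns that matched nothing
    if ! ${LINT_CMD:-yamllint} "$f"; then
      echo "FAILED: $f"
      failed=1
    fi
  done
  # A non-zero return makes the CI job, and with it the pipeline, fail.
  return "$failed"
}
```

Calling `lint_yaml .` as the last line of the script would let a single broken file stop the pipeline before any staging environment is started.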

This is an easy way to test your Ansible playbooks against a virtual Cisco environment before deploying them to a production system.

Here again are the two repositories I use:

https://github.com/berndonline/cisco-lab-vagrant

https://github.com/berndonline/cisco-lab-provision

Read my new posts about Ansible Playbook for Cisco ASAv Firewall Topology or Ansible Playbook for Cisco BGP Routing Topology.