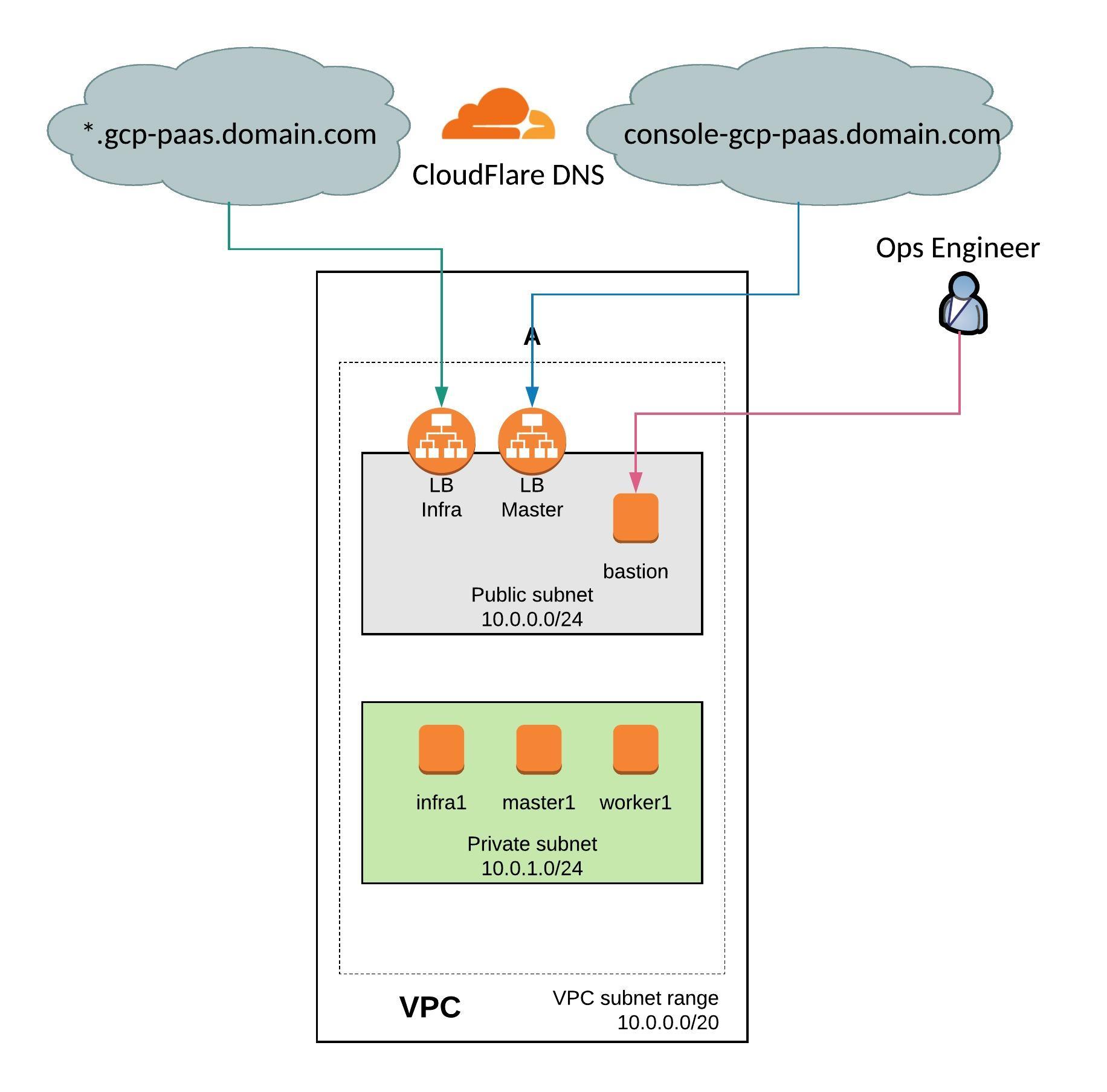

Over the past few days I have ported my OpenShift 3.11 infrastructure from Amazon AWS to Google Cloud Platform. I kept a similar VPC network layout and the same set of instances to run OpenShift.

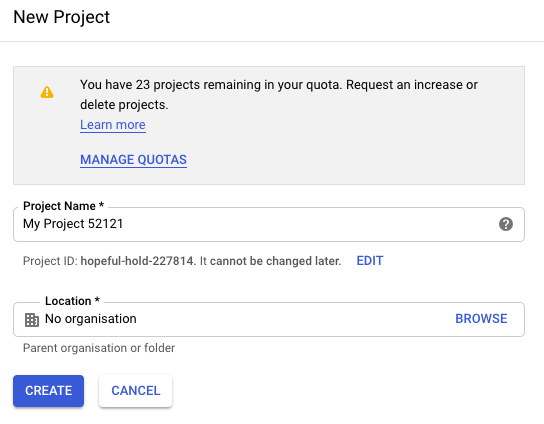

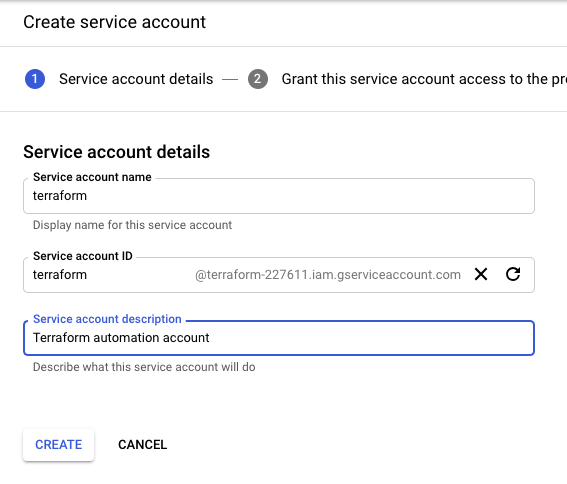

Before you start, create a project on Google Cloud Platform. Then create a service account, generate a private key for it, and download the credentials as a JSON file.

Create the new project:

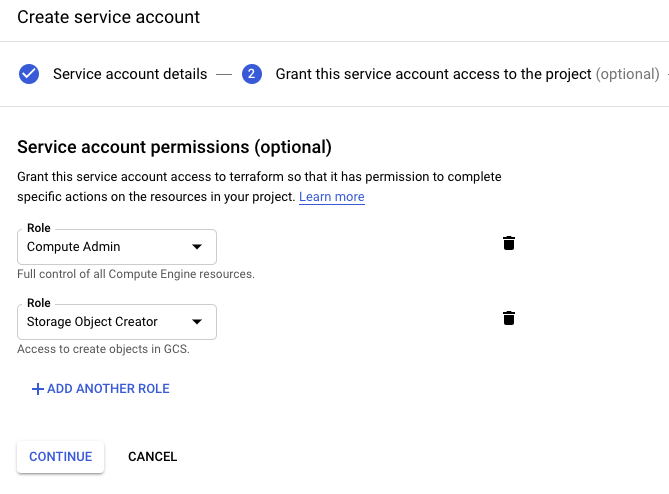

Create the service account:

Grant the service account the Compute Admin and Storage Object Creator roles:

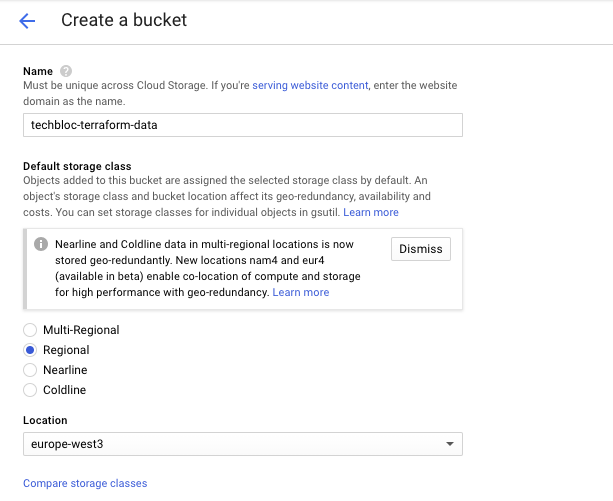

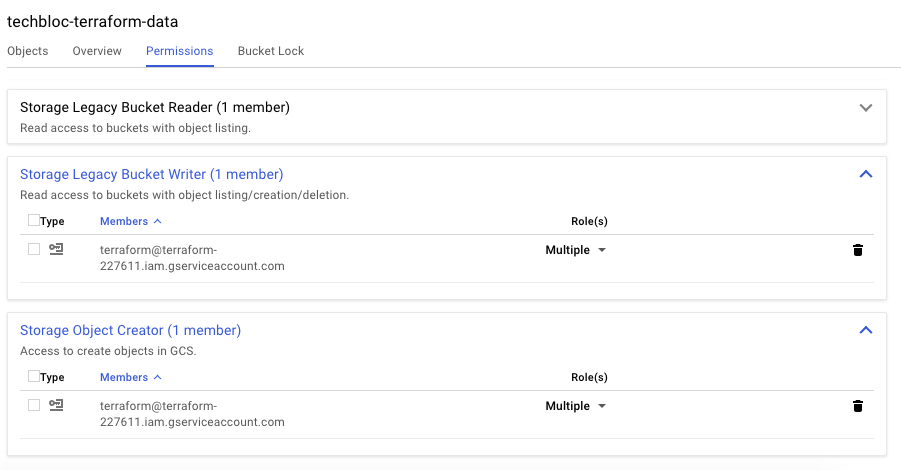

Then create a storage bucket for the Terraform backend state and grant the Terraform service account the required permission on that bucket:

Bucket permissions:
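If you prefer the command line over the console, the setup steps above could be sketched roughly as follows. This is only a sketch under assumptions: the project ID, service account name and bucket name are placeholders, and the `objectAdmin` grant on the bucket is my guess at a permission that lets Terraform read and write its state. The script prints the commands by default and only executes them when `DRY_RUN=0` is set.

```shell
# Sketch: CLI equivalent of the console setup steps.
# All names below are placeholders (assumptions), not values from the post.
PROJECT_ID="${PROJECT_ID:-my-openshift-project}"
SA_NAME="${SA_NAME:-terraform}"
BUCKET="${BUCKET:-my-terraform-state-bucket}"
REGION="${REGION:-europe-west3}"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

setup_gcp() {
  run gcloud projects create "$PROJECT_ID"
  run gcloud iam service-accounts create "$SA_NAME" --project "$PROJECT_ID"
  # Project-level roles mentioned in the post.
  for role in roles/compute.admin roles/storage.objectCreator; do
    run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
      --member "serviceAccount:${SA_EMAIL}" --role "$role"
  done
  # Generate the private key and download it as credentials.json.
  run gcloud iam service-accounts keys create ./credentials.json \
    --iam-account "$SA_EMAIL"
  # Bucket for the Terraform backend state, plus a bucket-level grant (assumed role).
  run gsutil mb -p "$PROJECT_ID" -l "$REGION" "gs://${BUCKET}"
  run gsutil iam ch "serviceAccount:${SA_EMAIL}:objectAdmin" "gs://${BUCKET}"
}

setup_gcp
```

Review the printed commands first, then rerun with `DRY_RUN=0` once the names match your environment.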

To start, clone my openshift-terraform GitHub repository and check out the google-dev branch:

git clone https://github.com/berndonline/openshift-terraform.git

cd ./openshift-terraform/ && git checkout google-dev

Add the credentials JSON file you downloaded earlier:

cat << EOF > ./credentials.json

{

"type": "service_account",

"project_id": "<--your-project-->",

"private_key_id": "<--your-key-id-->",

"private_key": "-----BEGIN PRIVATE KEY-----

...

}

EOF
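Because a malformed or incomplete key file only shows up later as a backend error, a quick sanity check can help. The function below is a minimal sketch, not part of the repository: `check_credentials` is a hypothetical helper that only looks for the fields shown above and tightens the file permissions.

```shell
# Returns 0 if the file looks like a service account key, non-zero otherwise.
# check_credentials is a hypothetical helper name, not part of the repository.
check_credentials() {
  file="$1"
  [ -f "$file" ] || { echo "missing file: $file" >&2; return 1; }
  # Only the fields visible in the snippet above are checked.
  for key in type project_id private_key_id private_key; do
    grep -q "\"${key}\"" "$file" \
      || { echo "missing field: $key" >&2; return 1; }
  done
  grep -Eq '"type"[[:space:]]*:[[:space:]]*"service_account"' "$file" \
    || { echo "not a service account key" >&2; return 1; }
  # The key is a secret, so restrict it to the current user.
  chmod 600 "$file"
}

# Usage: check_credentials ./credentials.json && echo "credentials look fine"
```

This is a text-level check only; it does not verify that the key is valid or still active.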

There are a few things you need to modify in the main.tf and variables.tf before you can start:

...

terraform {

backend "gcs" {

bucket = "<--your-bucket-name-->"

prefix = "openshift-311"

credentials = "credentials.json"

}

}

...

...

variable "gcp_region" {

description = "Google Compute Platform region to launch servers."

default = "europe-west3"

}

variable "gcp_project" {

description = "Google Compute Platform project name."

default = "<--your-project-name-->"

}

variable "gcp_zone" {

type = "string"

default = "europe-west3-a"

description = "The zone to provision into"

}

...
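As an alternative to editing the defaults in variables.tf, Terraform also reads input variables from `TF_VAR_<name>` environment variables, which take precedence over the defaults. A small sketch, with placeholder values you would replace with your own; the zone check at the end is just a convenience I added, since the zone has to belong to the chosen region:

```shell
# Placeholder values (assumptions); substitute your own project and location.
export TF_VAR_gcp_project="my-openshift-project"
export TF_VAR_gcp_region="europe-west3"
export TF_VAR_gcp_zone="europe-west3-a"

# Sanity check: a zone name is its region name plus a suffix such as "-a".
case "$TF_VAR_gcp_zone" in
  "$TF_VAR_gcp_region"-*) zone_ok=1 ;;
  *) zone_ok=0; echo "zone $TF_VAR_gcp_zone is not in region $TF_VAR_gcp_region" >&2 ;;
esac
```

The backend block in main.tf cannot use variables, so the bucket name still has to be set there.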

Add the environment variables Terraform needs to apply changes to Cloudflare DNS:

export TF_VAR_email='<-YOUR-CLOUDFLARE-EMAIL-ADDRESS->'

export TF_VAR_token='<-YOUR-CLOUDFLARE-TOKEN->'

export TF_VAR_domain='<-YOUR-CLOUDFLARE-DOMAIN->'

export TF_VAR_htpasswd='<-YOUR-OPENSHIFT-DEMO-USER-HTPASSWD->'
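If one of these variables is missing, Terraform will stop and prompt for it interactively, so it can be worth checking them up front. A minimal sketch, where `check_tf_vars` is a hypothetical helper and not something the repository provides:

```shell
# Report any of the four variables above that are unset or empty.
# check_tf_vars is a hypothetical helper name.
check_tf_vars() {
  missing=0
  for name in email token domain htpasswd; do
    # printenv only sees exported variables, which is what Terraform sees too.
    if [ -z "$(printenv "TF_VAR_${name}")" ]; then
      echo "TF_VAR_${name} is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_tf_vars && terraform init && terraform apply -auto-approve
```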

Now create the infrastructure, then verify the created resources on GCP.

terraform init && terraform apply -auto-approve

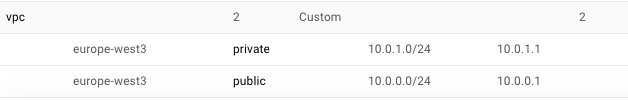

VPC and public and private subnets in region europe-west3:

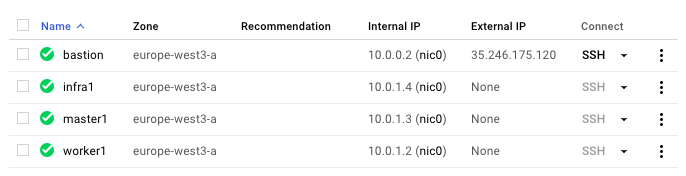

Created instances:

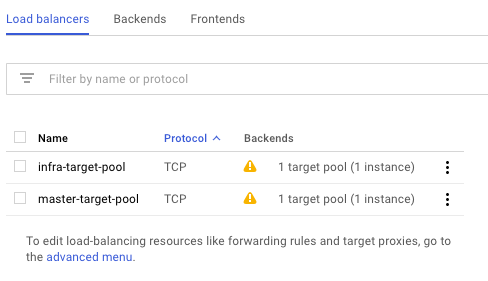

Created load balancers for master and infra nodes:

Copy the SSH key and the ansible-hosts file to the bastion host, from which you will run the Ansible OpenShift playbooks.

scp -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -r ./helper_scripts/id_rsa centos@$(terraform output bastion):/home/centos/.ssh/

scp -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -r ./inventory/ansible-hosts centos@$(terraform output bastion):/home/centos/ansible-hosts

I recommend waiting a few minutes while the cloud-init script prepares the bastion host. Afterwards, run the pre-install playbook followed by the install playbook. You can connect to the bastion host and run the playbooks directly.

ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-pre.yml -i ~/ansible-hosts"

ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-install.yml -i ~/ansible-hosts"
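Rather than waiting a fixed few minutes before running the playbooks, you could poll the bastion host until cloud-init has finished. The `retry` function below is a generic sketch of that idea and a hypothetical helper; the commented usage assumes cloud-init writes its usual `boot-finished` marker file on the bastion image, which I have not verified for this setup.

```shell
# retry <attempts> <delay-seconds> <command...>
# Runs the command until it succeeds or the attempts are used up.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt $i/$attempts failed" >&2
    # Sleep between attempts, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Hypothetical usage: up to 30 attempts, 10 seconds apart.
# The marker file path is an assumption about the bastion image.
# retry 30 10 ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null \
#   -i ./helper_scripts/id_rsa -l centos "$(terraform output bastion)" \
#   "test -f /var/lib/cloud/instance/boot-finished"
```

Once the command returns successfully, the bastion should be ready for the pre-install and install playbooks.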

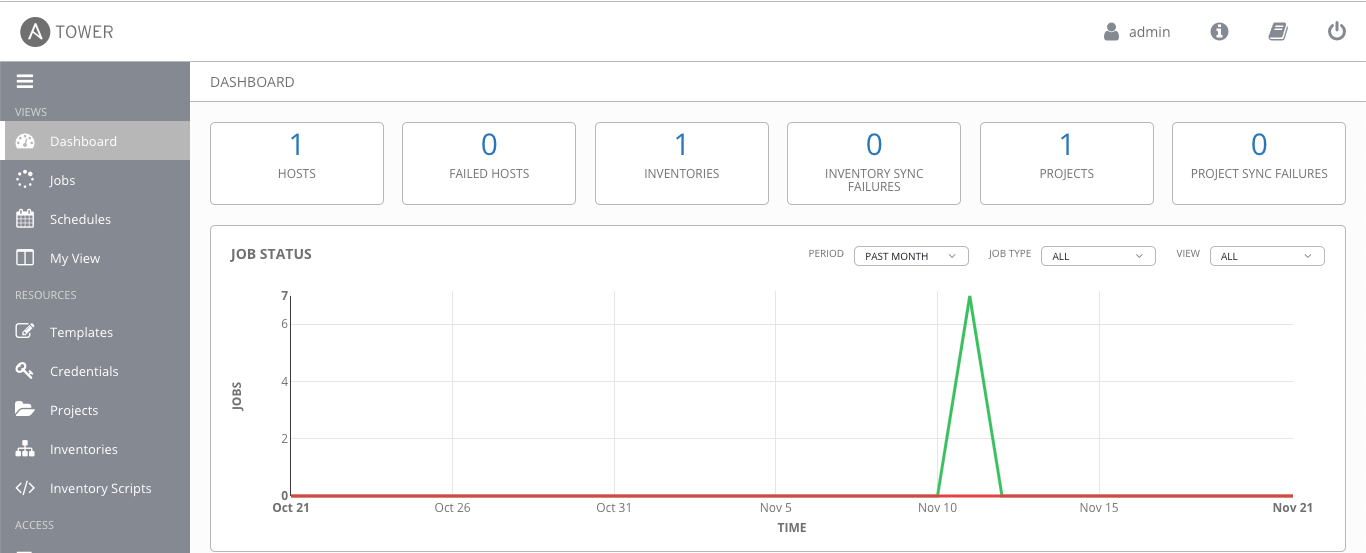

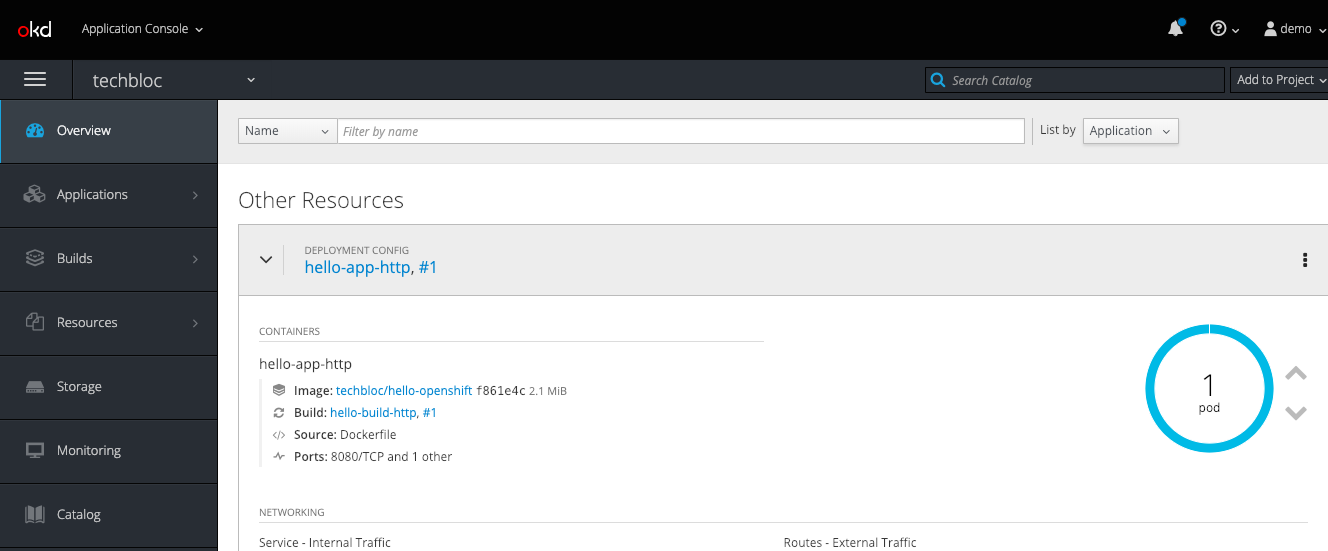

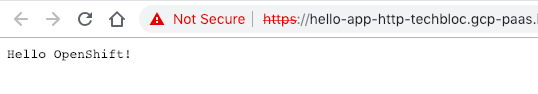

After the installation is completed, continue to create your project and applications:

When you have finished testing, run terraform destroy to remove the infrastructure again.

terraform destroy -force

Please share your feedback and leave a comment.