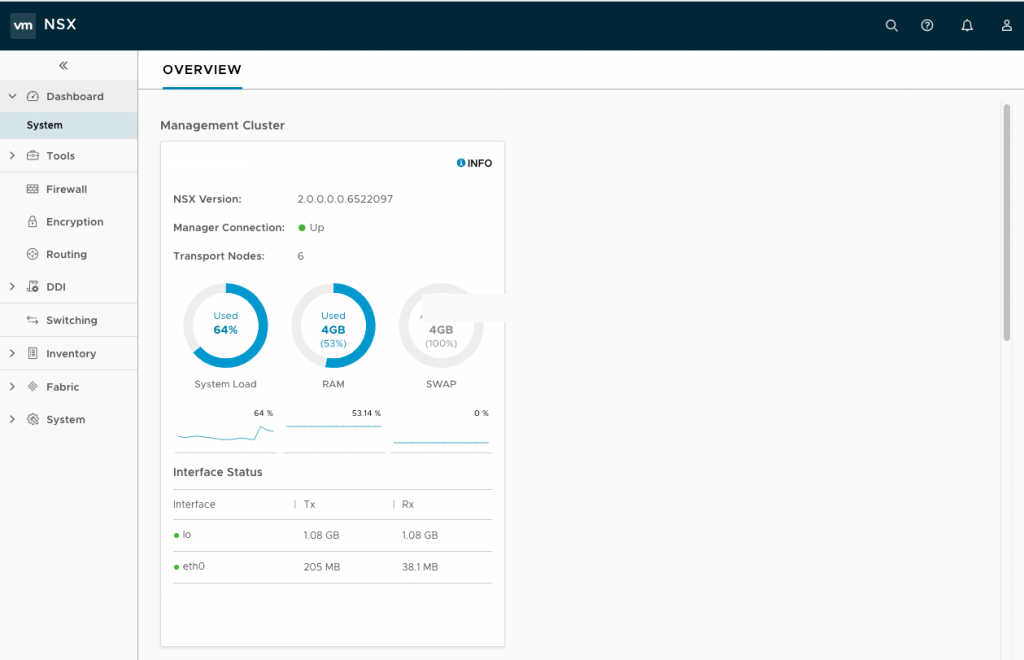

Over the past two days I spent some time with VMware NSX-T 2.0, which has multi-hypervisor (KVM and ESXi) support and is aimed at containerised platform environments like Kubernetes and Red Hat OpenShift. VMware also has an NSX-T cloud version which can run in Amazon AWS and Google cloud services.

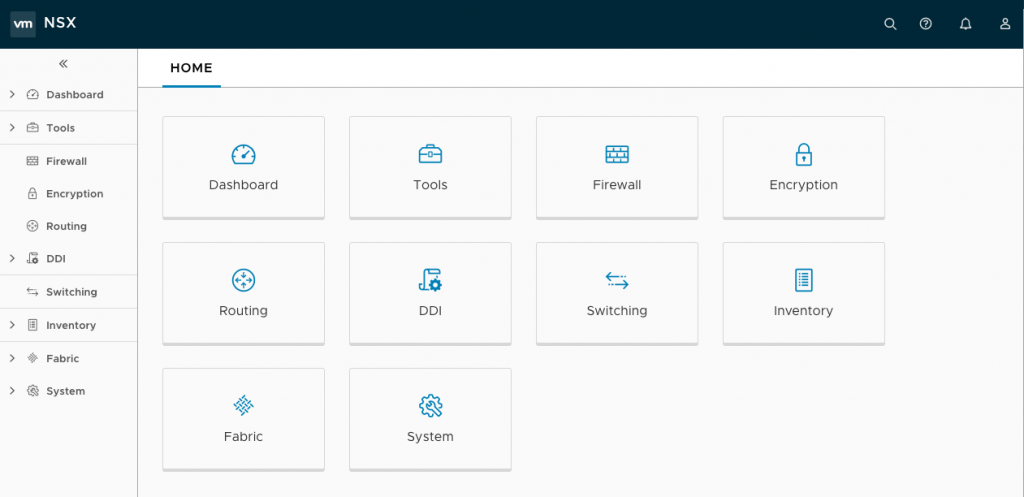

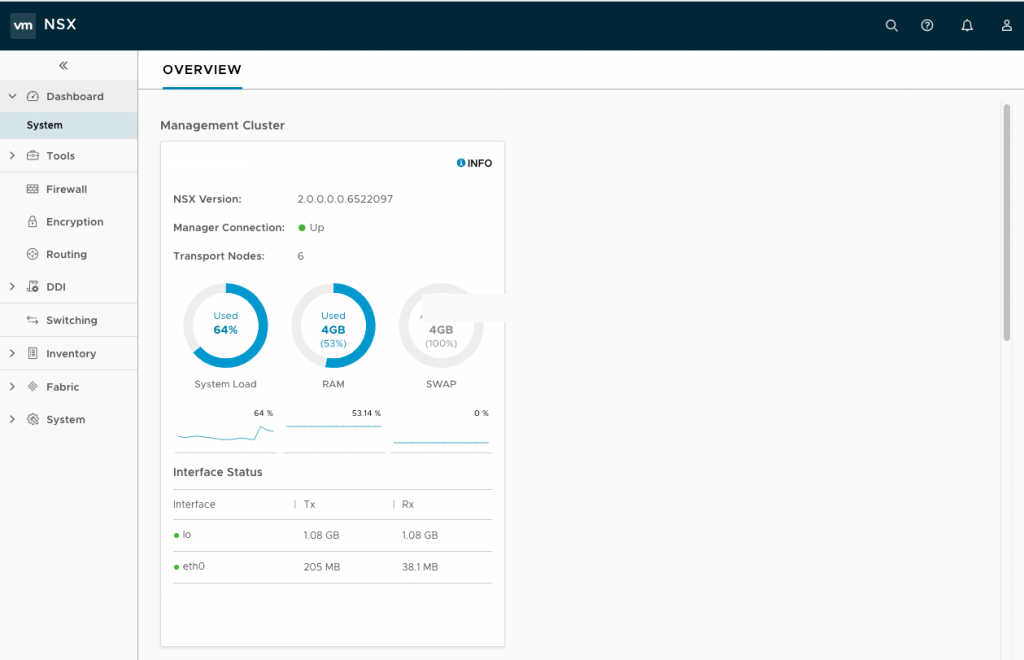

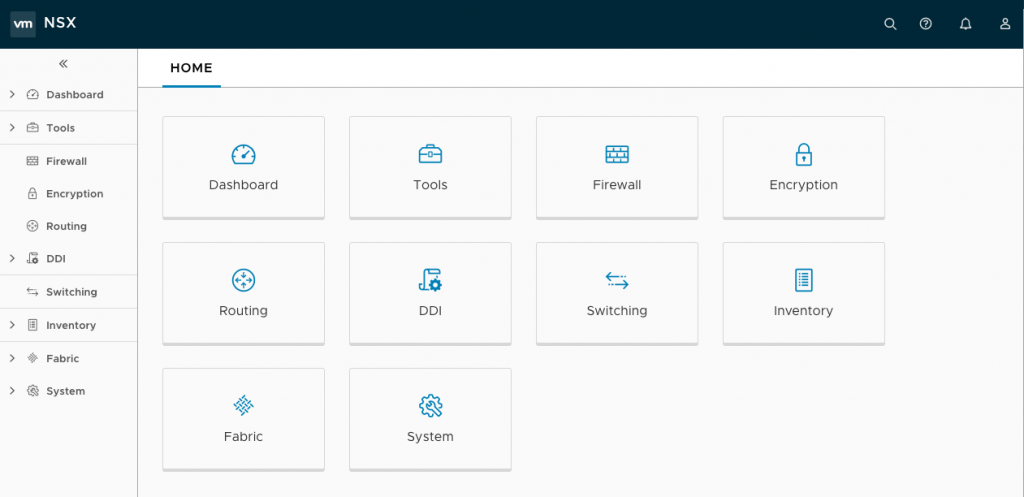

The first big change is the new HTML5 web client, which looks nice and clean. The menu structure is different from NSX-V for vSphere, so you have to get used to it first. I have heard that NSX-V will also get the new HTML5 web client soon.

VMware made quite a few changes in NSX-T: they moved over to Geneve, replacing the VXLAN encapsulation which is currently used in NSX-V. That makes it impossible at the moment to connect NSX-V and NSX-T because of the different overlay technologies.
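To make the difference between the two overlays a bit more concrete, here is a minimal sketch that builds the VXLAN header (as defined in RFC 7348) and the Geneve base header (as defined in RFC 8926) for the same VNI. The function names and the VNI value are my own for illustration and have nothing to do with how NSX builds its packets internally.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned UDP port for VXLAN (RFC 7348)
GENEVE_UDP_PORT = 6081  # IANA-assigned UDP port for Geneve (RFC 8926)
ETH_BRIDGING = 0x6558   # EtherType "Transparent Ethernet Bridging"


def vxlan_header(vni: int) -> bytes:
    """Fixed 8-byte VXLAN header: flags, 24 reserved bits, VNI, 8 reserved bits."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # I flag: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def geneve_header(vni: int, options: bytes = b"") -> bytes:
    """8-byte Geneve base header followed by variable-length options."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    if len(options) % 4 or len(options) > 63 * 4:
        raise ValueError("options must be a multiple of 4 bytes, at most 252")
    ver_optlen = (0 << 6) | (len(options) // 4)  # version 0, length in 4-byte words
    flags = 0  # O (control) and C (critical options) bits cleared
    return struct.pack("!BBHI", ver_optlen, flags, ETH_BRIDGING, vni << 8) + options


# Illustrative VNI only; print both headers side by side.
print("VXLAN :", vxlan_header(5001).hex())
print("Geneve:", geneve_header(5001).hex())
```

Both headers carry a 24-bit VNI, but the layout, the UDP port and the optional metadata in Geneve differ, so a tunnel endpoint speaking one format cannot parse the other.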

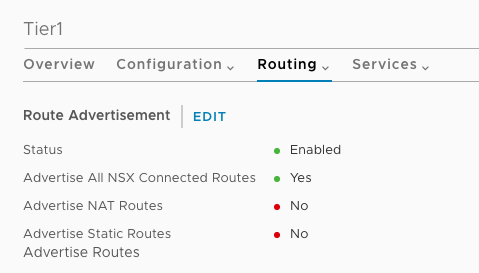

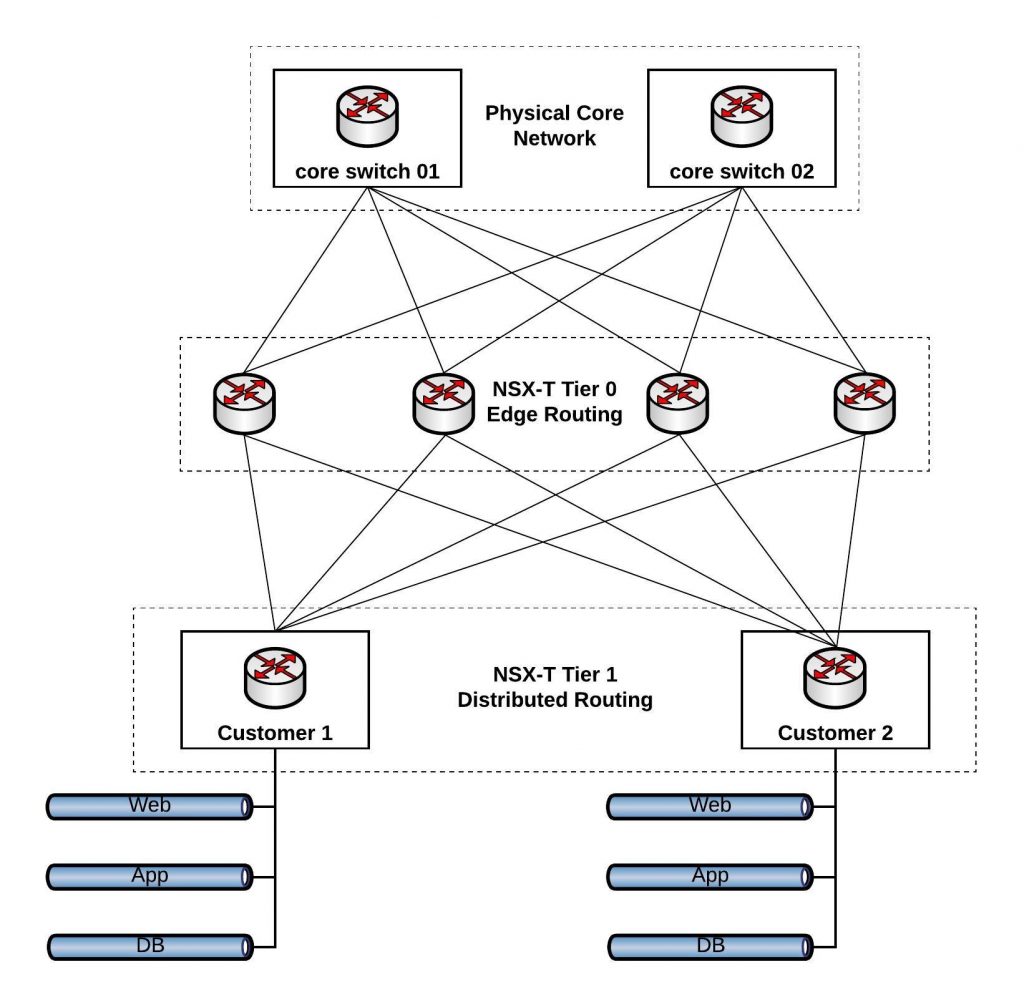

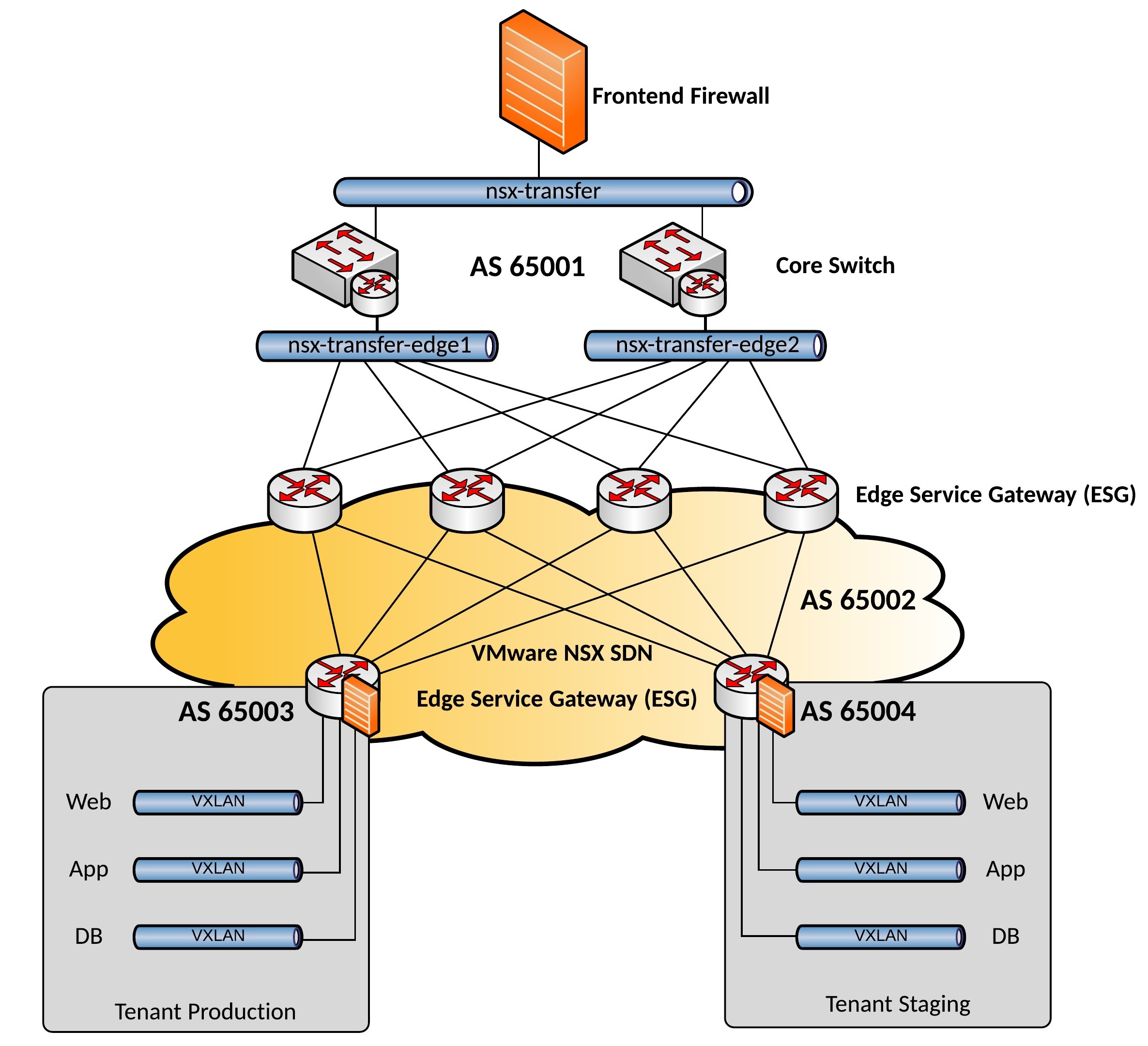

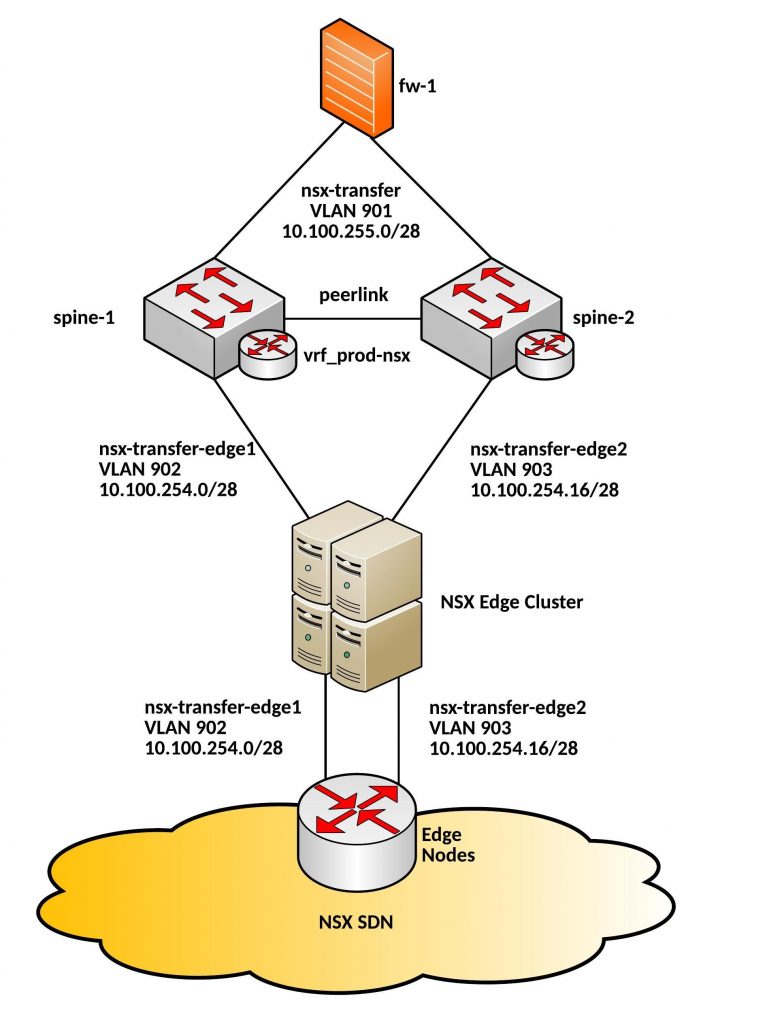

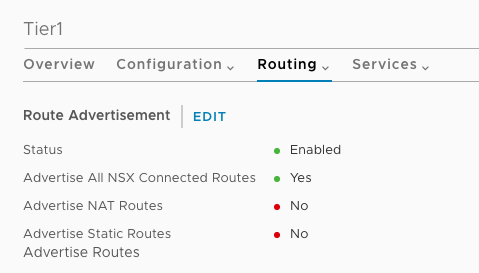

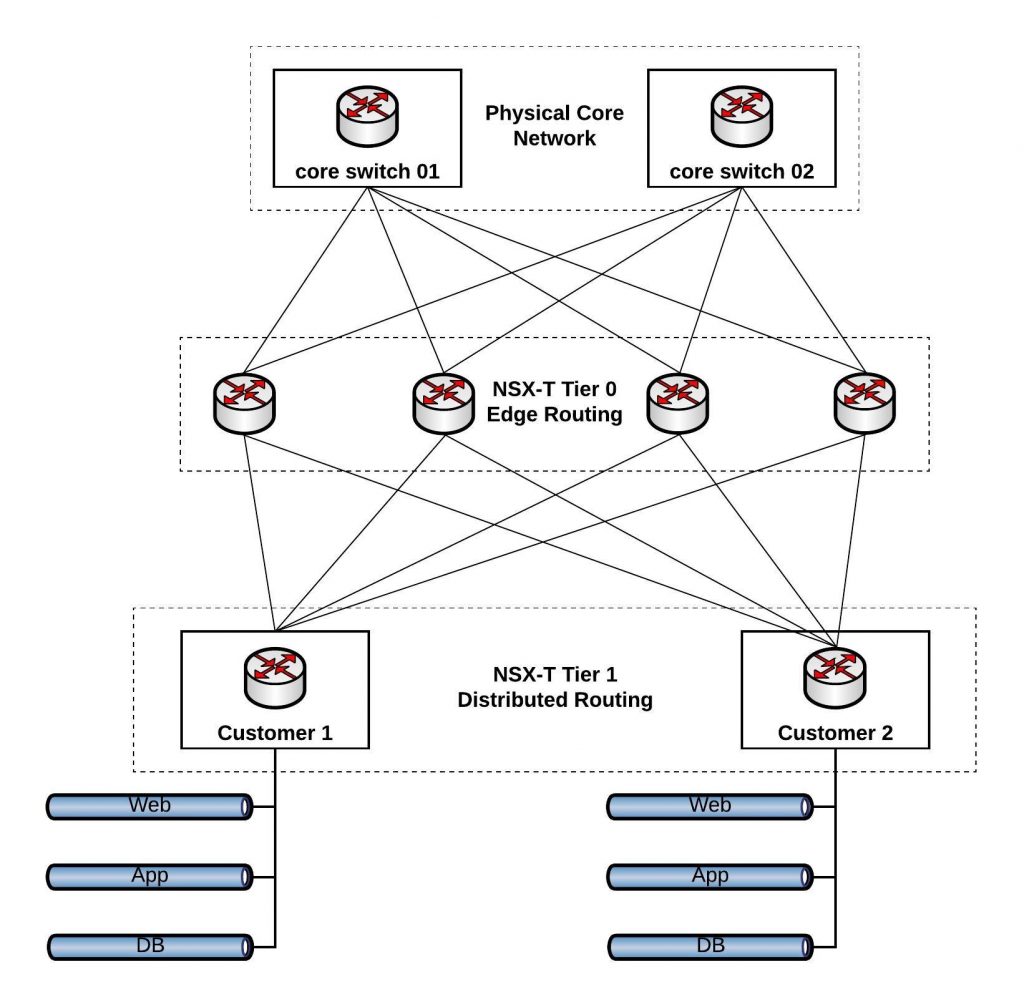

Routing works differently from the previous NSX for vSphere version, with Tier 0 (edge/aggregation) and Tier 1 (tenant) routers. Previously in NSX-V you used Edge appliances as tenant routers, which are now replaced by Tier 1 distributed routing. On the Tier 1 tenant router you don’t need to configure BGP anymore; you just specify that connected routes should be advertised, and the connection between Tier 1 and Tier 0 also pushes down the default gateway.

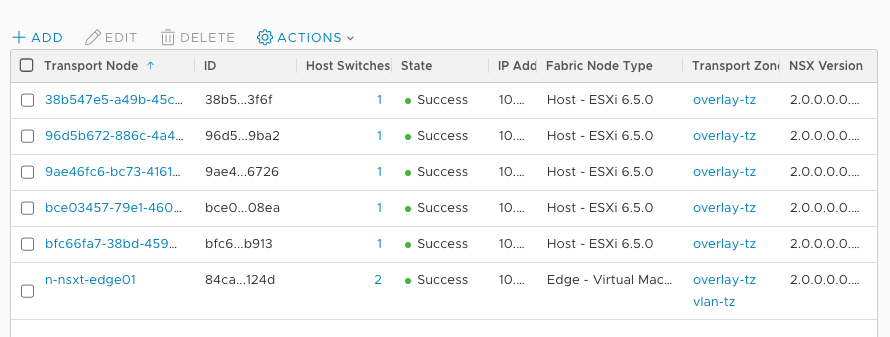

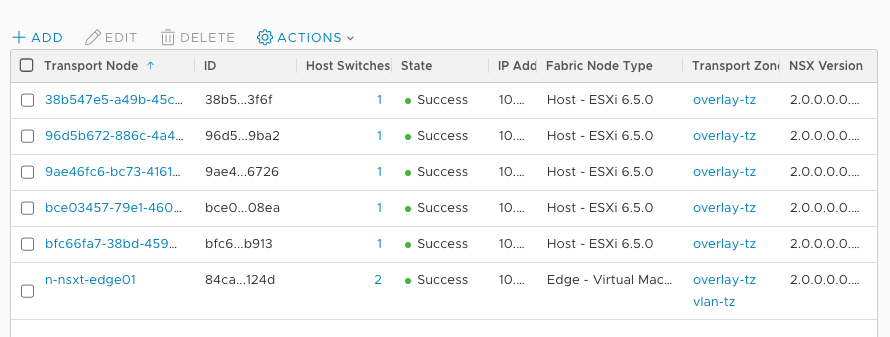
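The Tier 1 to Tier 0 relationship described above can be sketched as a small toy model. This is not the NSX-T API; the class names, router names and prefixes are made up, and the only behaviour modelled is "default route goes down, connected routes go up if advertisement is enabled".

```python
from dataclasses import dataclass, field
from ipaddress import ip_network


@dataclass
class Tier0:
    name: str
    routes: dict = field(default_factory=dict)  # prefix -> next hop (router name)


@dataclass
class Tier1:
    name: str
    connected: list  # prefixes of the logical switches attached to this router
    advertise_connected: bool = False
    routes: dict = field(default_factory=dict)

    def link_to(self, tier0: Tier0) -> None:
        """Model the Tier 1 to Tier 0 link: default route down, connected routes up."""
        self.routes[ip_network("0.0.0.0/0")] = tier0.name
        if self.advertise_connected:
            for prefix in self.connected:
                tier0.routes[ip_network(prefix)] = self.name


# Hypothetical names and prefixes, purely for illustration.
t0 = Tier0("t0-edge")
tenant = Tier1("t1-tenant-a", ["10.1.1.0/24", "10.1.2.0/24"], advertise_connected=True)
tenant.link_to(t0)
print(tenant.routes)
print(t0.routes)
```

No routing protocol appears between the two tiers in this model, which mirrors the point that BGP is not needed on the tenant router.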

The Edge appliance can be deployed as a virtual machine or on bare-metal servers, which makes the transport zoning different from NSX-V because Edge appliances need to be part of Transport Zones to connect to the overlay and the physical VLAN:

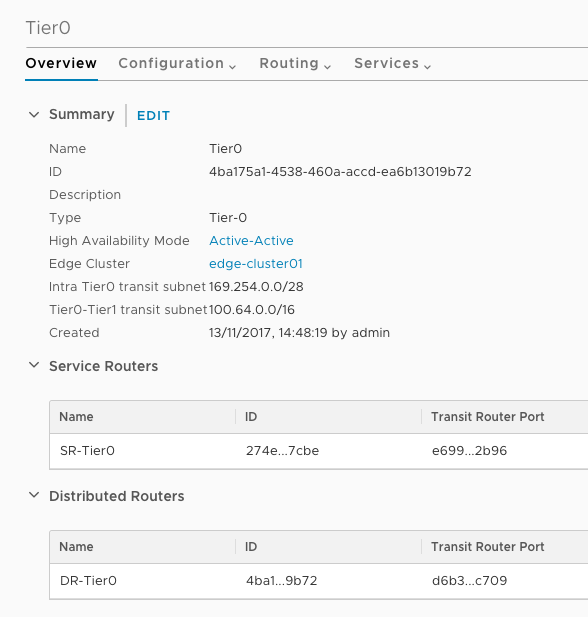

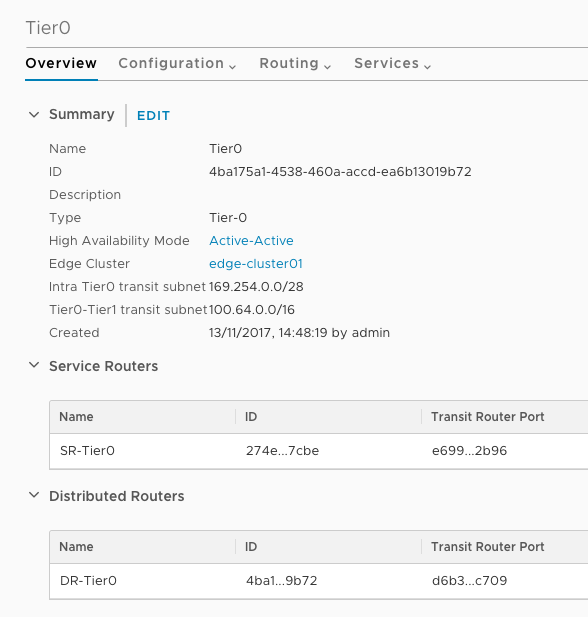

On the Edge itself you have two functions, Distributed Routing (DR) for stateless forwarding and Service Routing (SR) for stateful forwarding like NAT:

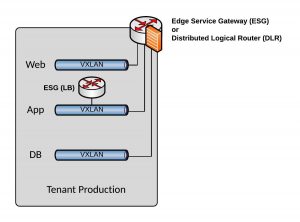
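As a rough way to think about the DR/SR split, here is a very simplified sketch that decides which components a logical router needs based on the services configured on it. The function name and the service list are my own assumptions; NAT is the only stateful service named here, so that is the only entry.

```python
# Assumption: NAT is the only stateful service mentioned in the text; extend as needed.
STATEFUL_SERVICES = {"nat"}


def router_components(services: set) -> list:
    """Return which components a logical router would need in this simplified model."""
    components = ["DR"]  # stateless forwarding is always present
    if services & STATEFUL_SERVICES:
        components.append("SR")  # stateful services are handled by the service router
    return components


print(router_components(set()))
print(router_components({"nat"}))
```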

Load balancing is currently missing in the Edge appliance, but it is coming in one of the next releases of NSX-T.

Here is a network design with Tier 0 and Tier 1 routing in NSX-T:

I will write another post in the coming weeks about the detailed routing configuration in NSX-T. I am also curious to integrate Kubernetes with NSX-T to try out the integration for containerised platform environments.