I recently deployed VMware NSX (software-defined networking) in our datacentre.

The NSX Edge cluster has some specific requirements when it comes to physical connectivity. All of this information can also be found in the VMware NSX reference design guide.

On the Cumulus Linux side I am using BGP in Quagga, and the traffic is distributed via ECMP (Equal-Cost Multi-Path) across multiple Edge nodes within NSX.
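Conceptually, ECMP keeps each flow on a single path by hashing packet header fields and using the result to choose among the equal-cost next hops. The Python sketch below illustrates the idea with the four Edge node addresses from the spine-1 config in this post. The SHA-256 hash and the `pick_next_hop` function are assumptions for illustration only; they are not how Cumulus Linux or the switch ASIC actually computes its ECMP hash.

```python
import hashlib
from collections import Counter

# BGP next hops learned from the four NSX Edge nodes (addresses from the spine-1 config).
EDGE_NEXT_HOPS = ["10.100.254.1", "10.100.254.2", "10.100.254.3", "10.100.254.4"]


def pick_next_hop(src_ip: str, dst_ip: str, proto: int, src_port: int, dst_port: int) -> str:
    """Map a flow's 5-tuple to one of the equal-cost next hops.

    Illustrative only: real switches use their own hash functions, not SHA-256.
    The point is that the same flow always hashes to the same next hop, while
    different flows spread across all of them.
    """
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(EDGE_NEXT_HOPS)
    return EDGE_NEXT_HOPS[index]


if __name__ == "__main__":
    # Spread 1,000 synthetic TCP flows and count how many land on each Edge node.
    counts = Counter(
        pick_next_hop("192.0.2.10", "10.100.1.20", 6, 40000 + i, 443) for i in range(1000)
    )
    for hop, n in sorted(counts.items()):
        print(hop, n)
```

Per-flow hashing avoids packet reordering within a TCP session, at the cost of uneven load when a few flows are very large.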

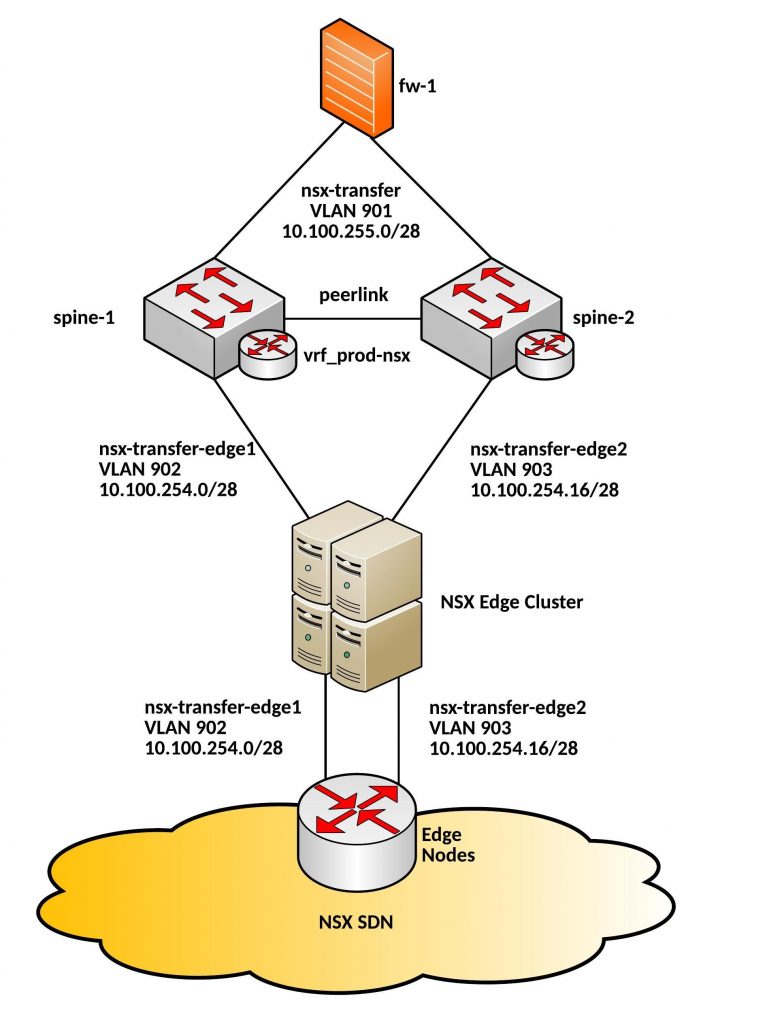

See below the overview:

It is very important to have a dedicated VLAN per core switch towards the Edge nodes. In my tests it did not work with a VLAN shared across the Cumulus core switches: the BGP neighbor relationships were established correctly, but there was a problem with packet forwarding across the peerlink.

Here is the example Quagga BGP config from spine-1:

```
router bgp 65001 vrf vrf-nsx
 ! eBGP to the four NSX Edge nodes (AS 65002), keepalive 1 s / hold time 3 s
 neighbor 10.100.254.1 remote-as 65002
 neighbor 10.100.254.1 password verystrongpassword!!
 neighbor 10.100.254.1 timers 1 3
 neighbor 10.100.254.2 remote-as 65002
 neighbor 10.100.254.2 password verystrongpassword!!
 neighbor 10.100.254.2 timers 1 3
 neighbor 10.100.254.3 remote-as 65002
 neighbor 10.100.254.3 password verystrongpassword!!
 neighbor 10.100.254.3 timers 1 3
 neighbor 10.100.254.4 remote-as 65002
 neighbor 10.100.254.4 password verystrongpassword!!
 neighbor 10.100.254.4 timers 1 3
 ! iBGP peer in the same AS 65001
 neighbor 10.100.255.2 remote-as 65001
 neighbor 10.100.255.2 password verystrongpassword!!
 !
 address-family ipv4 unicast
  ! originate a default route (only advertised if 0.0.0.0/0 is in this VRF's routing table)
  network 0.0.0.0/0
  neighbor 10.100.254.1 route-map bgp-in in
  neighbor 10.100.254.2 route-map bgp-in in
  neighbor 10.100.254.3 route-map bgp-in in
  neighbor 10.100.254.4 route-map bgp-in in
  neighbor 10.100.255.2 next-hop-self
  neighbor 10.100.255.2 route-map bgp-in in
 exit-address-family
!
ip route 0.0.0.0/0 10.100.255.14 vrf vrf_prod-nsx
!
! accept only prefixes within 10.100.0.0/17 from the BGP peers
access-list bgp-in permit 10.100.0.0/17
!
route-map bgp-in permit 10
 match ip address bgp-in
```
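To make the inbound policy concrete: `route-map bgp-in` only accepts prefixes matched by `access-list bgp-in`, so the spine should learn only routes inside 10.100.0.0/17 from the Edge nodes and from its iBGP peer, and everything else falls through to the route-map's implicit deny. The Python sketch below models that check with the standard `ipaddress` module. `bgp_in_accepts` is a hypothetical helper, and it assumes the access-list entry matches any more-specific prefix within 10.100.0.0/17 (no `exact-match`).

```python
import ipaddress

# Mirrors "access-list bgp-in permit 10.100.0.0/17" from the config above.
PERMITTED = ipaddress.ip_network("10.100.0.0/17")


def bgp_in_accepts(prefix: str) -> bool:
    """Return True if route-map bgp-in would accept this prefix (assumed semantics).

    A prefix is accepted when it lies inside 10.100.0.0/17; anything else,
    including broader prefixes and the default route, hits the implicit deny.
    """
    net = ipaddress.ip_network(prefix)
    # Check the IP version first: subnet_of() raises TypeError on mixed versions.
    return net.version == 4 and net.subnet_of(PERMITTED)


if __name__ == "__main__":
    for p in ["10.100.10.0/24", "10.100.128.0/24", "10.100.0.0/16", "0.0.0.0/0"]:
        print(p, bgp_in_accepts(p))
```

Under this model a default route announced by an Edge node would be rejected, which fits the spine originating 0.0.0.0/0 itself via the `network` statement.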

The second core switch, spine-2, looks exactly the same; only the IP addresses are different.
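Because the two spines differ only in their addresses, the repetitive neighbor stanzas lend themselves to templating. The following Python sketch shows one hypothetical way to do that: `SpineBgp` and `render` are illustrative names, only the `router bgp` block is generated (the static route, access-list and route-map could be added the same way), and since the spine-2 addresses are not listed here, only the spine-1 values are filled in.

```python
from dataclasses import dataclass


@dataclass
class SpineBgp:
    """Per-spine BGP parameters (hypothetical model of the config above)."""
    local_as: int
    vrf: str
    edge_neighbors: list[str]
    edge_as: int
    ibgp_peer: str
    password: str
    keepalive: int = 1
    holdtime: int = 3


def render(cfg: SpineBgp) -> str:
    """Render the Quagga 'router bgp' block for one spine."""
    lines = [f"router bgp {cfg.local_as} vrf {cfg.vrf}"]
    # eBGP sessions to the NSX Edge nodes
    for n in cfg.edge_neighbors:
        lines += [
            f" neighbor {n} remote-as {cfg.edge_as}",
            f" neighbor {n} password {cfg.password}",
            f" neighbor {n} timers {cfg.keepalive} {cfg.holdtime}",
        ]
    # iBGP session to the peer in the same AS
    lines += [
        f" neighbor {cfg.ibgp_peer} remote-as {cfg.local_as}",
        f" neighbor {cfg.ibgp_peer} password {cfg.password}",
        " address-family ipv4 unicast",
        "  network 0.0.0.0/0",
    ]
    lines += [f"  neighbor {n} route-map bgp-in in" for n in cfg.edge_neighbors]
    lines += [
        f"  neighbor {cfg.ibgp_peer} next-hop-self",
        f"  neighbor {cfg.ibgp_peer} route-map bgp-in in",
        " exit-address-family",
    ]
    return "\n".join(lines)


# Values taken from the spine-1 config; spine-2 would use its own addresses.
spine1 = SpineBgp(
    local_as=65001,
    vrf="vrf-nsx",
    edge_neighbors=["10.100.254.1", "10.100.254.2", "10.100.254.3", "10.100.254.4"],
    edge_as=65002,
    ibgp_peer="10.100.255.2",
    password="verystrongpassword!!",
)

if __name__ == "__main__":
    print(render(spine1))
```

Keeping the per-spine differences in one data structure makes it harder for the two core switches to drift apart over time.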

More about my experience with VMware NSX will follow soon.