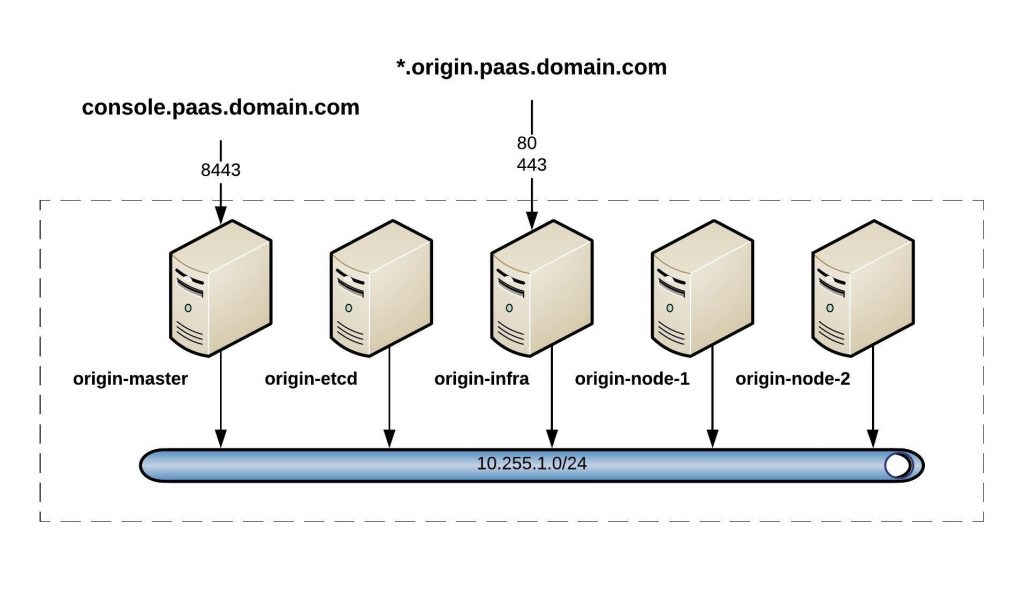

This is a simple example of deploying a web application such as WordPress on an OpenShift cluster.

I am doing this on the command line because it is much quicker, but you can also do it in the web console. First, we need to log in:

```
[vagrant@origin-master ~]$ oc login https://console.paas.domain.com:8443
The server is using a certificate that does not match its hostname: x509: certificate is not valid for any names, but wanted to match console.paas.domain.com
You can bypass the certificate check, but any data you send to the server could be intercepted by others.
Use insecure connections? (y/n): y

Authentication required for https://console.paas.domain.com:8443 (openshift)
Username: demo
Password:
Login successful.

You don't have any projects. You can try to create a new project, by running

    oc new-project

[vagrant@origin-master ~]$
```
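If you want to script this step, `oc login` can also run without the interactive prompts. The sketch below assumes a lab cluster with a self-signed certificate (hence `--insecure-skip-tls-verify=true`, which answers the "Use insecure connections?" prompt up front and should be dropped once the certificate matches the hostname) and a hypothetical `OC_PASSWORD` environment variable holding the password. `oc_login_lab` is an illustrative helper name, not part of `oc`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: log in without the interactive prompts.
# OC defaults to "oc"; export OC="echo oc" for a dry run that only prints the command.
OC=${OC:-oc}

oc_login_lab() {
  local server=$1 user=$2
  # Assumption: OC_PASSWORD is exported by the caller. The password is still
  # visible in the process list while oc runs.
  # --insecure-skip-tls-verify=true skips the certificate check; lab use only.
  $OC login "$server" \
    --username="$user" \
    --password="$OC_PASSWORD" \
    --insecure-skip-tls-verify=true
}
```

For example, `oc_login_lab https://console.paas.domain.com:8443 demo` would log in as the `demo` user from the transcript.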

OpenShift tells us that we have no projects, so let's create a new project named testing:

```
[vagrant@origin-master ~]$ oc new-project testing
Now using project "testing" on server "https://console.paas.domain.com:8443".

You can add applications to this project with the 'new-app' command. For example, try:

    oc new-app centos/ruby-22-centos7~https://github.com/openshift/ruby-ex.git

to build a new example application in Ruby.
[vagrant@origin-master ~]$
```
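`oc new-project` fails if the project already exists, so a script you want to re-run may prefer to switch to the project when it is there and create it otherwise. A minimal sketch of that idea; `ensure_project` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make NAME the current project, creating it only if needed.
# OC defaults to "oc"; export OC="echo oc" for a dry run.
OC=${OC:-oc}

ensure_project() {
  local name=$1
  if $OC get project "$name" >/dev/null 2>&1; then
    # The project exists and is visible to us: just switch to it.
    $OC project "$name"
  else
    # Otherwise create it; new-project also makes it the current project.
    $OC new-project "$name"
  fi
}
```

Calling `ensure_project testing` twice should then be harmless: the first run creates the project, the second only switches to it.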

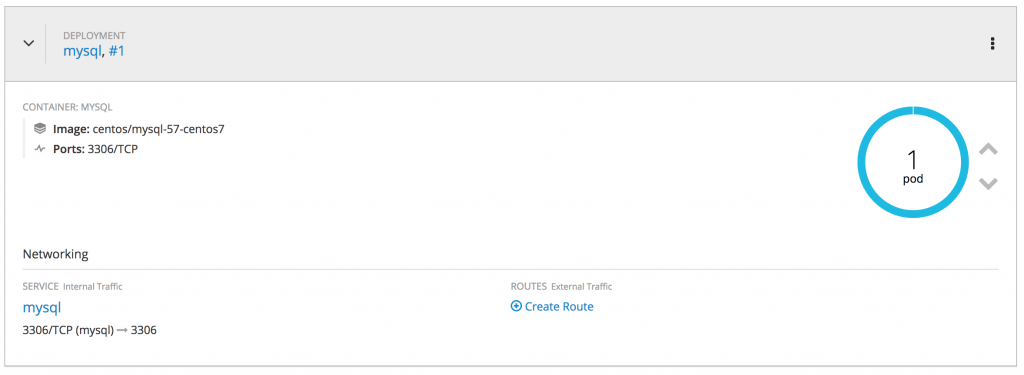

After creating the project, we can deploy the first application. WordPress needs a MySQL database, so we start with that. This is only an example: this MySQL instance does not use persistent storage, and I use the sample database credentials that the template generated:

```
[vagrant@origin-master ~]$ oc new-app mysql-ephemeral
--> Deploying template "openshift/mysql-ephemeral" to project testing2

     MySQL (Ephemeral)
     ---------
     MySQL database service, without persistent storage. For more information about using this template, including OpenShift considerations, see https://github.com/sclorg/mysql-container/blob/master/5.7/README.md.

     WARNING: Any data stored will be lost upon pod destruction. Only use this template for testing

     The following service(s) have been created in your project: mysql.

            Username: user1M5
            Password: 5KmiOFJ4UH2UIKbG
       Database Name: sampledb
      Connection URL: mysql://mysql:3306/

     For more information about using this template, including OpenShift considerations, see https://github.com/sclorg/mysql-container/blob/master/5.7/README.md.

     * With parameters:
        * Memory Limit=512Mi
        * Namespace=openshift
        * Database Service Name=mysql
        * MySQL Connection Username=user1M5
        * MySQL Connection Password=5KmiOFJ4UH2UIKbG # generated
        * MySQL root user Password=riPsYFaVEpHBYAWf # generated
        * MySQL Database Name=sampledb
        * Version of MySQL Image=5.7

--> Creating resources ...
    secret "mysql" created
    service "mysql" created
    deploymentconfig "mysql" created
--> Success
    Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:
     'oc expose svc/mysql'
    Run 'oc status' to view your app.
[vagrant@origin-master ~]$
```
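Instead of relying on generated credentials, you can pass the template parameters yourself. The names `MYSQL_USER`, `MYSQL_PASSWORD` and `MYSQL_DATABASE` are what I assume the template uses for the "MySQL Connection Username", "MySQL Connection Password" and "MySQL Database Name" values listed above; confirm them with `oc process --parameters -n openshift mysql-ephemeral` first. `create_mysql`, `DB_USER`, `DB_PASSWORD` and `DB_NAME` are hypothetical names. Passing `mysql-persistent` as the template keeps the data on a persistent volume, assuming that template is installed and your cluster can provide a volume.

```shell
#!/usr/bin/env bash
# Hypothetical helper: deploy MySQL from a template with our own credentials.
# OC defaults to "oc"; export OC="echo oc" for a dry run.
OC=${OC:-oc}

create_mysql() {
  # The first argument picks the template; mysql-ephemeral matches the example above.
  local template=${1:-mysql-ephemeral}
  # Assumption: DB_USER, DB_PASSWORD and DB_NAME are set by the caller, and the
  # template parameter names below match your cluster's template.
  $OC new-app "$template" \
    --param=MYSQL_USER="$DB_USER" \
    --param=MYSQL_PASSWORD="$DB_PASSWORD" \
    --param=MYSQL_DATABASE="$DB_NAME"
}
```

Choosing your own database name (for example `wordpress`) also makes the values you later type into the WordPress installer predictable.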

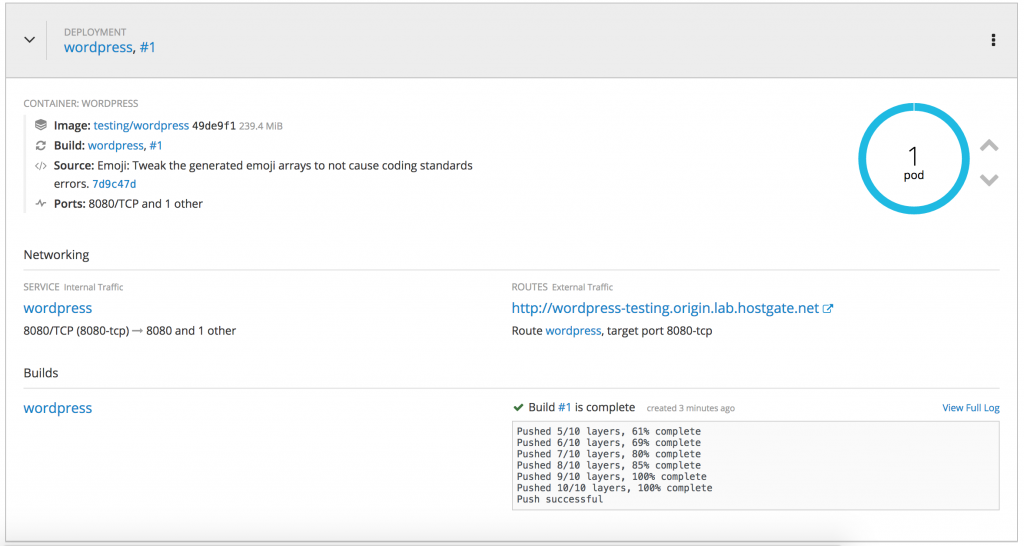

Next, let's create a PHP application that pulls the latest WordPress source from GitHub:

```
[vagrant@origin-master ~]$ oc new-app php~https://github.com/wordpress/wordpress
--> Found image fa73ae7 (5 days old) in image stream "openshift/php" under tag "7.0" for "php"

    Apache 2.4 with PHP 7.0
    -----------------------
    PHP 7.0 available as docker container is a base platform for building and running various PHP 7.0 applications and frameworks. PHP is an HTML-embedded scripting language. PHP attempts to make it easy for developers to write dynamically generated web pages. PHP also offers built-in database integration for several commercial and non-commercial database management systems, so writing a database-enabled webpage with PHP is fairly simple. The most common use of PHP coding is probably as a replacement for CGI scripts.

    Tags: builder, php, php70, rh-php70

    * A source build using source code from https://github.com/wordpress/wordpress will be created
      * The resulting image will be pushed to image stream "wordpress:latest"
      * Use 'start-build' to trigger a new build
    * This image will be deployed in deployment config "wordpress"
    * Ports 8080/tcp, 8443/tcp will be load balanced by service "wordpress"
      * Other containers can access this service through the hostname "wordpress"

--> Creating resources ...
    imagestream "wordpress" created
    buildconfig "wordpress" created
    deploymentconfig "wordpress" created
    service "wordpress" created
--> Success
    Build scheduled, use 'oc logs -f bc/wordpress' to track its progress.
    Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:
     'oc expose svc/wordpress'
    Run 'oc status' to view your app.
[vagrant@origin-master ~]$
```
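The output mentions `start-build` for triggering a new build, for example after new commits land in the WordPress repository. A small sketch that starts a build and waits for it: `--wait` makes `oc start-build` block and exit non-zero if the build fails, while `oc logs -f bc/wordpress` (suggested in the output above) can stream the log in a second terminal. `rebuild_app` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: trigger a fresh S2I build of an app and wait for the result.
# OC defaults to "oc"; export OC="echo oc" for a dry run.
OC=${OC:-oc}

rebuild_app() {
  local app=$1
  # Start a new build from the build config that "oc new-app" created; --wait
  # blocks until the build finishes and returns non-zero if it failed.
  if ! $OC start-build "$app" --wait; then
    echo "build of $app failed; inspect it with: $OC logs bc/$app" >&2
    return 1
  fi
}
```

Running `rebuild_app wordpress` produces a new `wordpress:latest` image, which the deployment config then rolls out.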

Last but not least, we need to expose the WordPress service so it can be reached from outside the cluster:

```
[vagrant@origin-master ~]$ oc expose service wordpress
route "wordpress" exposed
[vagrant@origin-master ~]$
```
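To find the address OpenShift assigned to the new route without opening the web console, you can read the route's host name. A minimal sketch, assuming a plain HTTP route like the one `oc expose` creates by default; `app_url` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the URL of an exposed service's route.
# OC defaults to "oc"; it can point at another client binary.
OC=${OC:-oc}

app_url() {
  local route=$1 host
  # .spec.host holds the host name the router serves for this route,
  # typically <route>-<project>.<router subdomain>.
  host=$($OC get route "$route" -o jsonpath='{.spec.host}') || return 1
  printf 'http://%s/\n' "$host"
}
```

In this setup, `app_url wordpress` should print something like `http://wordpress-testing.paas.domain.com/`.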

Here is a short overview of how it looks in the OpenShift web console. You can see the deployed MySQL pod and the PHP pod that runs WordPress:

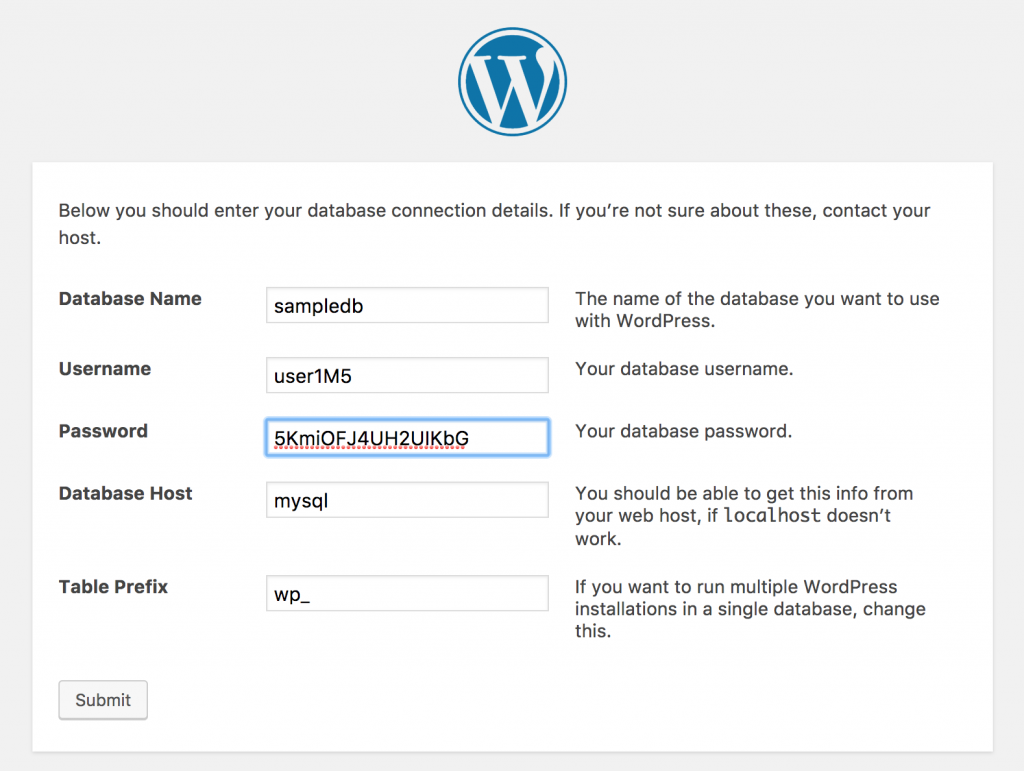

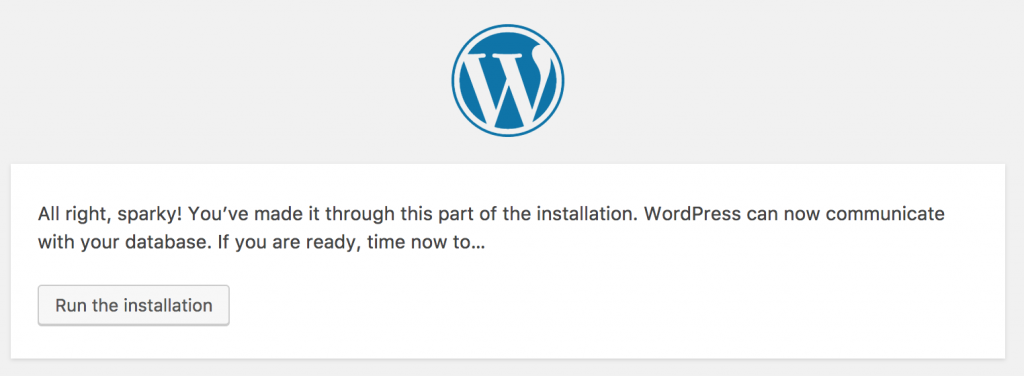

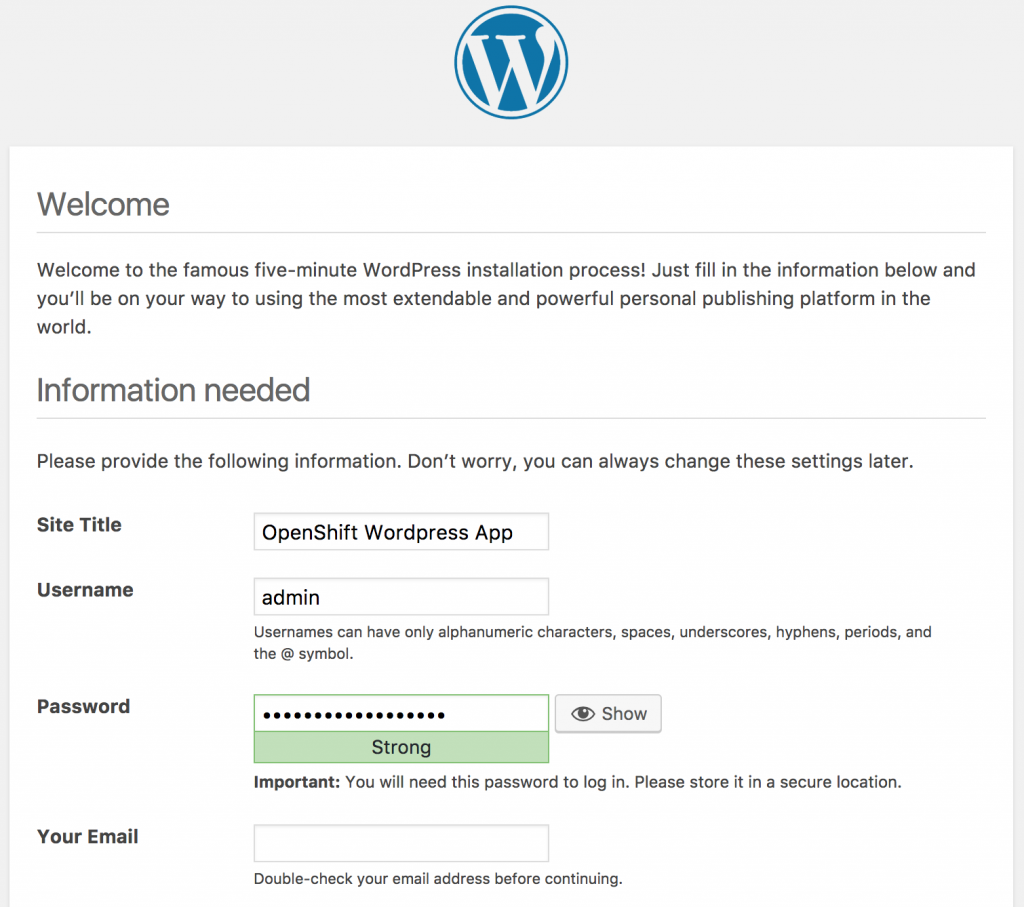

Next, open http://wordpress-testing.paas.domain.com/ in a browser. You will see the WordPress installation page, where you configure the database connection using the details OpenShift printed when the MySQL pod was created.

If I wanted a more permanent solution, I could customise the database connection settings here:

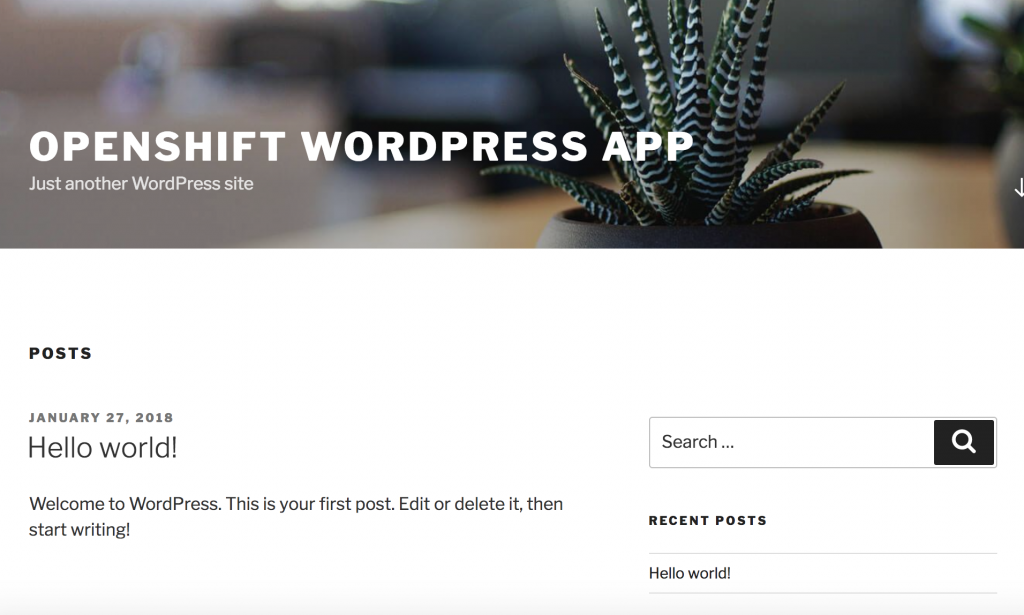
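If you no longer have the `oc new-app` output at hand, the credentials can be read back from the `mysql` secret the template created. This sketch assumes the template stores them under the keys `database-user` and `database-password` (check with `oc describe secret mysql`) and that GNU `base64 -d` is available; `secret_value` is a hypothetical helper name. In the WordPress form, the database host is the service name `mysql`, as in the connection URL `mysql://mysql:3306/` printed earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one decoded value from a secret.
# OC defaults to "oc"; it can point at another client binary.
OC=${OC:-oc}

secret_value() {
  local secret=$1 key=$2
  # Secret values are stored base64-encoded under .data; the go-template
  # "index" function looks up keys that contain dashes, such as database-user.
  $OC get secret "$secret" -o go-template="{{index .data \"$key\"}}" | base64 -d
}
```

For example, `secret_value mysql database-user` and `secret_value mysql database-password` should return the username and password for the installer.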

Et voilà, your WordPress site is now running on OpenShift.

This is a very simple example, but it took me less than a minute to deploy the web application. Also read my other post about deploying an OpenShift Origin cluster using Ansible.

Please share your feedback.