Throughout my career I have used various load balancing platforms, from commercial products like F5 or Citrix NetScaler to open source software like HAProxy. All of them do their job of balancing traffic between servers, but the biggest problem is scalability: yes, you can deploy more load balancers, but the configuration is statically bound to each appliance.

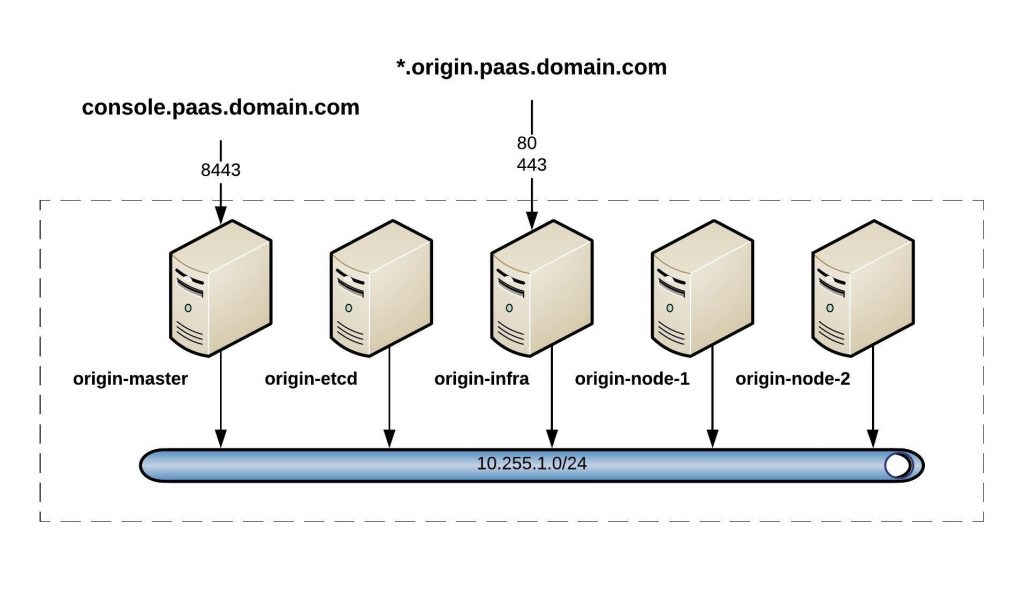

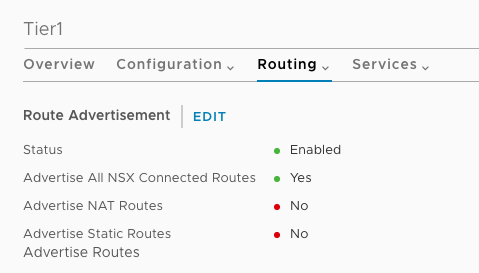

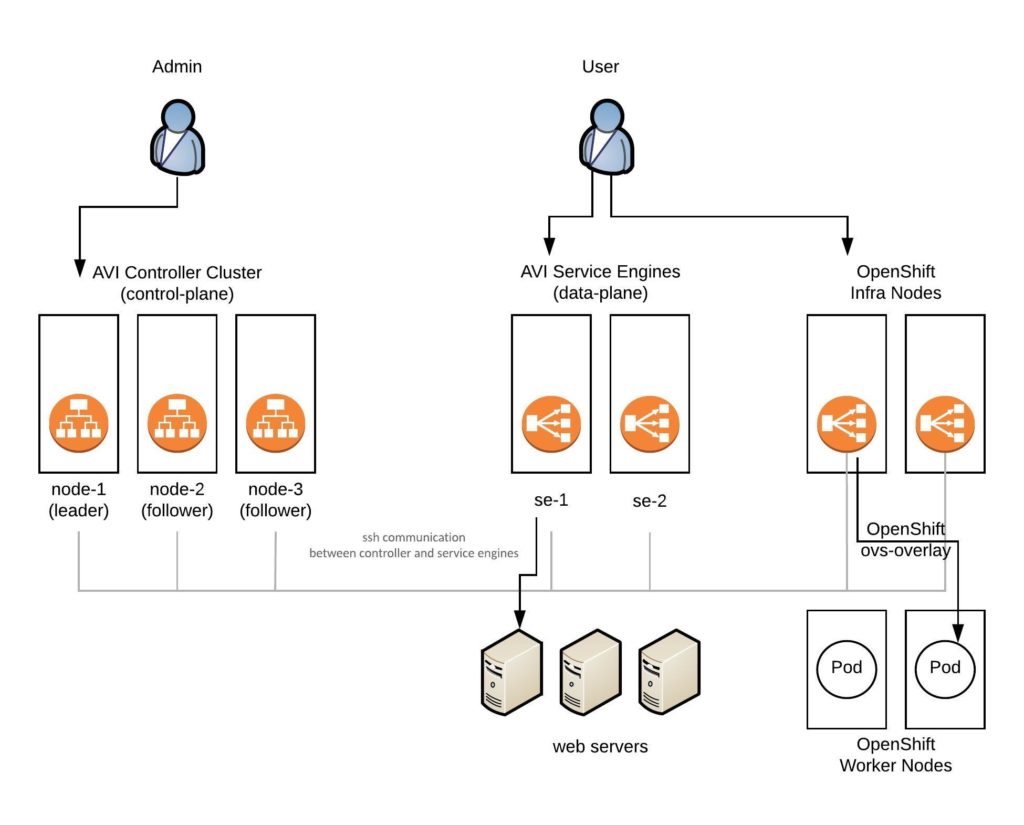

AVI Networks takes an interesting approach that moves away from the traditional idea of load balancing: it solves this problem by decoupling the control plane from the data plane. The load balancing Service Engines then essentially just forward traffic and can be scaled out more easily when needed. Another nice advantage is that the Service Engines are container-based and can run on almost any type of infrastructure, from bare metal and VMs to modern containerized platforms like Kubernetes or OpenShift.

All AVI components run as container images on any type of infrastructure or platform architecture, which makes them easy to deploy on-premises or in the cloud.

Service Engines on hypervisors or bare-metal servers need network cards that support Intel’s DPDK (Data Plane Development Kit) for better packet forwarding. Have a look at the AVI Linux server deployment guide: https://avinetworks.com/docs/latest/installing-avi-vantage-for-a-linux-server-cloud/
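Before deploying Service Engines on a hypervisor or bare-metal host, it is worth checking which kernel driver each network interface uses and comparing it with the supported NICs list in the DPDK documentation. The following is a minimal sketch under my own assumptions: a Linux host with sysfs, a hypothetical helper name `list_nic_drivers`, and an optional sysfs-root argument that exists only so the function can be tried against a fake directory tree. It only reports drivers; it does not decide by itself whether a card is DPDK-capable.

```shell
#!/bin/sh
# Hypothetical helper (not an AVI tool): print "<interface> <driver>" for
# each network interface that is backed by a device with a kernel driver.
# Assumes Linux sysfs; the optional argument overrides /sys/class/net.
list_nic_drivers() {
    net_root="${1:-/sys/class/net}"
    for iface_path in "$net_root"/*; do
        # Virtual interfaces such as lo or bridges have no device/driver link.
        [ -e "$iface_path/device/driver" ] || continue
        iface=$(basename "$iface_path")
        driver=$(basename "$(readlink -f "$iface_path/device/driver")")
        echo "$iface $driver"
    done
}

list_nic_drivers
```

If `ethtool` is installed, `ethtool -i eth0` shows the same driver name for a single interface.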

Below is a basic step-by-step guide to installing the AVI Vantage Controller and additional Service Engines. The installation is explained in detail in the AVI Knowledge Base: https://avinetworks.com/docs/latest/installing-avi-vantage-for-a-linux-server-cloud/

Here is the link to my Vagrant environment: https://github.com/berndonline/avi-lab-vagrant

Let’s start with the manual AVI Controller installation:

```
[vagrant@localhost ~]$ sudo ./avi_baremetal_setup.py
AviVantage Version Tag: 17.2.11-9014
Found disk with largest capacity at [/]
Welcome to Avi Initialization Script
Pre-requisites: This script assumes the below utilities are installed:
docker (yum -y install docker/apt-get install docker.io)
Supported Vers: OEL - 6.5,6.7,6.9,7.0,7.1,7.2,7.3,7.4 Centos/RHEL - 7.0,7.1,7.2,7.3,7.4, Ubuntu - 14.04,16.04
Do you want to run Avi Controller on this Host [y/n] y
Do you want to run Avi SE on this Host [y/n] n
Enter The Number Of Cores For Avi Controller. Range [4, 4] 4
Please Enter Memory (in GB) for Avi Controller. Range [12, 7]
Please enter directory path for Avi Controller Config (Default [/opt/avi/controller/data/])
Please enter disk size (in GB) for Avi Controller Config (Default [30G]) 10
Do you have separate partition for Avi Controller Metrics ? If yes, please enter directory path, else leave it blank
Do you have separate partition for Avi Controller Client Logs ? If yes, please enter directory path, else leave it blank
Please enter Controller IP (Default [10.255.1.232])
Enter the Controller SSH port. (Default [5098])
Enter the Controller system-internal portal port. (Default [8443])
AviVantage Version Tag: 17.2.11-9014
AviVantage Version Tag: 17.2.11-9014
Run SE : No
Run Controller : Yes
Controller Cores : 4
Memory(GB) : 7
Disk(GB) : 10
Controller IP : 10.255.1.232
Disabling Avi Services...
Loading Avi CONTROLLER Image. Please Wait..
Installation Successful. Starting Services..
[vagrant@localhost ~]$
[vagrant@localhost ~]$ sudo systemctl start avicontroller
```

Alternatively, run the installer as a single command in non-interactive mode:

```
[vagrant@localhost ~]$ sudo ./avi_baremetal_setup.py -c -cd 10 -cc 4 -cm 7 -i 10.255.1.232
AviVantage Version Tag: 17.2.11-9014
Found disk with largest capacity at [/]
AviVantage Version Tag: 17.2.11-9014
AviVantage Version Tag: 17.2.11-9014
Run SE : No
Run Controller : Yes
Controller Cores : 4
Memory(GB) : 7
Disk(GB) : 10
Controller IP : 10.255.1.232
Disabling Avi Services...
Loading Avi CONTROLLER Image. Please Wait..
Installation Successful. Starting Services..
[vagrant@localhost ~]$
[vagrant@localhost ~]$ sudo systemctl start avicontroller
```
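To make the non-interactive install repeatable, the command can be wrapped in a small script. This is a sketch, not part of the AVI tooling: the flag meanings are inferred from the summary the installer prints (`-c` run the Controller, `-cd` disk in GB, `-cc` cores, `-cm` memory in GB, `-i` Controller IP), and the `AVI_CTRL_*` variables, the `DRY_RUN` switch and the `install_controller` function are hypothetical names of my own.

```shell
#!/bin/sh
# Sketch: non-interactive AVI Controller install with the values used above.
# Flag meanings are inferred from the installer's summary output:
#   -c run Controller, -cd disk (GB), -cc cores, -cm memory (GB), -i Controller IP
# The AVI_CTRL_* variables and DRY_RUN are hypothetical, not installer options.
AVI_CTRL_DISK_GB="${AVI_CTRL_DISK_GB:-10}"
AVI_CTRL_CORES="${AVI_CTRL_CORES:-4}"
AVI_CTRL_MEM_GB="${AVI_CTRL_MEM_GB:-7}"
AVI_CTRL_IP="${AVI_CTRL_IP:-10.255.1.232}"

install_controller() {
    # Build the argument list once so a dry run prints exactly what would run.
    set -- ./avi_baremetal_setup.py -c \
        -cd "$AVI_CTRL_DISK_GB" -cc "$AVI_CTRL_CORES" \
        -cm "$AVI_CTRL_MEM_GB" -i "$AVI_CTRL_IP"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "sudo $*"
        return 0
    fi
    # Run the installer, then start the controller service as in the session above.
    sudo "$@" && sudo systemctl start avicontroller
}
```

After sourcing the script, `DRY_RUN=1 install_controller` only prints the command that would run, while `install_controller` runs the installer and then starts the `avicontroller` service.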

The installer deploys a container image on the server, which runs the AVI Controller:

```
[vagrant@localhost ~]$ sudo docker ps
CONTAINER ID   IMAGE                                 COMMAND                  CREATED              STATUS              PORTS                                                                                                                                    NAMES
c689435f74fd   avinetworks/controller:17.2.11-9014   "/opt/avi/scripts/do…"   About a minute ago   Up About a minute   0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp, 0.0.0.0:5054->5054/tcp, 0.0.0.0:5098->5098/tcp, 0.0.0.0:8443->8443/tcp, 0.0.0.0:161->161/udp   avicontroller
[vagrant@localhost ~]$
```
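Because the Controller takes a moment to come up after `systemctl start avicontroller`, a scripted install may want to wait for the container before moving on. A minimal sketch, assuming it runs as root (or as a user allowed to talk to Docker); `wait_for_container` and its retry defaults are hypothetical, while the container names `avicontroller` and `avise` come from the `docker ps` output in this post. It only confirms that the container is running, not that the Controller services inside it are ready.

```shell
#!/bin/sh
# Hypothetical helper: wait until a container with exactly the given name
# shows up in `docker ps` (i.e. is running), retrying a fixed number of times.
wait_for_container() {
    name="$1"
    attempts="${2:-30}"   # hypothetical default: 30 tries
    interval="${3:-10}"   # seconds between tries
    i=1
    while [ "$i" -le "$attempts" ]; do
        # --format '{{.Names}}' prints one running container name per line;
        # grep -qxF matches the whole line as a fixed string.
        if docker ps --format '{{.Names}}' | grep -qxF "$name"; then
            echo "$name is running"
            return 0
        fi
        sleep "$interval"
        i=$((i + 1))
    done
    echo "$name is not running after $attempts attempts" >&2
    return 1
}
```

For example, call `wait_for_container avicontroller` on the Controller host, or `wait_for_container avise` on a Service Engine host.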

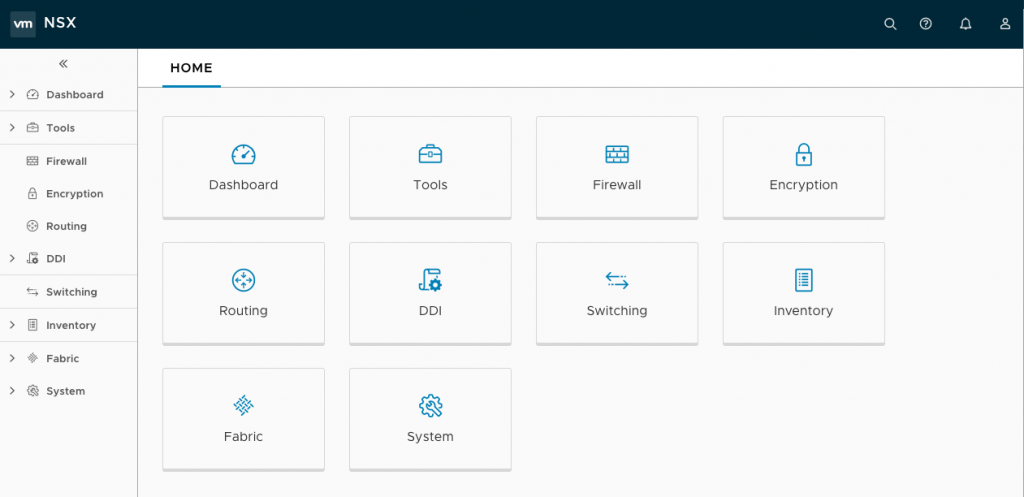

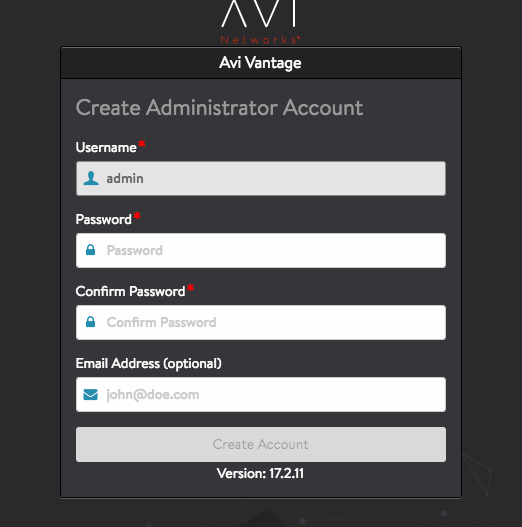
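To check from the command line that the web console answers before opening a browser, the console can be polled over HTTPS with `curl`. This is a sketch under stated assumptions: the console is served on port 443 of the Controller IP used in this lab (the `docker ps` output shows 443 published), a freshly installed Controller presents a self-signed certificate (hence `-k`), and any 2xx or 3xx status counts as reachable. The function name `wait_for_controller_ui` and its retry defaults are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: poll the Controller web console over HTTPS until it
# answers with a 2xx or 3xx status. -k skips certificate verification, on the
# assumption that a fresh Controller presents a self-signed certificate.
wait_for_controller_ui() {
    ip="${1:-10.255.1.232}"
    attempts="${2:-30}"
    interval="${3:-10}"
    i=1
    while [ "$i" -le "$attempts" ]; do
        # curl prints 000 via -w when it cannot connect; "|| true" keeps that value.
        code=$(curl -ks -o /dev/null --max-time 10 -w '%{http_code}' "https://$ip/" || true)
        case "$code" in
            2??|3??)
                echo "web console answered with HTTP $code"
                return 0
                ;;
        esac
        sleep "$interval"
        i=$((i + 1))
    done
    echo "no answer from https://$ip/ after $attempts attempts" >&2
    return 1
}
```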

Next, connect to the web console to change the password and finalise the initial configuration of DNS, NTP and SMTP:

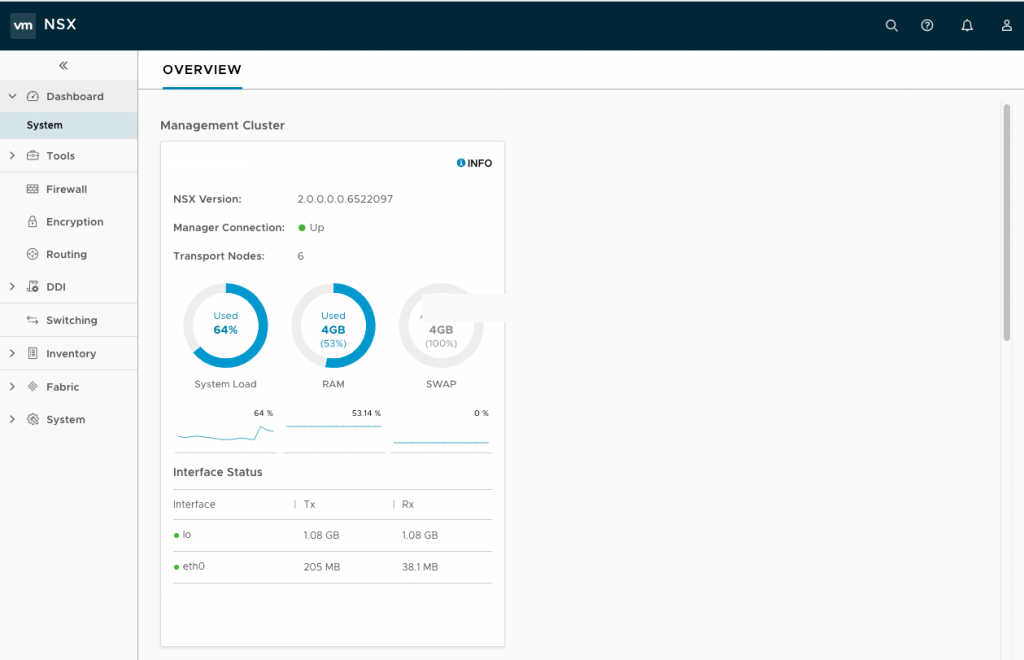

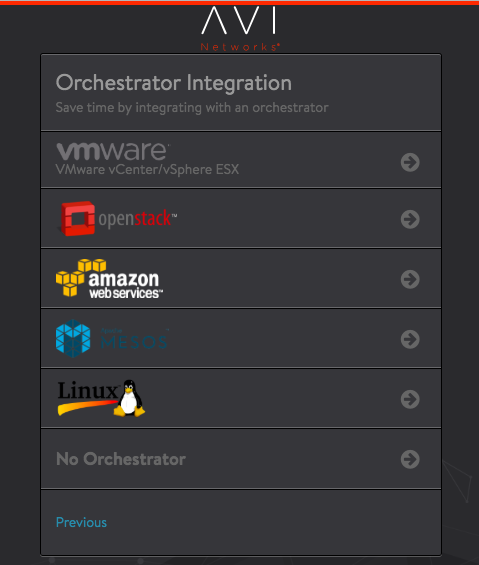

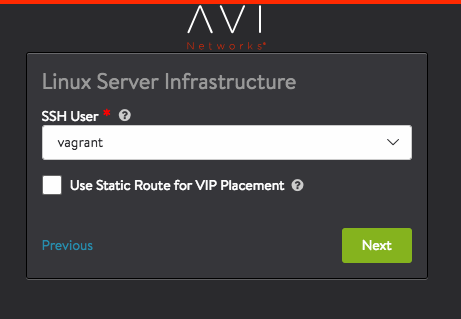

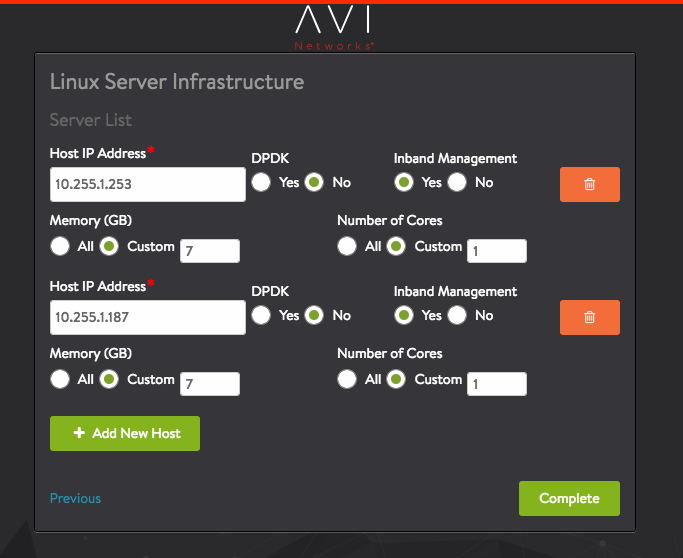

When you reach the Orchestrator Integration menu, enter the details the Controller needs to install additional Service Engines:

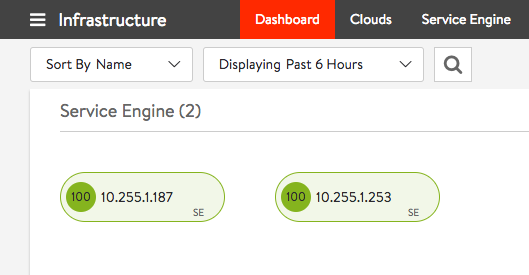

The AVI Controller then installs the specified Service Engines in the background; once the installation has completed, they appear automatically under the Infrastructure menu.

As with the AVI Controller, the Service Engines run as a container image:

```
[vagrant@localhost ~]$ sudo docker ps
CONTAINER ID   IMAGE                         COMMAND                  CREATED          STATUS          PORTS     NAMES
2c6b207ed376   avinetworks/se:17.2.11-9014   "/opt/avi/scripts/do…"   51 seconds ago   Up 50 seconds             avise
[vagrant@localhost ~]$
```
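In this lab both the Controller and the Service Engine images carry the tag `17.2.11-9014`. To compare versions across hosts without reading the full `docker ps` table, the small sketch below prints only the tag of the image a container was started from. `container_image_tag` is a hypothetical helper name, and it assumes the image reference ends in an explicit `:tag`.

```shell
#!/bin/sh
# Hypothetical helper: print the tag of the image a container was started from,
# e.g. "17.2.11-9014" for avinetworks/se:17.2.11-9014.
# Assumes the image reference ends in an explicit ":tag" (no digest-only refs).
container_image_tag() {
    image=$(docker inspect --format '{{.Config.Image}}' "$1") || return 1
    # Strip everything up to and including the last ":" to keep only the tag.
    echo "${image##*:}"
}
```

On the Service Engine host shown above, `container_image_tag avise` should print `17.2.11-9014`, and `container_image_tag avicontroller` on the Controller host should print the Controller's tag for comparison.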

In the next article I will cover deploying the AVI Controller and Service Engines automatically with Ansible, and look into how to integrate AVI with OpenShift.

Please share your feedback and leave a comment.