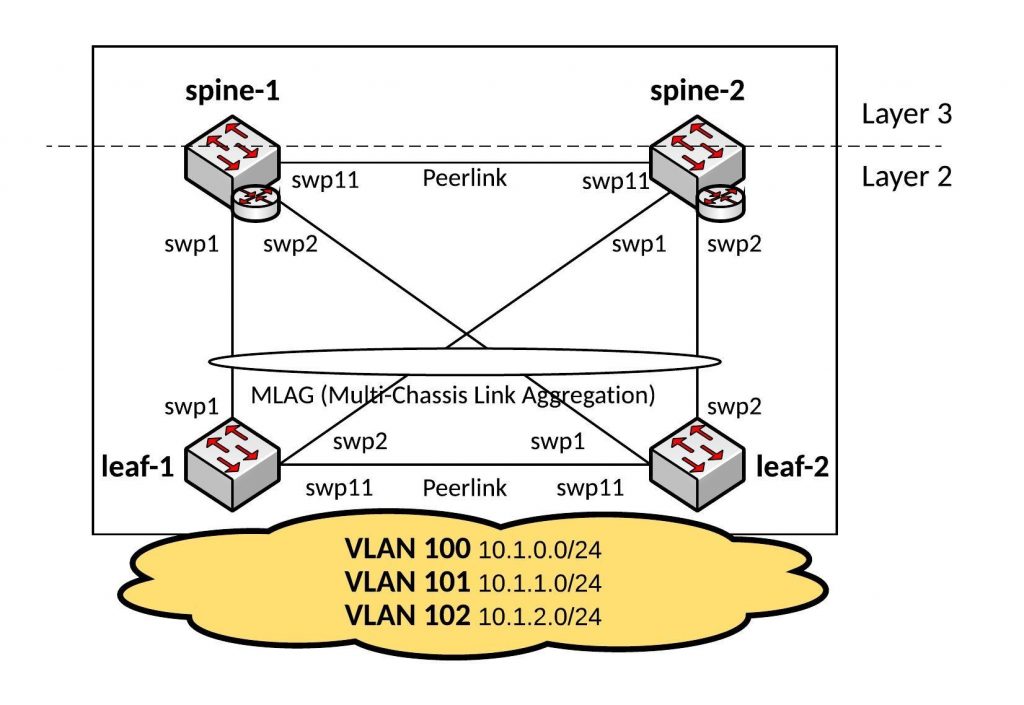

Here is a basic Ansible playbook for a Cumulus Linux lab that I use for testing. I used a similar spine-and-leaf configuration in my recent data centre redesign. The playbook includes one VRF, and all SVIs are members of that VRF.

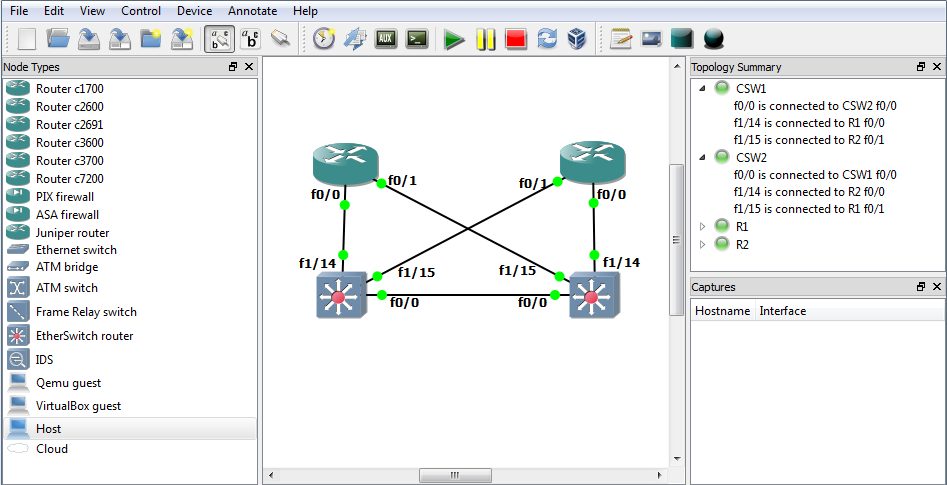

I use the Cumulus VX appliance under GNS3, which you can get for free from Cumulus: https://cumulusnetworks.com/products/cumulus-vx/

The first step is to configure the management interface of the Cumulus switches: edit /etc/network/interfaces and then run "ifreload -a" to apply the config changes:

    auto eth0
    iface eth0
        address 192.168.100.20x/24
        gateway 192.168.100.2

Hosts file:

    [spine]
    spine-1
    spine-2

    [leaf]
    leaf-1
    leaf-2

Before you start, you should push your ssh keys and set the hostname to prepare the switches.
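
The preparation step can itself be done with Ansible. The play below is a minimal sketch and not part of the original lab: the file name prepare.yml, the variable public_key_file and the key path are assumptions, and it relies on the inventory names matching the hostnames you want. On newer Ansible releases the authorized_key module lives in the ansible.posix collection.

```yaml
---
# prepare.yml: hypothetical preparation play, not part of the original post.
- hosts: all
  remote_user: cumulus
  gather_facts: no
  become: yes
  vars:
    ansible_become_pass: "CumulusLinux!"
    # Assumption: the public key to push is the default RSA key of the user running Ansible.
    public_key_file: "{{ lookup('env', 'HOME') }}/.ssh/id_rsa.pub"
  tasks:
    - name: install the control host's public key for the cumulus user
      authorized_key:
        user: cumulus
        key: "{{ lookup('file', public_key_file) }}"
        state: present

    - name: set the hostname to the inventory name
      hostname:
        name: "{{ inventory_hostname }}"
```

Because no key is installed yet, the first run would need password authentication, for example "ansible-playbook prepare.yml -i hosts --ask-pass".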

Now we are ready to deploy the interface configuration for the Layer 2 fabric. Below is the interfaces.yml file:

    ---
    - hosts: all
      remote_user: cumulus
      gather_facts: no
      become: yes
      vars:
        ansible_become_pass: "CumulusLinux!"
        spine_interfaces:
          - { clag: bond1, desc: downlink-leaf, clagid: 1, port: swp1 swp2 }
        spine_bridge_ports: "peerlink bond1"
        bridge_vlans: "100-199"
        spine_vrf: "vrf-prod"
        spine_bridge:
          - { desc: web, vlan: 100, address: "{{ vlan100_address }}", address_virtual: "00:00:5e:00:01:00 10.1.0.254/24", vrf: vrf-prod }
          - { desc: app, vlan: 101, address: "{{ vlan101_address }}", address_virtual: "00:00:5e:00:01:01 10.1.1.254/24", vrf: vrf-prod }
          - { desc: db, vlan: 102, address: "{{ vlan102_address }}", address_virtual: "00:00:5e:00:01:02 10.1.2.254/24", vrf: vrf-prod }
        leaf_interfaces:
          - { clag: bond1, desc: uplink-spine, clagid: 1, port: swp1 swp2 }
        leaf_access_interfaces:
          - { desc: web-server, vlan: 100, port: swp3 }
          - { desc: app-server, vlan: 101, port: swp4 }
          - { desc: db-server, vlan: 102, port: swp5 }
        leaf_bridge_ports: "bond1 swp3 swp4 swp5"
      handlers:
        - name: ifreload
          command: ifreload -a
      tasks:
        - name: deploys spine interface configuration
          template: src=templates/spine_interfaces.j2 dest=/etc/network/interfaces
          when: "'spine' in group_names"
          notify: ifreload
        - name: deploys leaf interface configuration
          template: src=templates/leaf_interfaces.j2 dest=/etc/network/interfaces
          when: "'leaf' in group_names"
          notify: ifreload
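
The playbook references vlan100_address, vlan101_address and vlan102_address without defining them. Each spine needs its own SVI address, so these are presumably per-host variables. A minimal sketch of what such a file might look like for spine-1 follows. The file location is an assumption; the addresses are the ones that appear in the "net show int" output for spine-1 later in this post. The values for spine-2 are not shown in the post, so they are left out here.

```yaml
---
# host_vars/spine-1.yml
# Assumed location: the post does not show where these variables are defined.
# Addresses taken from the "net show int" output for spine-1.
vlan100_address: "10.1.0.252/24"
vlan101_address: "10.1.1.252/24"
vlan102_address: "10.1.2.252/24"
```

Keeping the per-switch addresses in host variables lets the same spine_bridge list and the same template serve both spines, with only the physical SVI address differing per host.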

I use Jinja2 templates for the interfaces configuration.
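
The templates themselves are not shown in this post. To give a rough idea of the kind of Jinja2 loop a leaf template might contain, the sketch below renders access-port stanzas inline through the copy module's content parameter instead of a separate .j2 file. The play name, the scratch path and the exact stanza layout are assumptions; alias and bridge-access are standard ifupdown2 attributes, but the real template may well differ.

```yaml
---
# preview.yml: hypothetical play, only to illustrate a Jinja2 loop over the playbook variables.
- hosts: leaf
  remote_user: cumulus
  gather_facts: no
  vars:
    leaf_access_interfaces:
      - { desc: web-server, vlan: 100, port: swp3 }
      - { desc: app-server, vlan: 101, port: swp4 }
      - { desc: db-server, vlan: 102, port: swp5 }
  tasks:
    - name: render access-port stanzas to a scratch file
      copy:
        # Assumption: a scratch path, so the live /etc/network/interfaces is not touched.
        dest: /tmp/leaf_access_ports.preview
        content: |
          {% for item in leaf_access_interfaces %}
          auto {{ item.port }}
          iface {{ item.port }}
              alias {{ item.desc }}
              bridge-access {{ item.vlan }}

          {% endfor %}
```

In a real template file the same loop would sit next to the bond, bridge and VRF stanzas, driven by the other variables defined in the playbook.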

Here is the output from the Ansible playbook, which takes only a few seconds to run:

    [root@ansible cumulus]$ ansible-playbook interfaces.yml -i hosts

    PLAY [all] *********************************************************************

    TASK [deploys spine interface configuration] ***********************************
    skipping: [leaf-2]
    skipping: [leaf-1]
    changed: [spine-2]
    changed: [spine-1]

    TASK [deploys leaf interface configuration] ************************************
    skipping: [spine-1]
    skipping: [spine-2]
    changed: [leaf-1]
    changed: [leaf-2]

    RUNNING HANDLER [ifreload] *****************************************************
    changed: [leaf-2]
    changed: [leaf-1]
    changed: [spine-2]
    changed: [spine-1]

    PLAY RECAP *********************************************************************
    leaf-1                     : ok=2    changed=2    unreachable=0    failed=0
    leaf-2                     : ok=2    changed=2    unreachable=0    failed=0
    spine-1                    : ok=2    changed=2    unreachable=0    failed=0
    spine-2                    : ok=2    changed=2    unreachable=0    failed=0

    [root@ansible cumulus]$

Let's quickly verify the configuration:

    cumulus@spine-1:~$ net show int
        Name           Master    Speed    MTU    Mode           Remote Host    Remote Port    Summary
    --  -------------  --------  -------  -----  -------------  -------------  -------------  ---------------------------------
    UP  lo             None      N/A      65536  Loopback                                     IP: 127.0.0.1/8, ::1/128
    UP  eth0           None      1G       1500   Mgmt           cumulus        eth0           IP: 192.168.100.205/24
    UP  bond1          bridge    2G       1500   Bond/Trunk                                   Bond Members: swp1(UP), swp2(UP)
    UP  bridge         None      N/A      1500   Bridge/L2                                    Untagged Members: bond1, peerlink
    UP  bridge-100-v0  vrf-prod  N/A      1500   Interface/L3                                 IP: 10.1.0.254/24
    UP  bridge-101-v0  vrf-prod  N/A      1500   Interface/L3                                 IP: 10.1.1.254/24
    UP  bridge-102-v0  vrf-prod  N/A      1500   Interface/L3                                 IP: 10.1.2.254/24
    UP  bridge.100     vrf-prod  N/A      1500   SVI/L3                                       IP: 10.1.0.252/24
    UP  bridge.101     vrf-prod  N/A      1500   SVI/L3                                       IP: 10.1.1.252/24
    UP  bridge.102     vrf-prod  N/A      1500   SVI/L3                                       IP: 10.1.2.252/24
    UP  peerlink       bridge    1G       1500   Bond/Trunk                                   Bond Members: swp11(UP)
    UP  peerlink.4094  None      1G       1500   SubInt/L3                                    IP: 169.254.1.1/30
    UP  vrf-prod       None      N/A      65536  NotConfigured
    cumulus@spine-1:~$

    cumulus@spine-1:~$ net show lldp
    LocalPort    Speed    Mode        RemotePort    RemoteHost    Summary
    -----------  -------  ----------  ------------  ------------  ----------------------
    eth0         1G       Mgmt        eth0          cumulus       IP: 192.168.100.205/24
                          ====        eth0          spine-2
                          ====        eth0          leaf-1
                          ====        eth0          leaf-2
    swp1         1G       BondMember  swp1          leaf-1        Master: bond1(UP)
    swp2         1G       BondMember  swp2          leaf-2        Master: bond1(UP)
    swp11        1G       BondMember  swp11         spine-2       Master: peerlink(UP)
    cumulus@spine-1:~$

    cumulus@leaf-1:~$ net show int
        Name           Master    Speed    MTU    Mode        Remote Host    Remote Port    Summary
    --  -------------  --------  -------  -----  ----------  -------------  -------------  --------------------------------
    UP  lo             None      N/A      65536  Loopback                                  IP: 127.0.0.1/8, ::1/128
    UP  eth0           None      1G       1500   Mgmt        cumulus        eth0           IP: 192.168.100.207/24
    UP  swp3           bridge    1G       1500   Access/L2                                 Untagged VLAN: 100
    UP  swp4           bridge    1G       1500   Access/L2                                 Untagged VLAN: 101
    UP  swp5           bridge    1G       1500   Access/L2                                 Untagged VLAN: 102
    UP  bond1          bridge    2G       1500   Bond/Trunk                                Bond Members: swp1(UP), swp2(UP)
    UP  bridge         None      N/A      1500   Bridge/L2                                 Untagged Members: bond1, swp3-5
    UP  peerlink       None      1G       1500   Bond                                      Bond Members: swp11(UP)
    UP  peerlink.4093  None      1G       1500   SubInt/L3                                 IP: 169.254.1.1/30

    cumulus@leaf-1:~$ net show lldp
    LocalPort    Speed    Mode        RemotePort    RemoteHost    Summary
    -----------  -------  ----------  ------------  ------------  ----------------------
    eth0         1G       Mgmt        eth0          cumulus       IP: 192.168.100.207/24
                          ====        eth0          spine-2
                          ====        eth0          spine-1
                          ====        eth0          leaf-2
    swp1         1G       BondMember  swp1          spine-1       Master: bond1(UP)
    swp2         1G       BondMember  swp1          spine-2       Master: bond1(UP)
    swp11        1G       BondMember  swp11         leaf-2        Master: peerlink(UP)
    cumulus@leaf-1:~$

As you can see, the configuration is correctly deployed and you can start testing.
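
The manual check can also be scripted. The play below is a minimal sketch under the assumption that a simple text match is good enough for a lab: the file name verify.yml and the variable net_show_int are hypothetical, and the assertions only confirm that the names appear in the output, not that the interfaces are UP.

```yaml
---
# verify.yml: hypothetical check play, not part of the original post.
- hosts: spine
  remote_user: cumulus
  gather_facts: no
  tasks:
    - name: collect the interface summary
      command: net show interface
      register: net_show_int
      changed_when: false

    - name: check that the bond and the VRF show up in the output
      assert:
        that:
          - "'bond1' in net_show_int.stdout"
          - "'vrf-prod' in net_show_int.stdout"
```

Setting changed_when to false keeps the read-only command from being reported as a change in the play recap.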

The configuration for a real datacentre fabric is, of course, more complex (multiple VRFs, SVIs and complex routing), but with Ansible you can quickly deploy and manage hundreds of switches.

In one of the next posts, I will write an Ansible Playbook for a Layer 3 datacentre Fabric configuration using BGP and ECMP routing on Quagga.

Read my new post about an Ansible Playbook for Cumulus Linux BGP IP-Fabric and Cumulus NetQ Validation.