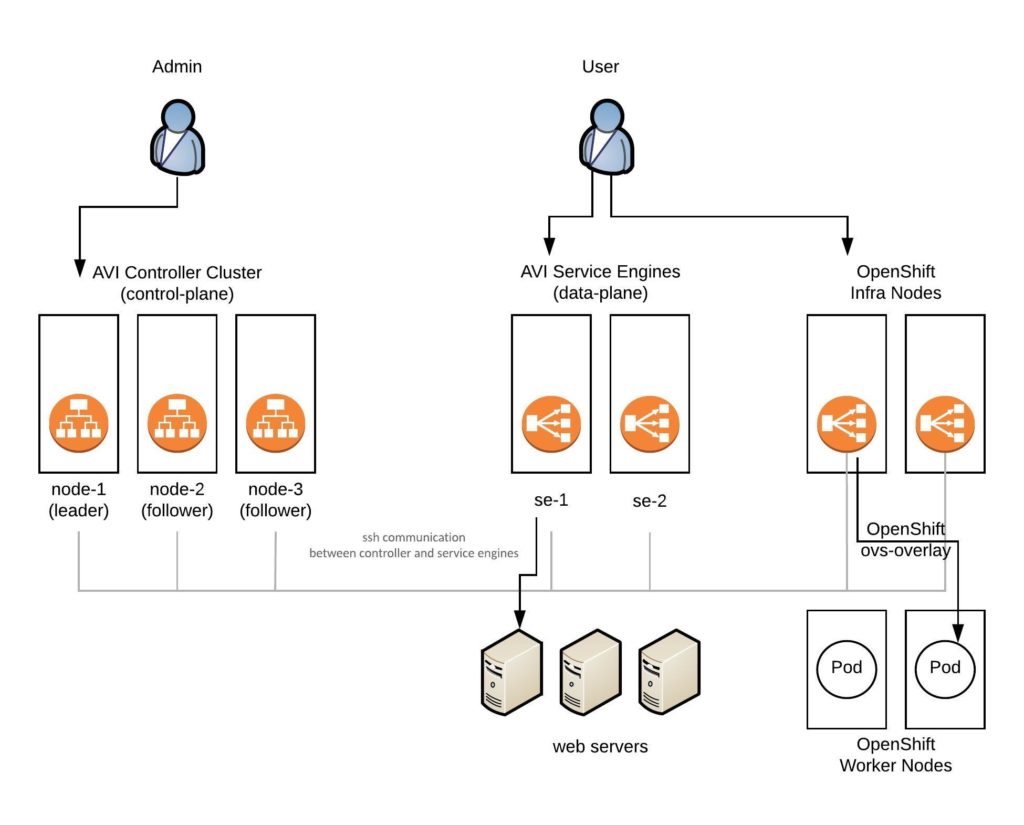

After my first blog post about Software defined Load Balancing with AVI Networks, here is how to automatically deploy the AVI controller and service engines with Ansible.

Here are the links to my repositories: the AVI Vagrant environment (https://github.com/berndonline/avi-lab-vagrant) and the AVI Ansible playbooks (https://github.com/berndonline/avi-lab-provision).

Make sure that your Vagrant environment is running:

```
berndonline@lab:~/avi-lab-vagrant$ vagrant status
Current machine states:

avi-controller-1          running (libvirt)
avi-controller-2          running (libvirt)
avi-controller-3          running (libvirt)
avi-se-1                  running (libvirt)
avi-se-2                  running (libvirt)

This environment represents multiple VMs. The VMs are all listed
above with their current state. For more information about a specific
VM, run `vagrant status NAME`.
```
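If you prefer to script this check, here is a minimal Python sketch that parses the human-readable `vagrant status` output shown above and lists any machine that is not running. The function names are my own, and the regular expression assumes the `<name> <state> (<provider>)` line layout seen in this output; for anything beyond a lab, Vagrant's `--machine-readable` output is a sturdier thing to parse.

```python
import re
import subprocess

# Matches machine lines such as "avi-controller-1    running (libvirt)".
# Assumption: the human-readable `vagrant status` layout shown above.
MACHINE_LINE = re.compile(r"^(?P<name>\S+)\s+(?P<state>.+?)\s+\((?P<provider>[^)]+)\)$")


def parse_vagrant_status(output: str) -> dict[str, str]:
    """Map each machine name to its state, e.g. {"avi-se-1": "running"}."""
    states = {}
    for line in output.splitlines():
        match = MACHINE_LINE.match(line.strip())
        if match:
            states[match.group("name")] = match.group("state")
    return states


def not_running(states: dict[str, str]) -> list[str]:
    """Names of machines whose state is anything other than "running"."""
    return sorted(name for name, state in states.items() if state != "running")


def check_lab(vagrant_dir: str) -> list[str]:
    """Run `vagrant status` in the lab directory and return machines not running."""
    result = subprocess.run(["vagrant", "status"], cwd=vagrant_dir,
                            capture_output=True, text=True, check=True)
    return not_running(parse_vagrant_status(result.stdout))
```

`check_lab("avi-lab-vagrant")` returns an empty list once all five VMs are up, so it can gate the playbook runs in a wrapper script.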

I needed to modify the ansible.cfg to integrate a filter plugin:

```ini
[defaults]
inventory = ./.vagrant/provisioners/ansible/inventory/vagrant_ansible_inventory
host_key_checking=False
library = /home/berndonline/avi-lab-provision/lib
filter_plugins = /home/berndonline/avi-lab-provision/lib/filter_plugins
```
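The filter plugin itself is not shown in this post. For readers who have not written one: an Ansible filter plugin is a Python file in the `filter_plugins` path that defines a `FilterModule` class whose `filters()` method maps filter names to functions. Below is a hypothetical example (the `avi_ip_list` filter and the file name are mine, not the plugin shipped in avi-lab-provision) that turns plain IP strings into `addr`/`type` objects; that object shape is my assumption about the Avi API and should be verified against your controller's API documentation.

```python
# Hypothetical file: lib/filter_plugins/avi_filters.py
import ipaddress


def avi_ip_list(addresses):
    """Turn plain IP strings into {"addr": ..., "type": ...} dicts.

    Assumption: mirrors the addr/type shape of Avi IP address objects.
    Illustration only, not the filter used in avi-lab-provision.
    """
    result = []
    for address in addresses:
        version = ipaddress.ip_address(address).version  # raises ValueError on bad input
        result.append({"addr": address, "type": "V4" if version == 4 else "V6"})
    return result


class FilterModule(object):
    """Class Ansible looks for in every file on the filter_plugins path."""

    def filters(self):
        # Maps the Jinja2 filter name to the Python callable.
        return {"avi_ip_list": avi_ip_list}
```

In a task or template it would be used as `{{ dns_servers | avi_ip_list }}`, where `dns_servers` is a hypothetical list variable.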

The controller installation is actually very simple: I took it from the official AVI Ansible role and added a second role that waits until the controller nodes have booted successfully:

```yaml
---
- hosts: avi-controller
  user: '{{ ansible_ssh_user }}'
  gather_facts: "true"
  roles:
    - {role: ansible-role-avicontroller, become: true}
    - {role: avi-post-controller, become: false}
```
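The `FAILED - RETRYING: ... (N retries left)` lines in the playbook output below are what Ansible prints while a task using `until`, `retries` and `delay` keeps polling, which is presumably how the avi-post-controller role waits for the nodes. Outside Ansible, a similar polling pattern can be sketched in Python as follows; `is_up` is a hypothetical readiness probe (for example a request to a controller's web port), and the injectable `sleep` only exists to keep the sketch testable.

```python
import time
from typing import Callable


def wait_until(is_up: Callable[[], bool], retries: int = 30, delay: float = 10.0,
               sleep: Callable[[float], None] = time.sleep) -> int:
    """Poll is_up() until it returns True.

    Makes one initial attempt plus up to `retries` retries, pausing `delay`
    seconds between attempts. Returns the number of attempts used and
    raises TimeoutError if every attempt fails.
    """
    for attempt in range(1, retries + 2):
        if is_up():
            return attempt
        if attempt <= retries:  # no pause after the final attempt
            sleep(delay)
    raise TimeoutError(f"not ready after {retries + 1} attempts")
```

Keeping the probe as a plain callable means the same helper works for the controller boot check and, later, for waiting on the cluster.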

There’s one important thing to know before we run the playbook. With an AVI subscription you get custom container images with a predefined default password, which makes it much easier to fully automate the cluster setup. You will find the default password variable in group_vars/all.yml, which is also where you set whether the password should be changed.

Let’s execute the Ansible playbook; it takes a while for the three nodes to boot up:

```
berndonline@lab:~/avi-lab-vagrant$ ansible-playbook ../avi-lab-provision/playbooks/avi-controller-install.yml

PLAY [avi-controller] *********************************************************************************************************************************************

TASK [Gathering Facts] ********************************************************************************************************************************************
ok: [avi-controller-3]
ok: [avi-controller-2]
ok: [avi-controller-1]

TASK [ansible-role-avicontroller : Avi Controller | Deployment] ***************************************************************************************************
included: /home/berndonline/avi-lab-provision/roles/ansible-role-avicontroller/tasks/docker/main.yml for avi-controller-1, avi-controller-2, avi-controller-3

TASK [ansible-role-avicontroller : Avi Controller | Services | systemd | Check if Avi Controller installed] *******************************************************
included: /home/berndonline/avi-lab-provision/roles/ansible-role-avicontroller/tasks/docker/services/systemd/check.yml for avi-controller-1, avi-controller-2, avi-controller-3

TASK [ansible-role-avicontroller : Avi Controller | Check if Avi Controller installed] ****************************************************************************
ok: [avi-controller-3]
ok: [avi-controller-2]
ok: [avi-controller-1]

TASK [ansible-role-avicontroller : Avi Controller | Services | init.d | Check if Avi Controller installed] ********************************************************
skipping: [avi-controller-1]
skipping: [avi-controller-2]
skipping: [avi-controller-3]

TASK [ansible-role-avicontroller : Avi Controller | Check minimum requirements] ***********************************************************************************
included: /home/berndonline/avi-lab-provision/roles/ansible-role-avicontroller/tasks/docker/requirements.yml for avi-controller-1, avi-controller-2, avi-controller-3

TASK [ansible-role-avicontroller : Avi Controller | Requirements | Check for docker] ******************************************************************************
ok: [avi-controller-2]
ok: [avi-controller-3]
ok: [avi-controller-1]

...

TASK [avi-post-controller : wait for cluster nodes up] ************************************************************************************************************
FAILED - RETRYING: wait for cluster nodes up (30 retries left).
FAILED - RETRYING: wait for cluster nodes up (30 retries left).
FAILED - RETRYING: wait for cluster nodes up (30 retries left).
...
FAILED - RETRYING: wait for cluster nodes up (7 retries left).
FAILED - RETRYING: wait for cluster nodes up (8 retries left).
FAILED - RETRYING: wait for cluster nodes up (7 retries left).
FAILED - RETRYING: wait for cluster nodes up (7 retries left).
ok: [avi-controller-2]
ok: [avi-controller-3]
ok: [avi-controller-1]

PLAY RECAP ********************************************************************************************************************************************************
avi-controller-1 : ok=36 changed=6 unreachable=0 failed=0
avi-controller-2 : ok=35 changed=5 unreachable=0 failed=0
avi-controller-3 : ok=35 changed=5 unreachable=0 failed=0

berndonline@lab:~/avi-lab-vagrant$
```
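When you run these playbooks from a script, the PLAY RECAP above is the quickest summary: every host should report `unreachable=0` and `failed=0`. Here is a minimal Python sketch that parses recap lines like these; the function names are hypothetical and the regular expression assumes the `host : key=value ...` layout printed by Ansible's default output. The non-zero exit status of `ansible-playbook` remains the more robust failure signal; this is only for reporting which hosts went wrong.

```python
import re

# Matches recap lines such as "avi-controller-1 : ok=36 changed=6 unreachable=0 failed=0".
RECAP_LINE = re.compile(r"^(?P<host>\S+)\s*:\s*(?P<counters>(?:\w+=\d+\s*)+)$")


def parse_play_recap(output: str) -> dict[str, dict[str, int]]:
    """Return {host: {counter: value}} for every recap line in the output."""
    recap = {}
    for line in output.splitlines():
        match = RECAP_LINE.match(line.strip())
        if match:
            pairs = (item.split("=") for item in match.group("counters").split())
            recap[match.group("host")] = {key: int(value) for key, value in pairs}
    return recap


def unhealthy_hosts(recap: dict[str, dict[str, int]]) -> list[str]:
    """Hosts that reported any failed or unreachable tasks."""
    return sorted(host for host, counters in recap.items()
                  if counters.get("failed", 0) or counters.get("unreachable", 0))
```

Lines such as `ok: [avi-controller-1]` or `TASK [...]` do not match the pattern, so the whole playbook output can be passed in unchanged.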

We are not finished yet: we still need to set basic settings like NTP and DNS and configure the three-node AVI controller cluster, which we do with another playbook:

```yaml
---
- hosts: localhost
  connection: local
  roles:
    - {role: avi-cluster-setup, become: false}
    - {role: avi-change-password, become: false, when: avi_change_password == true}
```

The first role uses the REST API to make the configuration changes and requires the AVI Ansible SDK role (ansible-role-avisdk). This is why the custom subscription images are so useful: you already know the default password. Otherwise you would need to modify the main setup.json file.
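The avi-cluster-setup role's tasks are not reproduced in this post. To give an idea of what such a REST-based setup sends, here is a Python sketch that builds the two JSON bodies involved: DNS/NTP settings for the controllers and the three-node cluster definition. The function names and every field name (`dns_configuration.server_list`, `ntp_configuration.ntp_servers`, `nodes[].ip`) are my assumptions based on the Avi object model, not copied from the role, so check them against your controller's API documentation before sending anything.

```python
# Assumed Avi field names throughout; verify against your controller's API docs.


def ip_obj(address: str) -> dict:
    """Assumed Avi IP address shape; this sketch only handles IPv4 addresses."""
    return {"addr": address, "type": "V4"}


def system_config_patch(dns_servers: list[str], ntp_servers: list[str]) -> dict:
    """DNS and NTP settings for the controller nodes."""
    return {
        "dns_configuration": {"server_list": [ip_obj(s) for s in dns_servers]},
        "ntp_configuration": {"ntp_servers": [{"server": ip_obj(s)} for s in ntp_servers]},
    }


def cluster_config(name: str, nodes: dict[str, str]) -> dict:
    """Cluster definition built from a {node name: node IP} mapping."""
    return {
        "name": name,
        "nodes": [{"name": node, "ip": ip_obj(ip)} for node, ip in nodes.items()],
    }
```

Building the bodies as plain dictionaries keeps them easy to inspect with `json.dumps(...)` before handing them to whatever client (avisdk, Ansible modules, or plain HTTP) actually talks to the controller.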

Let’s run the AVI cluster setup playbook:

```
berndonline@lab:~/avi-lab-vagrant$ ansible-playbook ../avi-lab-provision/playbooks/avi-cluster-setup.yml

PLAY [localhost] **************************************************************************************************************************************************

TASK [Gathering Facts] ********************************************************************************************************************************************
ok: [localhost]

TASK [ansible-role-avisdk : Checking if avisdk python library is present] *****************************************************************************************
ok: [localhost] => {
    "msg": "Please make sure avisdk is installed via pip. 'pip install avisdk --upgrade'"
}

TASK [avi-cluster-setup : set AVI dns and ntp facts] **************************************************************************************************************
ok: [localhost]

TASK [avi-cluster-setup : set AVI cluster facts] ******************************************************************************************************************
ok: [localhost]

TASK [avi-cluster-setup : configure ntp and dns controller nodes] *************************************************************************************************
changed: [localhost]

TASK [avi-cluster-setup : configure AVI cluster] ******************************************************************************************************************
changed: [localhost]

TASK [avi-cluster-setup : wait for cluster become active] *********************************************************************************************************
FAILED - RETRYING: wait for cluster become active (30 retries left).
FAILED - RETRYING: wait for cluster become active (29 retries left).
FAILED - RETRYING: wait for cluster become active (28 retries left).
...
FAILED - RETRYING: wait for cluster become active (14 retries left).
FAILED - RETRYING: wait for cluster become active (13 retries left).
FAILED - RETRYING: wait for cluster become active (12 retries left).
ok: [localhost]

TASK [avi-change-password : change default admin password on cluster build when subscription] *********************************************************************
skipping: [localhost]

PLAY RECAP ********************************************************************************************************************************************************
localhost : ok=7 changed=2 unreachable=0 failed=0

berndonline@lab:~/avi-lab-vagrant$
```
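The "wait for cluster become active" task keeps polling the controller until the cluster reports that it is up. As a rough illustration, this Python sketch checks a cluster runtime response body; the response shape (`cluster_state.state`) and the `CLUSTER_UP_HA_ACTIVE` value are my assumptions about the Avi API, not taken from the avi-cluster-setup role, so confirm them against what your controller actually returns.

```python
import json
from typing import Optional

# Assumption: the state a healthy three-node cluster reports; verify on your controller.
HA_ACTIVE = "CLUSTER_UP_HA_ACTIVE"


def cluster_state(runtime: dict) -> Optional[str]:
    """Extract the overall cluster state from an (assumed) runtime response."""
    return runtime.get("cluster_state", {}).get("state")


def cluster_is_active(body: str, wanted: str = HA_ACTIVE) -> bool:
    """True when the JSON response body reports the wanted cluster state."""
    return cluster_state(json.loads(body)) == wanted
```

A check like this is what you would plug into a retry loop as the readiness probe, comparable to the `until` condition of the Ansible task.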

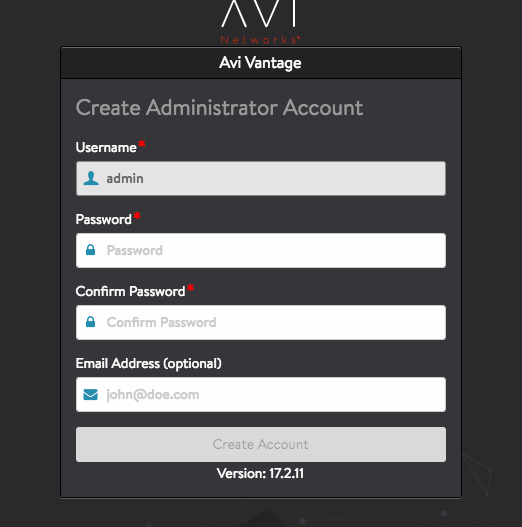

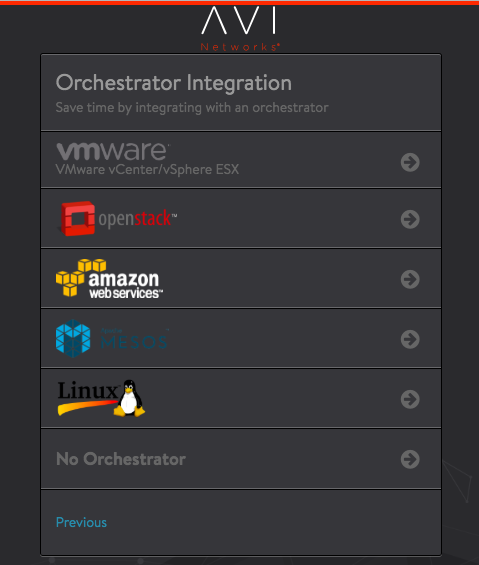

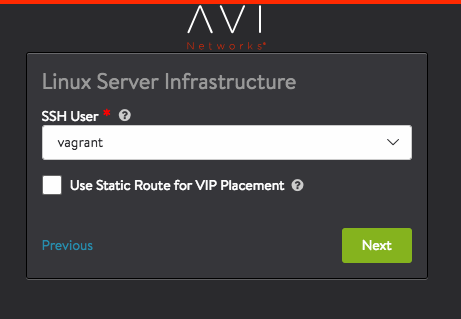

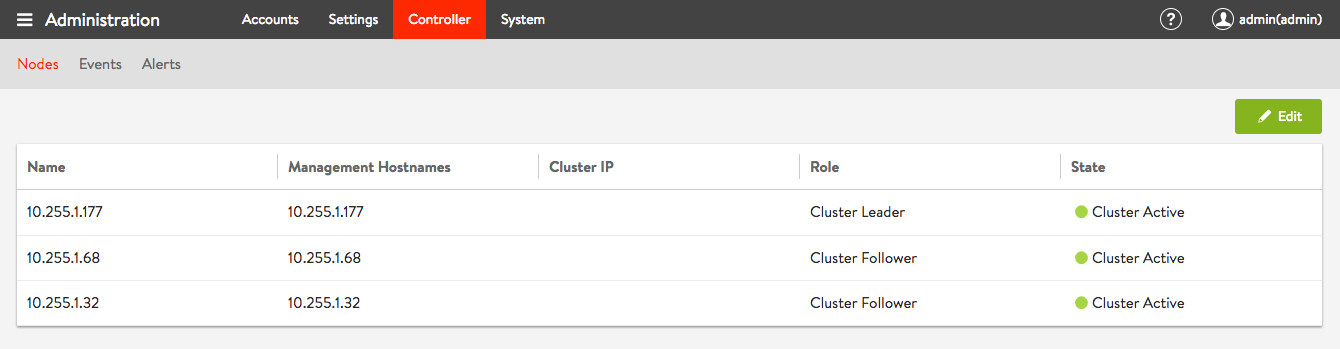

In the web console we can check whether the cluster has booted and is set up correctly:

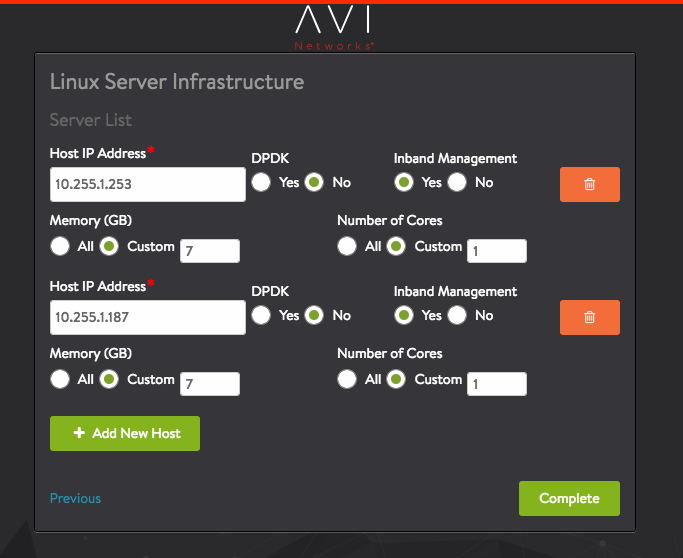

Last but not least, we need the Ansible playbook for the AVI service engine installation, which relies on the official AVI Ansible SE role:

```yaml
---
- hosts: avi-se
  user: '{{ ansible_ssh_user }}'
  gather_facts: "true"
  roles:
    - {role: ansible-role-avise, become: true}
```
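Like the controller role, the SE role first verifies that docker is available on the target hosts (the "Requirements | Check for docker" task in the output below). If you want a similar pre-flight check of your own on a host, here is a minimal standard-library Python sketch; the function names and the idea of running it by hand are mine, not part of the official role.

```python
import shutil
import subprocess


def missing_binaries(names: list[str]) -> list[str]:
    """Return the executables from `names` that cannot be found on PATH."""
    return [name for name in names if shutil.which(name) is None]


def docker_daemon_reachable() -> bool:
    """True if `docker info` succeeds, i.e. the client can talk to the daemon."""
    if shutil.which("docker") is None:
        return False
    result = subprocess.run(["docker", "info"], capture_output=True, text=True)
    return result.returncode == 0
```

Run on an SE host, `missing_binaries(["docker"])` should return an empty list and `docker_daemon_reachable()` should return `True` before you start the playbook.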

Let’s run the playbook for the service engines installation:

```
berndonline@lab:~/avi-lab-vagrant$ ansible-playbook ../avi-lab-provision/playbooks/avi-se-install.yml

PLAY [avi-se] *****************************************************************************************************************************************************

TASK [Gathering Facts] ********************************************************************************************************************************************
ok: [avi-se-2]
ok: [avi-se-1]

TASK [ansible-role-avisdk : Checking if avisdk python library is present] *****************************************************************************************
ok: [avi-se-1] => {
    "msg": "Please make sure avisdk is installed via pip. 'pip install avisdk --upgrade'"
}
ok: [avi-se-2] => {
    "msg": "Please make sure avisdk is installed via pip. 'pip install avisdk --upgrade'"
}

TASK [ansible-role-avise : Avi SE | Set facts] ********************************************************************************************************************
skipping: [avi-se-1]
skipping: [avi-se-2]

TASK [ansible-role-avise : Avi SE | Deployment] *******************************************************************************************************************
included: /home/berndonline/avi-lab-provision/roles/ansible-role-avise/tasks/docker/main.yml for avi-se-1, avi-se-2

TASK [ansible-role-avise : Avi SE | Check minimum requirements] ***************************************************************************************************
included: /home/berndonline/avi-lab-provision/roles/ansible-role-avise/tasks/docker/requirements.yml for avi-se-1, avi-se-2

TASK [ansible-role-avise : Avi SE | Requirements | Check for docker] **********************************************************************************************
ok: [avi-se-2]
ok: [avi-se-1]

TASK [ansible-role-avise : Avi SE | Requirements | Set facts] *****************************************************************************************************
ok: [avi-se-1]
ok: [avi-se-2]

TASK [ansible-role-avise : Avi SE | Requirements | Validate Parameters] *******************************************************************************************
ok: [avi-se-1] => {
    "changed": false,
    "msg": "All assertions passed"
}
ok: [avi-se-2] => {
    "changed": false,
    "msg": "All assertions passed"
}

...

TASK [ansible-role-avise : Avi SE | Services | systemd | Start the service since it's not running] ****************************************************************
changed: [avi-se-1]
changed: [avi-se-2]

RUNNING HANDLER [ansible-role-avise : Avi SE | Services | systemd | Daemon reload] ********************************************************************************
ok: [avi-se-2]
ok: [avi-se-1]

RUNNING HANDLER [ansible-role-avise : Avi SE | Services | Restart the avise service] ******************************************************************************
changed: [avi-se-2]
changed: [avi-se-1]

PLAY RECAP ********************************************************************************************************************************************************
avi-se-1 : ok=47 changed=7 unreachable=0 failed=0
avi-se-2 : ok=47 changed=7 unreachable=0 failed=0

berndonline@lab:~/avi-lab-vagrant$
```

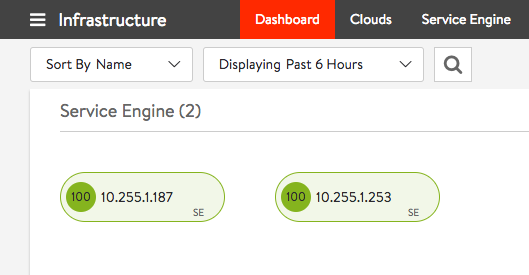

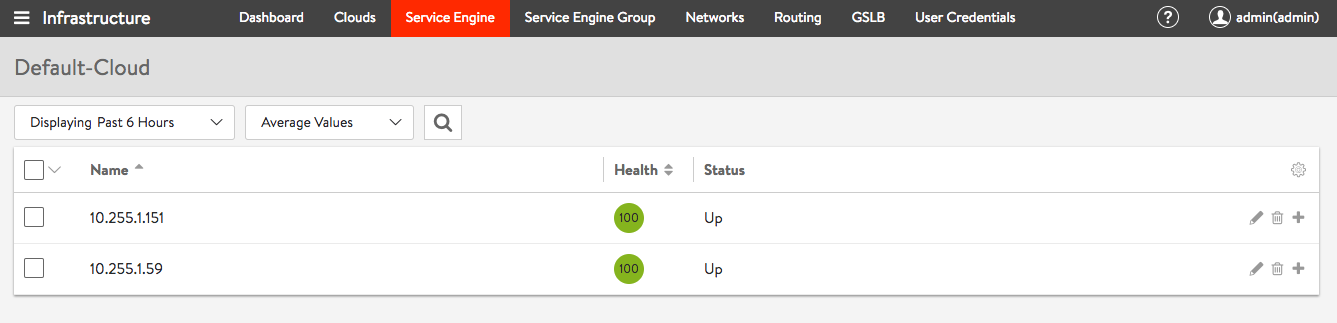

After a few minutes you will see the AVI service engines register automatically with the controller cluster, and you are ready to start on the detailed load balancing configuration.

Please share your feedback and leave a comment.