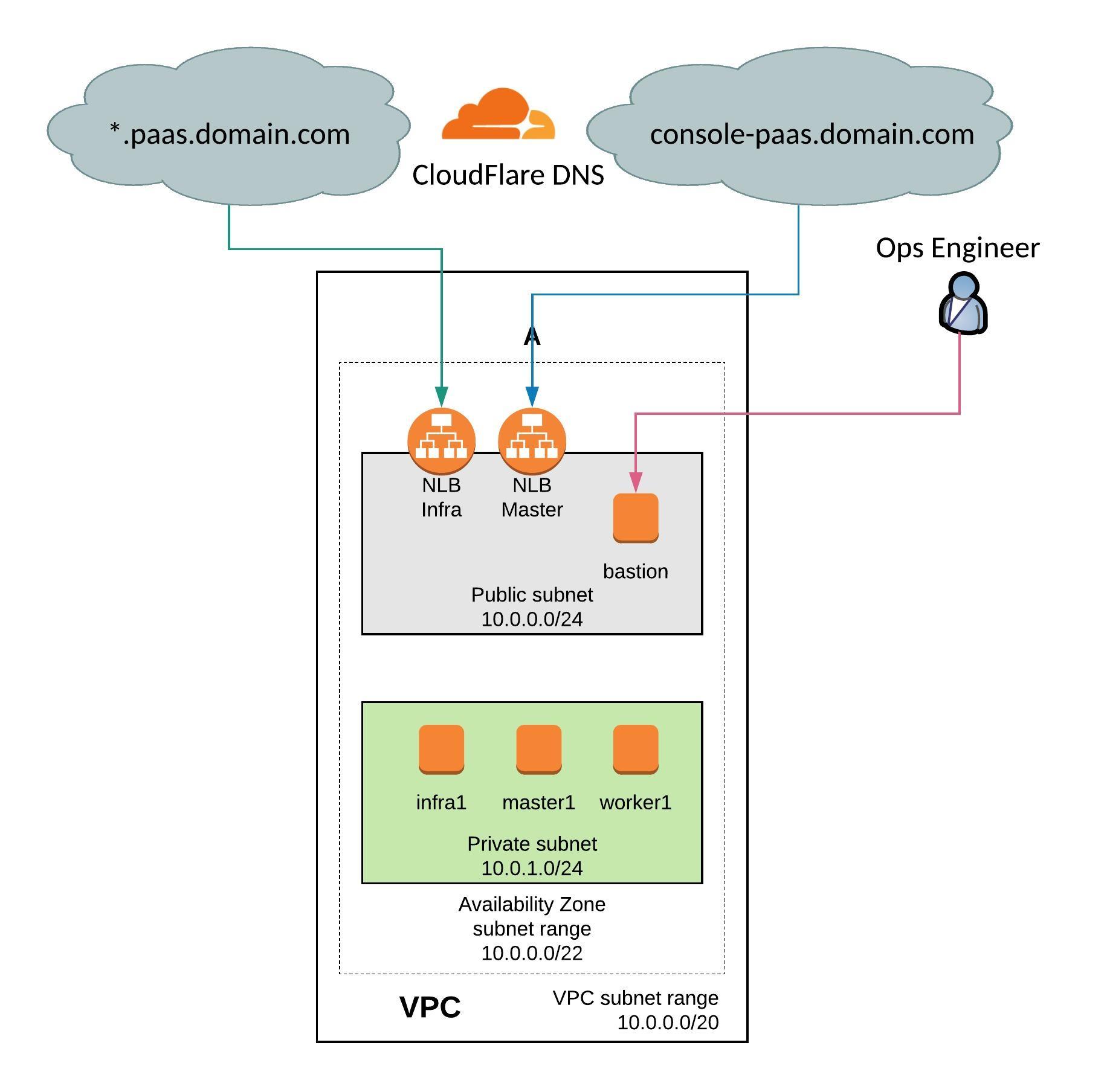

I have made a few changes to my Terraform configuration for OpenShift 3.11 on Amazon AWS. I downsized the environment because I didn't need that many nodes for a quick test setup. I added CloudFlare DNS to automatically create CNAME records for the AWS load balancers in the DNS zone, and an AWS S3 bucket for storing the backend state. You can find the new Terraform configuration in my GitHub repository: https://github.com/berndonline/openshift-terraform/tree/aws-dev

Starting with OpenShift 3.10, the inventory variables changed, so I modified the ansible-hosts template for the new configuration. You can see the changes in the hosts template: https://github.com/berndonline/openshift-terraform/blob/aws-dev/helper_scripts/ansible-hosts.template.txt

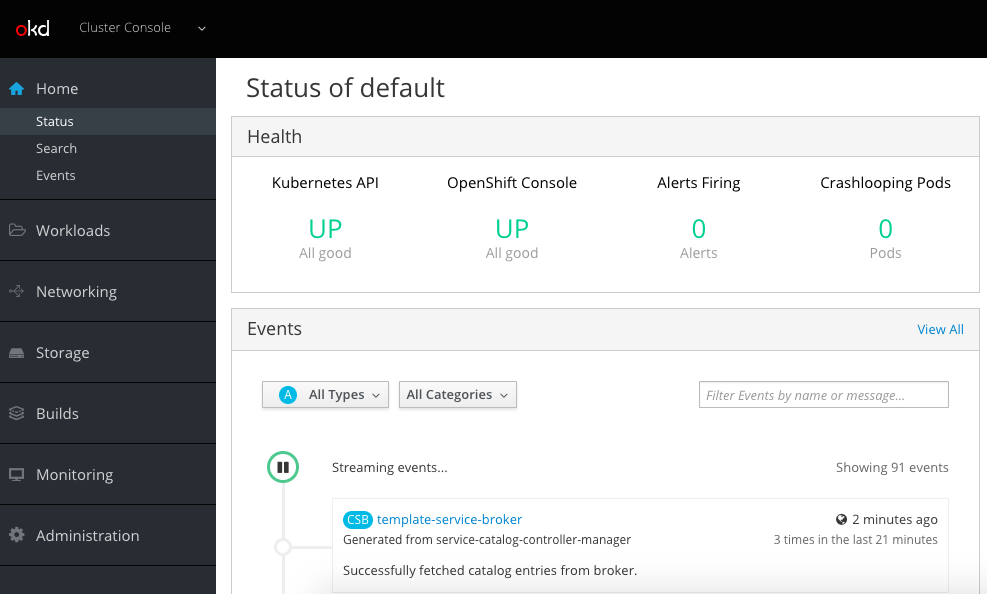

OpenShift 3.11, which runs on Kubernetes 1.11, changes a few things and puts a focus on the new cluster console, which is pretty nice. I recommend reading the 3.11 release notes for more details: https://docs.openshift.com/container-platform/3.11/release_notes/ocp_3_11_release_notes.html

I don't want to go into too much detail; just follow the steps below, starting with cloning my repository and checking out the aws-dev branch:

    git clone -b aws-dev https://github.com/berndonline/openshift-terraform.git
    cd ./openshift-terraform/
    ssh-keygen -b 2048 -t rsa -f ./helper_scripts/id_rsa -q -N ""
    chmod 600 ./helper_scripts/id_rsa
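If the key is missing or readable by other users, SSH ignores it later, which shows up as "Permission denied (publickey)" errors. A minimal sketch of a local check, run from the repository root; check_private_key is a hypothetical helper, not part of the repository:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the private key and its .pub file
# exist and the private key has mode exactly 600 (as set by the chmod above).
check_private_key() {
  local key="$1"
  if [ ! -f "$key" ]; then
    echo "missing private key: $key" >&2
    return 1
  fi
  if [ ! -f "$key.pub" ]; then
    echo "missing public key: $key.pub" >&2
    return 1
  fi
  # find prints the path only when the permission bits are exactly 0600
  if [ -z "$(find "$key" -perm 600)" ]; then
    echo "permissions on $key should be 600 (run: chmod 600 $key)" >&2
    return 1
  fi
}

# Usage:
#   check_private_key ./helper_scripts/id_rsa && echo "key OK"
```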

If you want to use CloudFlare, modify cloudflare.tf and add your CloudFlare API credentials; otherwise just delete the file. The same applies to the S3 backend: its configuration is in main.tf and can be removed if not needed.

CloudFlare and Amazon AWS credentials can be added through environment variables:

    export AWS_ACCESS_KEY_ID='<-YOUR-AWS-ACCESS-KEY->'
    export AWS_SECRET_ACCESS_KEY='<-YOUR-AWS-SECRET-KEY->'
    export TF_VAR_email='<-YOUR-CLOUDFLARE-EMAIL-ADDRESS->'
    export TF_VAR_token='<-YOUR-CLOUDFLARE-TOKEN->'
    export TF_VAR_domain='<-YOUR-CLOUDFLARE-DOMAIN->'
    export TF_VAR_htpasswd='<-YOUR-OPENSHIFT-DEMO-USER-HTPASSWD->'
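If one of these variables is missing, the failure only shows up once Terraform talks to the providers. A small pre-flight check can catch that earlier; this is a sketch, and require_env is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report every listed variable that is unset or empty,
# and return non-zero if any of them is missing.
require_env() {
  local missing=0 name
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name
    if [ -z "${!name}" ]; then
      echo "environment variable $name is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage, before terraform init/apply (drop the CloudFlare TF_VAR_* entries
# if you deleted cloudflare.tf):
#   require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY \
#     TF_VAR_email TF_VAR_token TF_VAR_domain TF_VAR_htpasswd
```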

Run terraform init and apply to create the environment.

    terraform init && terraform apply -auto-approve

Copy the SSH key and the ansible-hosts file to the bastion host, from which you run the Ansible OpenShift playbooks.

    scp -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -r ./helper_scripts/id_rsa centos@$(terraform output bastion):/home/centos/.ssh/
    scp -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -r ./inventory/ansible-hosts centos@$(terraform output bastion):/home/centos/ansible-hosts

I recommend waiting a few minutes while the AWS cloud-init script prepares the bastion host. Afterwards, continue with the pre and install playbooks. The commands below run them on the bastion host over SSH; you can also log in to the bastion host and run the playbooks directly.

    ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-pre.yml -i ~/ansible-hosts"
    ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-install.yml -i ~/ansible-hosts"
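If cloud-init has not finished yet, the playbook commands fail with "No such file or directory" or a missing ansible-playbook, as reported in the comments below. Instead of waiting a fixed time, a sketch like the following could poll the bastion until /openshift-ansible exists and ansible-playbook is on the PATH. The helpers retry and bastion_ready are hypothetical, and the ConnectTimeout and polling values are arbitrary choices:

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of the repository.

# retry ATTEMPTS DELAY CMD [ARGS...]: run CMD until it succeeds, at most
# ATTEMPTS times, sleeping DELAY seconds between failed attempts.
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    if ((i < attempts)); then
      echo "attempt $i/$attempts failed, retrying in ${delay}s" >&2
      sleep "$delay"
    fi
  done
  return 1
}

# bastion_ready HOST: succeeds once /openshift-ansible exists and
# ansible-playbook is installed on the bastion, the two things the
# playbook commands depend on.
bastion_ready() {
  ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null \
    -o ConnectTimeout=10 -i ./helper_scripts/id_rsa -l centos "$1" \
    'test -d /openshift-ansible && command -v ansible-playbook >/dev/null'
}

# Usage: poll every 30 seconds for up to 10 minutes, then run the playbooks.
#   retry 20 30 bastion_ready "$(terraform output bastion)"
```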

If the cluster deployment fails for any reason, you can run the uninstall playbook to return the nodes to a clean state, then start again from the beginning and run deploy_cluster.

    ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./openshift-ansible/playbooks/adhoc/uninstall.yml -i ~/ansible-hosts"
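The reset-and-retry cycle can be wrapped in a small function. This sketch assumes that starting from the beginning means rerunning the pre and install playbooks from the steps above; it does not call deploy_cluster directly. The names run_playbook and redeploy are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the ssh commands above: run one playbook
# (path relative to /openshift-ansible/ on the bastion) against ~/ansible-hosts.
# The ~ is quoted, so it is expanded by the shell on the bastion.
run_playbook() {
  local bastion="$1" playbook="$2"
  ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null \
    -i ./helper_scripts/id_rsa -l centos "$bastion" -A \
    "cd /openshift-ansible/ && ansible-playbook $playbook -i ~/ansible-hosts"
}

# Reset the nodes, then run the pre and install playbooks again,
# stopping at the first playbook that fails.
redeploy() {
  local bastion="$1"
  run_playbook "$bastion" ./openshift-ansible/playbooks/adhoc/uninstall.yml &&
    run_playbook "$bastion" ./playbooks/openshift-pre.yml &&
    run_playbook "$bastion" ./playbooks/openshift-install.yml
}

# Usage:
#   redeploy "$(terraform output bastion)"
```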

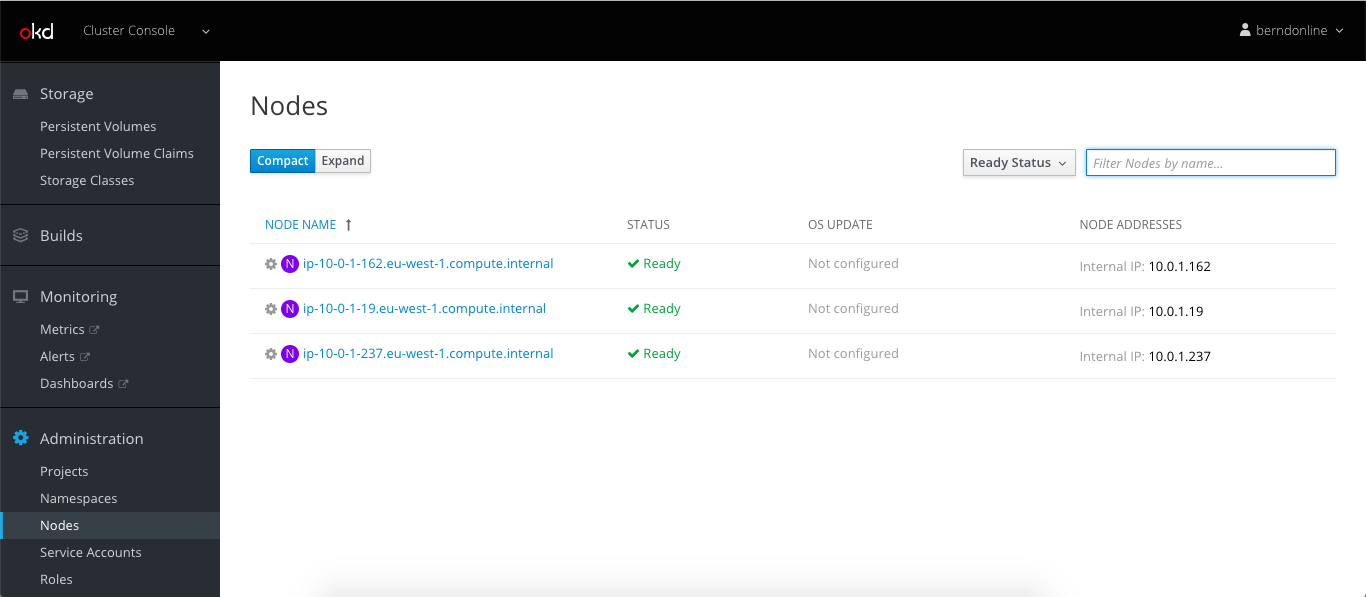

Here are some screenshots of the new cluster console:

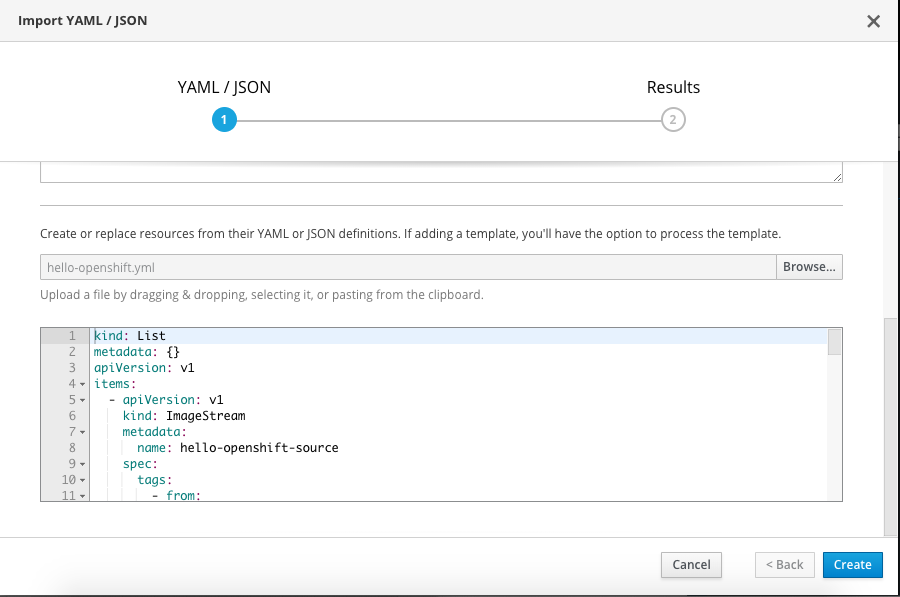

Let’s create a project and import my hello-openshift.yml build configuration:

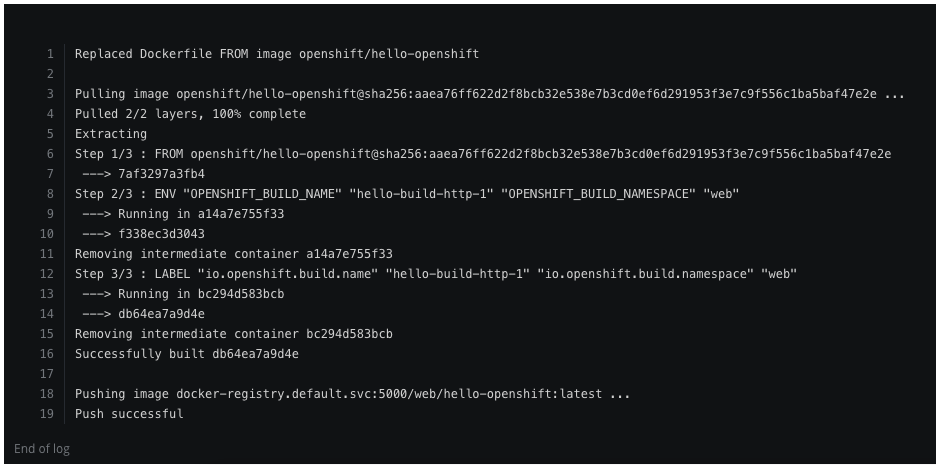

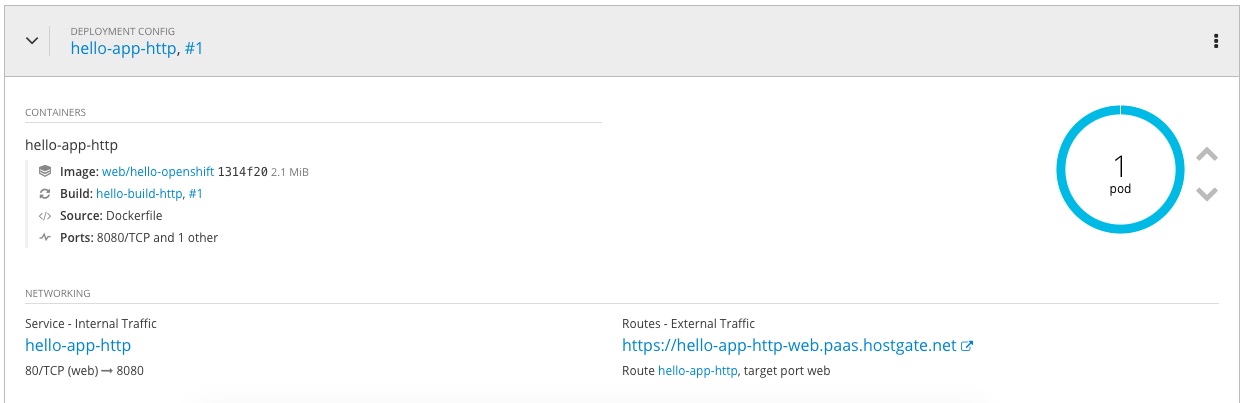

The build completed successfully and the hello-openshift container was deployed:

My example hello-openshift application:

When you have finished testing, run terraform destroy:

    terraform destroy -force

Newer Terraform releases no longer accept -force; use terraform destroy -auto-approve instead.

Hello again,

I am trying to create the environment, but Terraform gives me an error that it cannot find the AMI.

Can I try with a normal CentOS AMI?

After that, I would also like to modify the helper scripts to use RHEL 7.5 and an OCP trial subscription so I can install the enterprise edition.

If you change the region, you need to change the AMI ID as well, because the two are linked: AMI IDs are specific to a region.

Look in variables.tf:

    ...
    variable "aws_region" {
      description = "AWS region to launch servers."
      default     = "eu-west-1"
    }

    variable "aws_amis" {
      default = {
        eu-west-1 = "ami-6e28b517"
      }
    }
    ...

-Bernd
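To look up a CentOS 7 AMI ID for another region, the AWS CLI can query the Marketplace images. This is a sketch: centos7_ami is a hypothetical helper, it assumes the AWS CLI is installed and configured, and the product code below is an assumption for the official CentOS 7 image, so verify it before use. Marketplace images may also require accepting the subscription terms in the AWS Marketplace before an instance can be launched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the newest CentOS 7 AMI ID for a region.
# ASSUMPTION: aw0evgkw8e5c1q413zgy5pjce is the AWS Marketplace product code
# of the official CentOS 7 (x86_64) image; verify it before relying on it.
centos7_ami() {
  local region="$1"
  aws ec2 describe-images \
    --region "$region" \
    --owners aws-marketplace \
    --filters "Name=product-code,Values=aw0evgkw8e5c1q413zgy5pjce" \
    --query 'sort_by(Images, &CreationDate)[-1].ImageId' \
    --output text
}

# Usage:
#   centos7_ami us-west-2
```

Put the returned ID into the aws_amis map in variables.tf under the same region key you set in aws_region.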

Hello Bernd Malmqvist, thank you for these tutorials.

After setting up the infrastructure using Terraform, I tried running Ansible as suggested with the command below, but it fails to connect to the hosts:

    sudo ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/bastion.pem -l ec2-user $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-pre.yml -i ~/ansible-hosts"

    Warning: Permanently added 'ec2-54-186-30-62.us-west-2.compute.amazonaws.com,54.186.30.62' (ECDSA) to the list of known hosts.

    PLAY [Apply pre node configuration] ********************************************

    TASK [Gathering Facts] *********************************************************
    fatal: [ip-10-0-1-110.us-west-2.compute.internal]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: Permission denied (publickey,gssapi-keyex,gssapi-with-mic).\r\n", "unreachable": true}
    fatal: [ip-10-0-1-224.us-west-2.compute.internal]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: Permission denied (publickey,gssapi-keyex,gssapi-with-mic).\r\n", "unreachable": true}
    fatal: [ip-10-0-1-252.us-west-2.compute.internal]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: Permission denied (publickey,gssapi-keyex,gssapi-with-mic).\r\n", "unreachable": true}

    PLAY RECAP *********************************************************************
    ip-10-0-1-110.us-west-2.compute.internal : ok=0 changed=0 unreachable=1 failed=0
    ip-10-0-1-224.us-west-2.compute.internal : ok=0 changed=0 unreachable=1 failed=0
    ip-10-0-1-252.us-west-2.compute.internal : ok=0 changed=0 unreachable=1 failed=0

    [WARNING]: Could not create retry file '/openshift-ansible/playbooks/openshift-pre.retry'. [Errno 13] Permission denied: u'/openshift-ansible/playbooks/openshift-pre.retry'

It seems there is a problem with the SSH key on the OpenShift nodes; the error says permission denied. Did you copy the private SSH key to the bastion host?
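Note that the failing command uses ./helper_scripts/bastion.pem and the ec2-user account, while the steps above use ./helper_scripts/id_rsa and the centos user. To confirm that the private key is on the bastion and that the nodes accept it, an Ansible ad-hoc ping can be run from the bastion. A sketch, assuming the inventory does not point Ansible at a different key; check_node_ssh is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical check, not part of the repository: list the copied key on the
# bastion, then ask Ansible to reach every host in ~/ansible-hosts over SSH.
# "UNREACHABLE ... Permission denied (publickey...)" in the output means the
# nodes do not accept the key Ansible is using.
check_node_ssh() {
  local bastion="$1"
  ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null \
    -i ./helper_scripts/id_rsa -l centos "$bastion" \
    'ls -l ~/.ssh/id_rsa && ansible all -i ~/ansible-hosts -m ping'
}

# Usage:
#   check_node_ssh "$(terraform output bastion)"
```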

Hello, thank you for the tutorial!

I had to modify some items because some regions don't have three availability zones. Either way, I'm running into an issue with the bastion instance when I run the ssh command:

    ssh -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null -i ./helper_scripts/id_rsa -l centos $(terraform output bastion) -A "cd /openshift-ansible/ && ansible-playbook ./playbooks/openshift-pre.yml -i ~/ansible-hosts"

    bash: line 0: cd: /openshift-ansible/: No such file or directory

I got past this by cloning the repository and using scp to transfer the files.

It also seems as if ansible-playbook is not installed on the bastion.

Hi Daniel,

You need to wait a few minutes (about 5 minutes) after creating the instances, because the additional components are installed by the cloud-init config and this takes a bit of time.

Best,

Bernd

The bastion preparation script fails unless the line below is added to it:

    sudo pip install --upgrade setuptools